def pool_forward(A_prev, hparameters, mode = "max"):

"""

Implements the forward pass of the pooling layer

Arguments:

A_prev -- Input data, numpy array of shape (m, n_H_prev, n_W_prev, n_C_prev)

hparameters -- python dictionary containing "f" and "stride"

mode -- the pooling mode you would like to use, defined as a string ("max" or "average")

Returns:

A -- output of the pool layer, a numpy array of shape (m, n_H, n_W, n_C)

cache -- cache used in the backward pass of the pooling layer, contains the input and hparameters

"""

# Retrieve dimensions from the input shape

(m, n_H_prev, n_W_prev, n_C_prev) = A_prev.shape

# Retrieve hyperparameters from "hparameters"

f = hparameters["f"]

stride = hparameters["stride"]

# Define the dimensions of the output

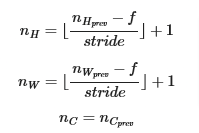

n_H = int(1 + (n_H_prev - f) / stride)

n_W = int(1 + (n_W_prev - f) / stride)

n_C = n_C_prev

# Initialize output matrix A

A = np.zeros((m, n_H, n_W, n_C))

for i in range(0,m): # loop over the training examples

for h in range(0,n_H): # loop on the vertical axis of the output volume

for w in range(0,n_W): # loop on the horizontal axis of the output volume

for c in range (0,n_C): # loop over the channels of the output volume

# Find the corners of the current "slice" (≈4 lines)

vert_start = h*stride

vert_end = vert_start + f

horiz_start = w*stride

horiz_end = horiz_start + f

# Use the corners to define the current slice on the ith training example of A_prev, channel c. (≈1 line)

a_prev_slice = A_prev[i,vert_start:vert_end,horiz_start:horiz_end,c]

# Compute the pooling operation on the slice. Use an if statment to differentiate the modes. Use np.max/np.mean.

if mode == "max":

A[i, h, w, c] = np.max(a_prev_slice)

elif mode == "average":

A[i, h, w, c] = np.mean(a_prev_slice)

# Store the input and hparameters in "cache" for pool_backward()

cache = (A_prev, hparameters)

# Making sure your output shape is correct

assert(A.shape == (m, n_H, n_W, n_C))

return A, cachePooling Layers

Pooling layers make the features a network detects more robust.

The pooling (POOL) layer reduces the height and width of the input. This reduces computation and helps make feature detectors more invariant to where a feature appears in the input. For example, if a feature is detected (a large value such as 9) anywhere within a pooling window, max pooling keeps that value and passes it on. The two types of pooling layers are:

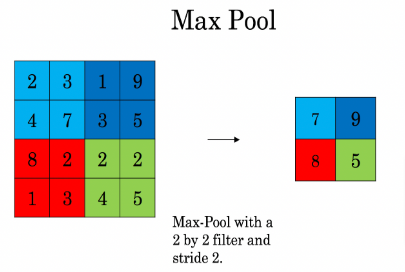

Max Pooling

- Max-pooling layer: slides an (f,f) window over the input and stores the max value of the window in the output.

- A 4x4 input becomes a 2x2 output (for instance with f=2, s=2)

- It has no parameters to learn; it only downsamples the input
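To make the sliding-window idea concrete, here is a minimal sketch of max pooling on a single 2D array with no padding. The helper name `max_pool_2d` and the 4x4 input values are made up for illustration; with f=2 and s=2 each non-overlapping 2x2 block is replaced by its largest value.

```python
import numpy as np

def max_pool_2d(x, f, stride):
    """Hypothetical helper: max pooling over one 2D array, no padding."""
    n_H = (x.shape[0] - f) // stride + 1
    n_W = (x.shape[1] - f) // stride + 1
    out = np.zeros((n_H, n_W))
    for h in range(n_H):
        for w in range(n_W):
            # f x f window whose top-left corner is (h*stride, w*stride)
            window = x[h * stride:h * stride + f, w * stride:w * stride + f]
            out[h, w] = window.max()
    return out

# Made-up 4x4 input
x = np.array([[1, 3, 2, 1],
              [2, 9, 1, 1],
              [1, 3, 2, 3],
              [5, 6, 1, 2]])
print(max_pool_2d(x, f=2, stride=2))
# [[9. 2.]
#  [6. 3.]]
```

Note that nothing in the helper is learned: `f` and `stride` are fixed hyperparameters.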

Example:

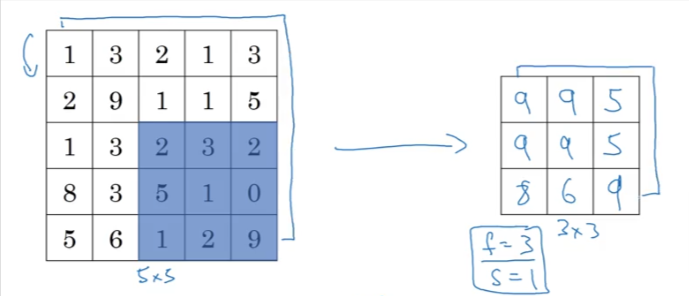

- Max pooling a 5x5 input with f=3 and s=1 gives a 3x3 output

- With a 3D input (5x5xn_C), pooling is applied to each channel independently, so the output is 3x3xn_C: the number of channels does not change
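A short sketch of the channel-wise behaviour, assuming a random 5x5x2 input (the helper `max_pool_channels` is hypothetical): each channel is pooled on its own, so only the height and width shrink.

```python
import numpy as np

def max_pool_channels(x, f, stride):
    """Hypothetical helper: max-pool an (n_H_prev, n_W_prev, n_C) volume channel by channel."""
    n_H_prev, n_W_prev, n_C = x.shape
    n_H = (n_H_prev - f) // stride + 1
    n_W = (n_W_prev - f) // stride + 1
    out = np.zeros((n_H, n_W, n_C))
    for c in range(n_C):  # every channel is pooled independently
        for h in range(n_H):
            for w in range(n_W):
                vs, hs = h * stride, w * stride
                out[h, w, c] = x[vs:vs + f, hs:hs + f, c].max()
    return out

x = np.random.default_rng(0).standard_normal((5, 5, 2))
y = max_pool_channels(x, f=3, stride=1)
print(y.shape)  # (3, 3, 2): spatial size shrinks, channel count stays the same
```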

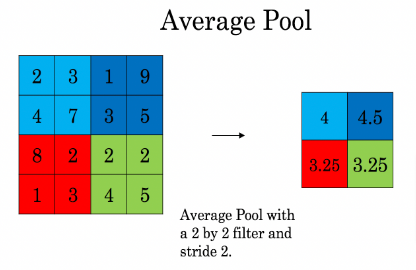

Average Pooling

- Average-pooling layer: slides an (f,f) window over the input and stores the average value of the window in the output.

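- As with max pooling, a 4x4 input becomes a 2x2 output (for instance with f=2, s=2)

- It has no parameters to learn; it only downsamples the input

- It is used less often than max pooling; the exception is very deep in a network, where it can collapse each channel to a single value (e.g. a 7x7x1000 volume into 1x1x1000)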
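The same window loop with `mean` instead of `max` gives average pooling. The sketch below reuses a made-up 4x4 input (the helper `avg_pool_2d` is hypothetical) and also shows the "deep in the network" case, where averaging over the whole height and width collapses, for example, a 7x7x1000 volume to 1x1x1000.

```python
import numpy as np

def avg_pool_2d(x, f, stride):
    """Hypothetical helper: average pooling over one 2D array, no padding."""
    n_H = (x.shape[0] - f) // stride + 1
    n_W = (x.shape[1] - f) // stride + 1
    out = np.zeros((n_H, n_W))
    for h in range(n_H):
        for w in range(n_W):
            out[h, w] = x[h * stride:h * stride + f, w * stride:w * stride + f].mean()
    return out

x = np.array([[1, 3, 2, 1],
              [2, 9, 1, 1],
              [1, 3, 2, 3],
              [5, 6, 1, 2]])
pooled = avg_pool_2d(x, f=2, stride=2)  # values [[3.75, 1.25], [3.75, 2.0]]

# Averaging over the full height and width of each channel (global average pooling)
volume = np.random.default_rng(0).standard_normal((7, 7, 1000))
gap = volume.mean(axis=(0, 1), keepdims=True)
print(pooled)
print(gap.shape)  # (1, 1, 1000)
```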

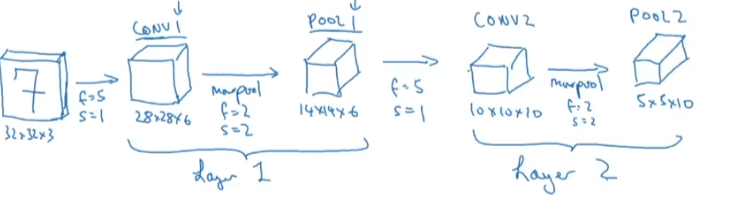

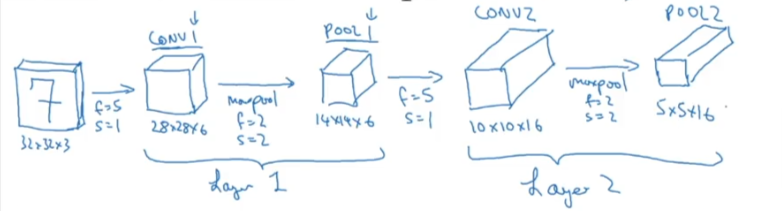

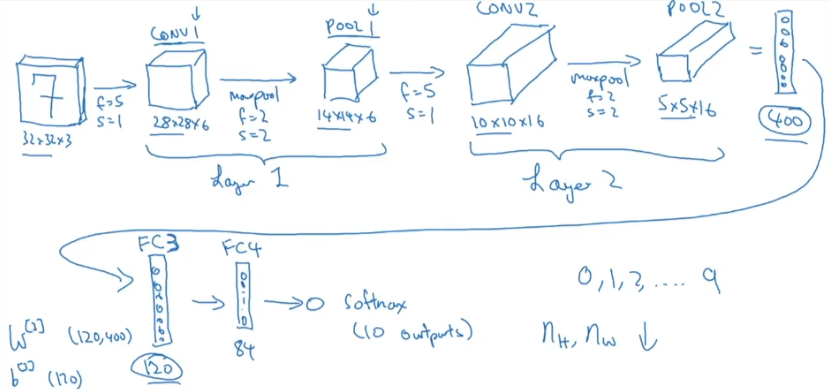

Since a pooling layer has no parameters (only the hyperparameters f and s), it is usually counted together with the preceding conv layer as a single layer, as in the example below where CONV1 and POOL1 form Layer 1.

Formulas
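For reference, the standard output-size formula for conv and pooling layers can be written as below, where s is the stride and p the padding. Pooling layers typically use p = 0, which is what `pool_forward` assumes, and pooling never changes the number of channels:

$$
n_H = \left\lfloor \frac{n_{H,prev} + 2p - f}{s} \right\rfloor + 1, \qquad
n_W = \left\lfloor \frac{n_{W,prev} + 2p - f}{s} \right\rfloor + 1, \qquad
n_C = n_{C,prev}
$$

For example, a 32x32 input with f=5, s=1, p=0 gives (32 - 5)/1 + 1 = 28, and a 28x28 input with f=2, s=2 gives (28 - 2)/2 + 1 = 14.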

Example

- Task: digit recognition (e.g. classifying an image of a handwritten 7)

- Input: a 32x32x3 image

- CONV1: f=5, s=1, 6 filters -> 28x28x6 output

- POOL1 (max pooling): f=2, s=2 -> 14x14x6 output

- A pooling layer has no parameters (weights), so CONV1 and POOL1 together are counted as Layer 1

- CONV2: f=5, s=1, 16 filters -> 10x10x16 output

- POOL2 (max pooling): f=2, s=2 -> 5x5x16 output

- CONV2 and POOL2 together form Layer 2

- Flatten the 5x5x16 volume into a vector of 400 units

- FC3: a fully connected layer of 120 units

- It is called fully connected because each of the 400 input units is connected to every one of the 120 units, so its weight matrix W has shape (120, 400) plus a bias of shape (120, 1); it is not a one-to-one connection

- FC4: another fully connected layer of 84 units

- Output: a softmax layer with 10 units (one per digit 0-9)

- As you go deeper, the number of channels increases

- while the height and width decrease
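The shape bookkeeping in the example can be checked with the output-size formula. This sketch assumes CONV2 has 16 filters (so that the flattened POOL2 output is 5x5x16 = 400 units, matching the 400 inputs of the first fully connected layer); `out_size` is a hypothetical helper, and the FC weights are zero placeholders used only to show the shapes.

```python
import numpy as np

def out_size(n_prev, f, s, p=0):
    """Hypothetical helper: floor((n_prev + 2p - f) / s) + 1."""
    return (n_prev + 2 * p - f) // s + 1

# (name, kind, f, s, number of filters); 16 filters in CONV2 is an assumption
layers = [
    ("CONV1", "conv", 5, 1, 6),
    ("POOL1", "pool", 2, 2, None),
    ("CONV2", "conv", 5, 1, 16),
    ("POOL2", "pool", 2, 2, None),
]

n, c = 32, 3  # 32x32x3 input image
shapes = {}
for name, kind, f, s, n_filters in layers:
    n = out_size(n, f, s)
    if kind == "conv":
        c = n_filters  # a conv layer outputs one channel per filter
    # a pooling layer leaves the channel count unchanged
    shapes[name] = (n, n, c)
    print(name, shapes[name])

flat = n * n * c  # flatten the POOL2 volume into one vector
print("flatten", flat)

# FC3: every one of the `flat` inputs feeds every one of the 120 units
a2 = np.zeros((flat, 1))  # placeholder activations
W3, b3 = np.zeros((120, flat)), np.zeros((120, 1))
z3 = W3 @ a2 + b3
print("FC3", W3.shape, z3.shape)
```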

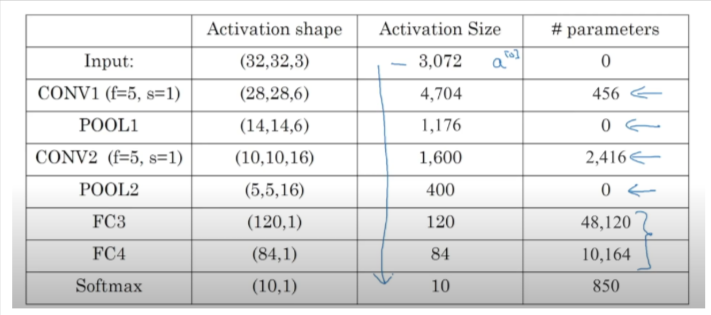

- The table above breaks down the activation shape, activation size, and number of parameters of each layer
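The parameter counts can be reproduced with simple arithmetic. This sketch assumes one bias per conv filter and per fully connected unit, and 16 filters in CONV2 (so FC3 receives 400 inputs); the helper names are hypothetical. It also makes the point from above explicit: the pooling layers contribute zero parameters.

```python
def conv_params(f, n_c_prev, n_filters):
    # each filter has f*f*n_c_prev weights plus one bias
    return (f * f * n_c_prev + 1) * n_filters

def fc_params(n_in, n_out):
    # weight matrix of shape (n_out, n_in) plus one bias per output unit
    return n_in * n_out + n_out

params = {
    "CONV1": conv_params(5, 3, 6),    # 456
    "POOL1": 0,                       # pooling has no learnable parameters
    "CONV2": conv_params(5, 6, 16),   # 2416 (assumes 16 filters)
    "POOL2": 0,
    "FC3": fc_params(400, 120),       # 48120
    "FC4": fc_params(120, 84),        # 10164
    "Softmax": fc_params(84, 10),     # 850
}
for name, count in params.items():
    print(name, count)
print("total", sum(params.values()))  # 62006
```

Most of the parameters sit in the fully connected layers, while the conv layers stay small because their filters are shared across all spatial positions.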

Code

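Using the `pool_forward` function from above, let's compute max and average pooling on a random input. With n_H_prev = n_W_prev = 4, f = 3 and stride = 2, the output size is floor((4 - 3) / 2) + 1 = 1, so A has shape (2, 1, 1, 3).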

```python
import numpy as np

np.random.seed(1)
A_prev = np.random.randn(2, 4, 4, 3)
hparameters = {"stride": 2, "f": 3}

A, cache = pool_forward(A_prev, hparameters)
print("mode = max")
print("A =", A)
print()

A, cache = pool_forward(A_prev, hparameters, mode="average")
print("mode = average")
print("A =", A)
```

```
mode = max
A = [[[[1.74481176 0.86540763 1.13376944]]]

 [[[1.13162939 1.51981682 2.18557541]]]]

mode = average
A = [[[[ 0.02105773 -0.20328806 -0.40389855]]]

 [[[-0.22154621 0.51716526 0.48155844]]]]
```
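The four nested loops are easy to read but slow for large inputs. As an alternative sketch (not the implementation used above), NumPy's `sliding_window_view` (NumPy 1.20 or later) can build every f x f window at once; the function name `pool_forward_vectorized` is hypothetical, and it assumes no padding, like `pool_forward`.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def pool_forward_vectorized(A_prev, hparameters, mode="max"):
    """Hypothetical loop-free variant of pool_forward (no padding)."""
    f = hparameters["f"]
    stride = hparameters["stride"]
    # All f x f windows over the height and width axes:
    # shape (m, n_H_prev - f + 1, n_W_prev - f + 1, n_C_prev, f, f)
    windows = sliding_window_view(A_prev, (f, f), axis=(1, 2))
    # Keep only the window positions the stride lands on -> (m, n_H, n_W, n_C, f, f)
    windows = windows[:, ::stride, ::stride]
    if mode == "max":
        A = windows.max(axis=(-2, -1))
    elif mode == "average":
        A = windows.mean(axis=(-2, -1))
    else:
        raise ValueError('mode must be "max" or "average"')
    cache = (A_prev, hparameters)
    return A, cache

np.random.seed(1)
A_prev = np.random.randn(2, 4, 4, 3)
A, cache = pool_forward_vectorized(A_prev, {"stride": 2, "f": 3})
print(A.shape)  # (2, 1, 1, 3)
```

The strided slice works because window positions 0, s, 2s, ... along each axis are exactly the corners `h * stride` and `w * stride` used in the loop version.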