Create a detailed data architecture design for a hospital network.GenAI & Architecture Design

Objectives

Use generative AI to create an efficient data architecture design for the following scenarios:

Healthcare industry (EHR System):

Centralize patient information from various sources, strict data security, and robust analytics for clinical decision-making.

Retail industry (CRM System):

Consolidate customer data for personalized marketing, prioritize data security, and implement analytics for segmentation and recommendations.

EHR

A hospital network is implementing an electronic health records (EHR) system to centralize patient information, streamline medical records management, and improve patient care delivery. The data engineering team has developed the following considerations for the data architecture design.

Integration of data from various sources such as patient registration systems, laboratory systems, imaging systems, and medical devices.

Utilization of a secure and scalable data storage solution to store sensitive patient information.

Designing a comprehensive data model to represent patient demographics, medical history, diagnoses, treatments, and prescriptions.

Implementing strict data governance policies to ensure patient privacy, data security, regulatory compliance, and data quality standards.

Implementing robust access controls, encryption, and auditing mechanisms to protect patient data from unauthorized access or breaches.

Solution

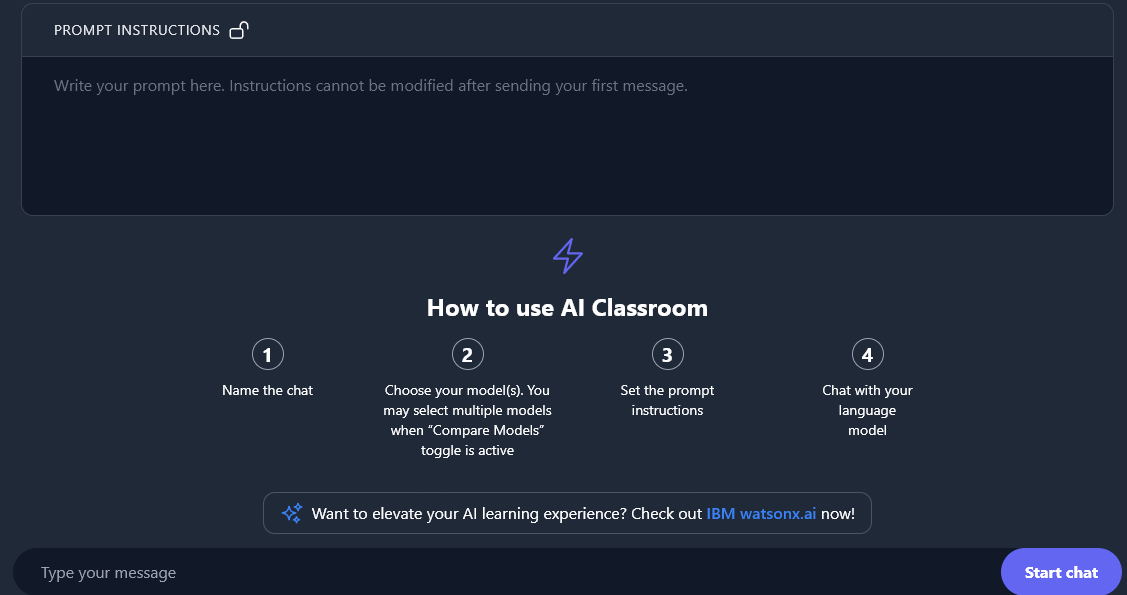

You may use the generative AI lab to incorporate these considerations to propose a detailed data architecture design plan. However, giving too much information to the model simultaneously might result in a biased response. So first, run the following prompt and check the response.

Input 1

Response 1

Here is a detailed data architecture design for a hospital network:

Overview

The hospital network is a complex system that requires a robust and scalable data architecture to support its various functions and services. The architecture should be designed to ensure data security, integrity, and compliance with regulatory requirements.

Components

Data Sources

Patient Data: Electronic Health Records (EHRs), Medical Records, and other patient data

Financial Data: Billing, Insurance, and Financial Management

Administrative Data: Patient Demographics, Insurance Claims, and Administrative Tasks

Operational Data: Equipment, Supplies, and Maintenance

Data Storage

Database: A relational database management system (RDBMS) such as Oracle, MySQL, or PostgreSQL to store patient data, financial data, and operational data

Data Warehouse: A data warehouse to store aggregated and transformed data for reporting and analytics

Data Processing

Data Ingestion: Data from various sources is ingested into the database using APIs, file transfers, or other data integration methods

Data Processing: Data is processed and transformed using ETL (Extract, Transform, Load) tools or other data processing technologies

Data Storage: Data is stored in the database and data warehouse
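The ingestion, ETL, and storage steps above can be sketched as a tiny extract-transform-load pipeline. This is a minimal sketch that assumes two hypothetical sources (a registration CSV export and a lab-results JSON feed) and uses an in-memory SQLite database as a stand-in for the hospital's RDBMS; all field and table names are illustrative.

```python
import csv
import io
import json
import sqlite3

# Hypothetical source extracts: a registration CSV and a lab-results JSON feed.
REGISTRATION_CSV = """patient_id,full_name,dob
P001, Ada Lovelace ,1815-12-10
P002,Alan Turing,1912-06-23
"""
LAB_JSON = '[{"patient": "P001", "test": "HbA1c", "value": "5.4", "unit": "%"}]'


def extract():
    """Extract raw rows from each source system."""
    patients = list(csv.DictReader(io.StringIO(REGISTRATION_CSV)))
    labs = json.loads(LAB_JSON)
    return patients, labs


def transform(patients, labs):
    """Trim whitespace, align field names, and cast numeric values."""
    clean_patients = [
        (p["patient_id"].strip(), p["full_name"].strip(), p["dob"].strip())
        for p in patients
    ]
    clean_labs = [
        (lab["patient"], lab["test"], float(lab["value"]), lab["unit"])
        for lab in labs
    ]
    return clean_patients, clean_labs


def load(conn, patients, labs):
    """Load the transformed rows into the target tables."""
    conn.execute("CREATE TABLE patient (patient_id TEXT PRIMARY KEY, name TEXT, dob TEXT)")
    conn.execute(
        "CREATE TABLE lab_result (patient_id TEXT REFERENCES patient(patient_id), "
        "test TEXT, value REAL, unit TEXT)"
    )
    conn.executemany("INSERT INTO patient VALUES (?, ?, ?)", patients)
    conn.executemany("INSERT INTO lab_result VALUES (?, ?, ?, ?)", labs)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(conn, *transform(*extract()))
# prints [('Ada Lovelace', 'HbA1c', 5.4)]
print(conn.execute(
    "SELECT name, test, value FROM patient JOIN lab_result USING (patient_id)"
).fetchall())
```

In a real deployment the extract step would call the source systems' APIs or pick up file transfers, and the load target would be the production database or warehouse.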

Data Security

Authentication: Authentication and authorization mechanisms to ensure access control and data protection

Encryption: Data encryption to protect sensitive information

Access Control: Role-based access control to restrict access to authorized personnel

Data Analytics

Reporting: Data analytics tools to generate reports and dashboards for decision-making

Predictive Analytics: Machine learning algorithms to predict patient outcomes and identify trends

Integration

APIs: APIs to integrate with external systems, such as billing and insurance companies

Web Services: Web services to integrate with other hospital systems, such as radiology and pharmacy

Monitoring and Maintenance

Monitoring: Real-time monitoring of system performance, security, and data quality

Maintenance: Automated maintenance and patching of the system

Data Architecture Components

Data Lake

A centralized repository for storing raw, unprocessed data

Data Lake is typically built on scalable object storage or a distributed file system and supports large amounts of unstructured and semi-structured data

Data Warehouse

A centralized repository for storing aggregated and transformed data

Data Warehouse is a relational database management system that supports data analysis and reporting

Data Mart

A separate database for storing specific types of data, such as patient demographics or billing data

Data Mart is a specialized database that supports data analysis and reporting

Data Lake Analytics

A data analytics platform for processing and analyzing large amounts of data

Data Lake Analytics is a cloud-based platform that supports data processing, data visualization, and machine learning

Data Integration Layer

A layer that integrates data from various sources and systems

Data Integration Layer is a messaging queue or event-driven architecture that supports data exchange between systems

Data Architecture Patterns

Event-Driven Architecture (EDA): A pattern that uses events to trigger data processing and decision-making

Service-Oriented Architecture (SOA): A pattern that uses services to expose data and functionality

Microservices Architecture: A pattern that breaks down the system into smaller, independent services that communicate with each other

Event-Driven Microservices: A pattern that uses events to trigger microservices and enable loose coupling

Security Considerations

Data Encryption: Data encryption is used to protect sensitive information

Access Control: Role-based access control is used to restrict access to authorized personnel

Authentication: Authentication and authorization mechanisms are used to ensure access control

Data Masking: Data masking is used to protect sensitive information

Regular Security Audits: Regular security audits are performed to identify vulnerabilities and ensure compliance with regulatory requirements
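Data masking, listed above, can be illustrated with a small function that hides most of an identifier before records leave a restricted zone, for example when they are copied to analytics or test environments. This is a minimal sketch; the field names and the masking rules (keep the last four characters of phone numbers and SSNs, keep only the first letter of an email's local part) are assumptions, not requirements from the design.

```python
import copy


def mask_tail(value: str, visible: int = 4) -> str:
    """Replace all but the last `visible` characters with '*'."""
    if len(value) <= visible:
        return "*" * len(value)
    return "*" * (len(value) - visible) + value[-visible:]


def mask_email(value: str) -> str:
    """Keep the first character of the local part and the domain."""
    local, _, domain = value.partition("@")
    return (local[:1] + "***@" + domain) if domain else "***"


# Hypothetical masking rules per field; unlisted fields pass through unchanged.
MASKING_RULES = {"phone": mask_tail, "ssn": mask_tail, "email": mask_email}


def mask_record(record: dict) -> dict:
    """Return a masked copy; the original record is left untouched."""
    masked = copy.deepcopy(record)
    for field, rule in MASKING_RULES.items():
        if masked.get(field) is not None:
            masked[field] = rule(str(masked[field]))
    return masked


patient = {"patient_id": "P001", "phone": "5551234567", "email": "ada@example.org"}
# prints {'patient_id': 'P001', 'phone': '******4567', 'email': 'a***@example.org'}
print(mask_record(patient))
```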

Scalability and Performance

Horizontal Scaling: Horizontal scaling is used to increase the number of nodes in the system

Load Balancing: Load balancing is used to distribute traffic across multiple nodes

Caching: Caching is used to reduce the load on the database and improve performance

Database Clustering: Database clustering is used to improve performance and scalability

Cloud-based Infrastructure: Cloud-based infrastructure is used to scale the system as needed

Monitoring and Maintenance

Monitoring: Real-time monitoring is performed to detect and respond to issues

Alerting: Alerting is used to notify personnel of issues and incidents

Maintenance: Automated maintenance is performed to ensure the system is running smoothly

Backup and Recovery: Regular backups are performed to ensure data availability

Input 2

The generated response is missing data modeling components for patient demographics, medical history, diagnosis, treatment, and quality standards. To add them, use the following prompt in the same chat.

Add data modeling components for patient demographics, medical history, diagnosis, treatment, and quality standards.

Response 2

Here is an updated data architecture design with additional data modeling components for patient demographics, medical history, diagnosis, treatment, and quality standards:

Data Sources

Patient Data: Electronic Health Records (EHRs), Medical Records, and other patient data

Medical Records: Electronic Medical Records (EMRs), Medical Billing, and Insurance Data

Diagnosis and Treatment Data: Clinical Notes, Lab Results, and Imaging Data

Quality Standards: Quality Metrics, Patient Satisfaction Surveys, and Compliance Data

Data Storage

Database: A relational database management system (RDBMS) such as Oracle, MySQL, or PostgreSQL to store patient data, medical records, diagnosis, treatment, and quality standards

Data Warehouse: A data warehouse to store aggregated and transformed data for reporting and analytics

Data Lake: Scalable object storage or a distributed file system that holds large amounts of unstructured and semi-structured data

Data Modeling Components

Patient Demographics

Patient ID: Unique identifier for each patient

Name: Full name of the patient

Date of Birth: Date of birth of the patient

Address: Patient’s address

Contact Information: Phone number, email, and other contact information

Medical History

Medical Record ID: Unique identifier for each medical record

Patient ID: Identifier of the patient the record belongs to (links to Patient Demographics)

Medical History: List of previous medical conditions, allergies, and medications

Medical History Notes: Notes and comments about the patient’s medical history

Diagnosis

Diagnosis ID: Unique identifier for each diagnosis

Date of Diagnosis: Date of diagnosis

Diagnosis Code: ICD-10 code for the diagnosis (CPT codes describe procedures, so they belong with treatments)

Description: Description of the diagnosis

Treatment: List of treatments and interventions for the diagnosis

Treatment

Treatment ID: Unique identifier for each treatment

Date of Treatment: Date of treatment

Treatment Type: Type of treatment (e.g. surgery, medication, etc.)

Treatment Notes: Notes and comments about the treatment

Quality Standards

Quality Metric ID: Unique identifier for each quality metric

Metric Name: Name of the quality metric

Metric Value: Value of the quality metric

Unit: Unit of measurement for the quality metric

Date: Date of measurement

Quality Standards Data

Quality Standard ID: Unique identifier for each quality standard

Standard Name: Name of the quality standard

Standard Description: Description of the quality standard

Standard Value: Value of the quality standard

Unit: Unit of measurement for the quality standard

Data Relationships

Patient Demographics and Medical History: Patient demographics and medical history are related to each other through the patient ID

Diagnosis and Treatment: Diagnosis and treatment are related to each other through the diagnosis ID and treatment ID

Quality Standards and Diagnosis: Quality standards are linked to diagnoses through the quality standard ID and diagnosis ID

Quality Standards and Treatment: Quality standards are linked to treatments through the quality standard ID and treatment ID
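The data modeling components and relationships above map naturally onto relational tables with foreign keys. The following is a minimal SQLite sketch driven from Python for the core clinical entities; table and column names are illustrative. It gives the medical history table a patient_id column so it joins to demographics through the patient ID, and it links diagnoses and treatments through a separate table, which is one assumed way to implement the diagnosis ID and treatment ID relationship.

```python
import sqlite3

SCHEMA = """
CREATE TABLE patient (
    patient_id    TEXT PRIMARY KEY,
    full_name     TEXT NOT NULL,
    date_of_birth TEXT NOT NULL,
    address       TEXT,
    contact_info  TEXT
);
CREATE TABLE medical_history (
    medical_record_id TEXT PRIMARY KEY,
    patient_id        TEXT NOT NULL REFERENCES patient(patient_id),
    conditions        TEXT,  -- previous conditions, allergies, medications
    notes             TEXT
);
CREATE TABLE diagnosis (
    diagnosis_id      TEXT PRIMARY KEY,
    patient_id        TEXT NOT NULL REFERENCES patient(patient_id),
    date_of_diagnosis TEXT NOT NULL,
    icd10_code        TEXT NOT NULL,
    description       TEXT
);
CREATE TABLE treatment (
    treatment_id      TEXT PRIMARY KEY,
    date_of_treatment TEXT NOT NULL,
    treatment_type    TEXT NOT NULL,
    notes             TEXT
);
-- Many-to-many link: a diagnosis can have several treatments and vice versa.
CREATE TABLE diagnosis_treatment (
    diagnosis_id TEXT NOT NULL REFERENCES diagnosis(diagnosis_id),
    treatment_id TEXT NOT NULL REFERENCES treatment(treatment_id),
    PRIMARY KEY (diagnosis_id, treatment_id)
);
"""

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces foreign keys only when enabled
conn.executescript(SCHEMA)
conn.execute("INSERT INTO patient VALUES ('P001', 'Ada Lovelace', '1815-12-10', NULL, NULL)")
conn.execute("INSERT INTO diagnosis VALUES ('D001', 'P001', '2024-01-05', 'E11.9', 'Type 2 diabetes')")
conn.execute("INSERT INTO treatment VALUES ('T001', '2024-01-06', 'medication', 'Metformin')")
conn.execute("INSERT INTO diagnosis_treatment VALUES ('D001', 'T001')")

try:
    # Rejected by the foreign key: patient P999 does not exist.
    conn.execute("INSERT INTO diagnosis VALUES ('D002', 'P999', '2024-02-01', 'I10', NULL)")
except sqlite3.IntegrityError as exc:
    print("rejected:", exc)
```

Quality standards could be attached the same way, with link tables from quality_standard to diagnosis and treatment.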

Data Integration

APIs: APIs are used to integrate data from various sources, such as EHRs, medical records, and quality standards

Web Services: Web services are used to integrate with other hospital systems, such as radiology and pharmacy

Data Ingestion: Data is ingested from various sources using APIs or file transfers
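For the API-based ingestion described above, many EHR integrations exchange data as HL7 FHIR resources. That choice of standard is an assumption here; the design does not specify it. The sketch maps a FHIR R4 Patient resource (its standard id, name, birthDate, address, and telecom elements) onto the Patient Demographics fields; the output keys are illustrative.

```python
import json

# Example FHIR R4 Patient resource with made-up values.
FHIR_PATIENT = json.loads("""
{
  "resourceType": "Patient",
  "id": "P001",
  "name": [{"use": "official", "family": "Lovelace", "given": ["Ada", "Augusta"]}],
  "birthDate": "1815-12-10",
  "address": [{"line": ["1 Example Street"], "city": "London", "postalCode": "SW1Y"}],
  "telecom": [
    {"system": "phone", "value": "555-123-4567"},
    {"system": "email", "value": "ada@example.org"}
  ]
}
""")


def to_demographics(resource: dict) -> dict:
    """Flatten a FHIR Patient resource into the Patient Demographics fields."""
    if resource.get("resourceType") != "Patient":
        raise ValueError("expected a FHIR Patient resource")
    names = resource.get("name", [])
    # Prefer the 'official' name if present, otherwise the first one.
    name = next((n for n in names if n.get("use") == "official"), names[0] if names else {})
    full_name = " ".join(name.get("given", []) + [name.get("family", "")]).strip()
    addr = (resource.get("address") or [{}])[0]
    parts = addr.get("line", []) + [addr.get("city", ""), addr.get("postalCode", "")]
    address = ", ".join(part for part in parts if part)
    # One value per contact system; a later entry with the same system wins.
    contacts = {
        t["system"]: t["value"]
        for t in resource.get("telecom", [])
        if "system" in t and "value" in t
    }
    return {
        "patient_id": resource.get("id"),
        "name": full_name,
        "date_of_birth": resource.get("birthDate"),
        "address": address,
        "contact_information": contacts,
    }


print(to_demographics(FHIR_PATIENT))
```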

Data Analytics

Reporting: Reports are generated using data analytics tools to provide insights and information to healthcare professionals

Predictive Analytics: Machine learning algorithms are used to predict patient outcomes and identify trends

Quality Metrics: Quality metrics are used to measure the quality of care and identify areas for improvement

Security Considerations

Data Encryption: Data is encrypted to protect sensitive information

Access Control: Role-based access control is used to restrict access to authorized personnel

Authentication: Authentication and authorization mechanisms are used to ensure access control

Data Masking: Data is masked to protect sensitive information

Regular Security Audits: Regular security audits are performed to identify vulnerabilities and ensure compliance with regulatory requirements

Input 3

The response also does not yet cover implementing robust access controls, encryption, and auditing mechanisms to protect patient data from unauthorized access or breaches. You can add this consideration with the following prompt.

The design includes implementing robust access controls, encryption, and auditing mechanisms to protect patient data from unauthorized access or breaches.

Response 3

Here is an updated design that includes robust access controls, encryption, and auditing mechanisms to protect patient data from unauthorized access or breaches:

Access Controls

Role-Based Access Control (RBAC): Each user is assigned a role that determines their access to specific data and systems

User Authentication: Users are authenticated using a combination of username and password, or other authentication methods such as biometric authentication

Authorization: Access to data and systems is granted or denied based on the user’s role and the specific actions they are authorized to perform

Least Privilege Principle: Users are granted the minimum privileges necessary to perform their job functions

Multi-Factor Authentication (MFA): Users are required to provide a second form of verification, such as a code sent to their phone or a fingerprint scan, in addition to their username and password
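The role-based access control, least-privilege, and MFA rules above can be sketched as a deny-by-default permission check. This is a minimal illustration that assumes hypothetical roles, permission names, and an MFA flag carried on the session; a production system would typically delegate this to an identity provider and a policy engine.

```python
from dataclasses import dataclass

# Hypothetical role -> permission mapping; each role gets only what its job needs.
ROLE_PERMISSIONS = {
    "physician": {"read:clinical", "write:clinical", "read:demographics"},
    "nurse": {"read:clinical", "read:demographics"},
    "billing_clerk": {"read:demographics", "read:billing"},
}


@dataclass(frozen=True)
class Session:
    user: str
    role: str
    mfa_verified: bool


def is_authorized(session: Session, permission: str) -> bool:
    """Deny by default: no MFA, an unknown role, or a missing permission all fail."""
    if not session.mfa_verified:
        return False
    return permission in ROLE_PERMISSIONS.get(session.role, set())


nurse = Session(user="jdoe", role="nurse", mfa_verified=True)
print(is_authorized(nurse, "read:clinical"))   # True
print(is_authorized(nurse, "write:clinical"))  # False
```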

Encryption

Data Encryption: All data is encrypted using a secure encryption algorithm, such as AES-256

Key Management: The encryption key is stored securely, using a secure key management system

Data at Rest: Data is encrypted at rest, using a secure storage system

Data in Transit: Data is encrypted in transit, using a secure communication protocol, such as HTTPS

Data at Rest and in Transit: Data is encrypted at rest and in transit, using a secure encryption algorithm

Auditing Mechanisms

Log Management: Logs are collected and stored for auditing purposes

Audit Trail: A record of all changes to data and systems is maintained

Access Logs: Access to data and systems is tracked using access logs

System Logs: System logs are collected and stored for auditing purposes

Regular Audits: Regular audits are performed to ensure compliance with regulatory requirements
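One common way to make the audit trail above tamper-evident is to chain log entries with cryptographic hashes, so that editing a past entry breaks every hash after it. Hash chaining is a technique chosen here for illustration, not something the design mandates, and the entry fields are hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def entry_hash(prev_hash: str, body: dict) -> str:
    """Hash the previous entry's hash together with this entry's canonical JSON."""
    payload = prev_hash + json.dumps(body, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


class AuditLog:
    def __init__(self):
        self.entries = []

    def record(self, user: str, action: str, resource: str) -> None:
        body = {
            "ts": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "action": action,
            "resource": resource,
        }
        prev = self.entries[-1]["hash"] if self.entries else GENESIS
        self.entries.append({**body, "hash": entry_hash(prev, body)})

    def verify(self) -> bool:
        """Recompute every hash; an edited entry (or one removed mid-chain) fails."""
        prev = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if entry_hash(prev, body) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True


log = AuditLog()
log.record("jdoe", "READ", "patient/P001")
log.record("asmith", "UPDATE", "diagnosis/D001")
print(log.verify())                 # True
log.entries[0]["user"] = "mallory"  # simulate tampering with a past entry
print(log.verify())                 # False
```

Truncating the newest entries is not detected by the chain alone, so such logs are usually also shipped to write-once storage or anchored externally.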

Data Access Controls

Data Classification: Data is classified into different categories, such as public, private, and confidential

Data Access Controls: Access to sensitive data is restricted to authorized personnel

Data Encryption: Data is encrypted when in transit and at rest

Access Controls: Access to data is restricted to authorized personnel, using role-based access control and multi-factor authentication

Data Deletion: Data is deleted when no longer needed, using a secure deletion process

Data Storage

Data Warehouse: A data warehouse is used to store aggregated and transformed data for reporting and analytics

Data Lake: Scalable object storage or a distributed file system is used to store large amounts of unstructured and semi-structured data

Data Encryption: Data is encrypted when in transit and at rest

Data Access: Data is accessed using a secure access control system

Data Security

Vulnerability Management: Vulnerabilities are identified and patched using a vulnerability management system

Patch Management: Patches are applied to systems and software to ensure security

Security Monitoring: Security is monitored using a security monitoring system

Incident Response: An incident response plan is in place to respond to security incidents

Compliance: Compliance with regulatory requirements is ensured through regular audits and risk assessments

Data Protection

Data Backup: Data is backed up regularly using a secure backup system

Data Recovery: Data is recovered in the event of a disaster or data loss

Data Retention: Data is retained for a specified period of time, using a data retention policy

Data Disposal: Data is disposed of securely, using a secure disposal process

Data Destruction: Data is destroyed using a secure destruction process

Security Governance

Security Policy: A security policy is developed and enforced

Security Training: Security training is provided to personnel

Security Awareness: Security awareness is promoted among personnel

Incident Response: An incident response plan is in place to respond to security incidents

Compliance: Compliance with regulatory requirements is ensured through regular audits and risk assessments

CRM

A retail company is developing a customer relationship management (CRM) system to enhance customer engagement, personalize marketing efforts, and improve sales performance. The following data architecture design considerations have to be planned for.

Integration of customer data from multiple touchpoints such as online transactions, in-store purchases, website interactions, and social media platforms.

Implementation of a centralized data warehouse or customer data platform (CDP) to consolidate customer information and facilitate real-time access.

Creation of a unified customer profile with attributes such as demographics, purchase history, browsing behavior, preferences, and interactions.

Implementation of measures to protect customer data integrity and confidentiality, including encryption, role-based access controls, and data masking techniques.

Integration of analytics tools for customer segmentation, predictive modeling, campaign performance analysis, and personalized recommendations.

Solution

Start with a basic prompt, as before, and ask the Generative AI model to create a proposed data architecture design for a retail company’s CRM.

Input 1

Create a detailed data architecture design for a retail company's customer relationship management system.

Response 1

Here is a detailed data architecture design for a retail company’s customer relationship management (CRM) system:

System Requirements

The CRM system will be used to manage customer interactions, sales, and customer data across multiple channels (e.g. online, phone, in-store).

The system will be scalable, secure, and reliable.

The system will support multiple customer segments (e.g. individual, business, loyalty program members).

The system will integrate with various third-party services (e.g. payment gateways, marketing automation tools).

Database Design

The CRM system will be built using a relational database management system (RDBMS) such as MySQL or PostgreSQL. The database will consist of the following tables:

Customers

id (primary key): unique identifier for each customer

name: customer name

email: customer email address

phone: customer phone number

address: customer address

city: customer city

state: customer state

zip: customer zip code

country: customer country

created_at: timestamp for when the customer was created

updated_at: timestamp for when the customer was last updated

Orders

id (primary key): unique identifier for each order

customer_id (foreign key): references the id in the Customers table

order_date: timestamp for when the order was placed

total: total cost of the order

status: order status (e.g. “pending”, “shipped”, “delivered”, “cancelled”)

created_at: timestamp for when the order was created

updated_at: timestamp for when the order was last updated

Products

id (primary key): unique identifier for each product

name: product name

description: product description

price: product price

quantity: product quantity

created_at: timestamp for when the product was created

updated_at: timestamp for when the product was last updated

Order Items

id (primary key): unique identifier for each order item

order_id (foreign key): references the id in the Orders table

product_id (foreign key): references the id in the Products table

quantity: quantity of the product in the order

created_at: timestamp for when the order item was created

updated_at: timestamp for when the order item was last updated

Marketing Campaigns

id (primary key): unique identifier for each marketing campaign

name: marketing campaign name

description: marketing campaign description

created_at: timestamp for when the campaign was created

updated_at: timestamp for when the campaign was last updated

Loyalty Program

id (primary key): unique identifier for each loyalty program

name: loyalty program name

description: loyalty program description

created_at: timestamp for when the loyalty program was created

updated_at: timestamp for when the loyalty program was last updated

Customer Segments

id (primary key): unique identifier for each customer segment

name: customer segment name

description: customer segment description

created_at: timestamp for when the segment was created

updated_at: timestamp for when the segment was last updated
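The Customers, Products, Orders, and Order Items tables above translate into DDL along these lines, and the last few statements walk through the onboarding and order-creation flow. This is a minimal sketch: SQLite stands in for MySQL or PostgreSQL so the example runs without a server, and the column types, the UNIQUE email, and the CHECK constraints are assumptions rather than part of the generated design.

```python
import sqlite3

DDL = """
CREATE TABLE customers (
    id INTEGER PRIMARY KEY, name TEXT NOT NULL, email TEXT UNIQUE,
    phone TEXT, address TEXT, city TEXT, state TEXT, zip TEXT, country TEXT,
    created_at TEXT DEFAULT CURRENT_TIMESTAMP,
    updated_at TEXT DEFAULT CURRENT_TIMESTAMP
);
CREATE TABLE products (
    id INTEGER PRIMARY KEY, name TEXT NOT NULL, description TEXT,
    price NUMERIC NOT NULL CHECK (price >= 0),
    quantity INTEGER NOT NULL DEFAULT 0,
    created_at TEXT DEFAULT CURRENT_TIMESTAMP,
    updated_at TEXT DEFAULT CURRENT_TIMESTAMP
);
CREATE TABLE orders (
    id INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL REFERENCES customers(id),
    order_date TEXT NOT NULL,
    total NUMERIC NOT NULL,
    status TEXT NOT NULL
        CHECK (status IN ('pending', 'shipped', 'delivered', 'cancelled')),
    created_at TEXT DEFAULT CURRENT_TIMESTAMP,
    updated_at TEXT DEFAULT CURRENT_TIMESTAMP
);
CREATE TABLE order_items (
    id INTEGER PRIMARY KEY,
    order_id INTEGER NOT NULL REFERENCES orders(id),
    product_id INTEGER NOT NULL REFERENCES products(id),
    quantity INTEGER NOT NULL CHECK (quantity > 0),
    created_at TEXT DEFAULT CURRENT_TIMESTAMP,
    updated_at TEXT DEFAULT CURRENT_TIMESTAMP
);
"""

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # enforce the foreign keys in SQLite
conn.executescript(DDL)

# Customer onboarding, then an order with one line item (2 x 12.50 = 25.00).
cur = conn.execute(
    "INSERT INTO customers (name, email) VALUES (?, ?)", ("Jane Doe", "jane@example.com")
)
customer_id = cur.lastrowid
cur = conn.execute("INSERT INTO products (name, price, quantity) VALUES ('Mug', 12.5, 100)")
product_id = cur.lastrowid
cur = conn.execute(
    "INSERT INTO orders (customer_id, order_date, total, status) "
    "VALUES (?, date('now'), 25.0, 'pending')",
    (customer_id,),
)
conn.execute(
    "INSERT INTO order_items (order_id, product_id, quantity) VALUES (?, ?, 2)",
    (cur.lastrowid, product_id),
)
conn.commit()
```

Grouping the order and its items in one transaction, as here, keeps an order from being saved without its line items.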

Data Flow

Customer Onboarding: When a new customer signs up, their information is inserted into the Customers table.

Order Creation: When a customer places an order, the order is inserted into the Orders table.

Order Item Creation: When an order item is added to an order, the order item is inserted into the Order Items table.

Marketing Campaign Creation: When a marketing campaign is created, the campaign is inserted into the Marketing Campaigns table.

Loyalty Program Creation: When a loyalty program is created, the loyalty program is inserted into the Loyalty Program table.

Customer Segment Creation: When a customer segment is created, the segment is inserted into the Customer Segments table.

Security and Authentication

User Authentication: Users are authenticated using a username and password.

Authorization: Users are granted access to specific data based on their role (e.g. customer, sales associate, manager).

Data Encryption: Data is encrypted in transit using SSL/TLS and at rest using storage-level encryption.
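Because the design authenticates users with a username and password, the passwords themselves should never be stored in plain text. A common approach is a salted, deliberately slow key-derivation function. The sketch below uses PBKDF2-HMAC-SHA256 from Python's standard library; the iteration count and the "salt$hash" storage format are assumptions to adapt to current guidance and your hardware.

```python
import hashlib
import hmac
import secrets

ITERATIONS = 600_000  # assumed work factor; tune to current guidance and hardware


def hash_password(password: str) -> str:
    """Return 'salt$hash' (both hex) using a fresh random salt per password."""
    salt = secrets.token_bytes(16)
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return salt.hex() + "$" + digest.hex()


def verify_password(password: str, stored: str) -> bool:
    """Recompute with the stored salt and compare in constant time."""
    salt_hex, digest_hex = stored.split("$", 1)
    candidate = hashlib.pbkdf2_hmac(
        "sha256", password.encode("utf-8"), bytes.fromhex(salt_hex), ITERATIONS
    )
    return hmac.compare_digest(candidate, bytes.fromhex(digest_hex))


stored = hash_password("correct horse battery staple")
print(verify_password("correct horse battery staple", stored))  # True
print(verify_password("wrong guess", stored))                   # False
```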

Input 2

This response is missing the consideration of creating a unified customer profile with attributes such as demographics, purchase history, browsing behavior, preferences, and interactions. You can include that using the following prompt.

In addition to the response, include steps for creating a unified customer profile with attributes such as demographics, purchase history, browsing behavior, preferences, and interactions.

Response 2

Here is an updated detailed data architecture design for a retail company’s customer relationship management (CRM) system, including steps for creating a unified customer profile with attributes such as demographics, purchase history, browsing behavior, preferences, and interactions:

System Requirements

The CRM system will be used to manage customer interactions, sales, and customer data across multiple channels (e.g. online, phone, in-store).

The system will be scalable, secure, and reliable.

The system will support multiple customer segments (e.g. individual, business, loyalty program members).

The system will integrate with various third-party services (e.g. payment gateways, marketing automation tools).

Database Design

The CRM system will be built using a relational database management system (RDBMS) such as MySQL or PostgreSQL. The database will consist of the following tables:

Customers

id (primary key): unique identifier for each customer

name: customer name

email: customer email address

phone: customer phone number

address: customer address

city: customer city

state: customer state

zip: customer zip code

country: customer country

created_at: timestamp for when the customer was created

updated_at: timestamp for when the customer was last updated

Orders

id (primary key): unique identifier for each order

customer_id (foreign key): references the id in the Customers table

order_date: timestamp for when the order was placed

total: total cost of the order

status: order status (e.g. “pending”, “shipped”, “delivered”, “cancelled”)

created_at: timestamp for when the order was created

updated_at: timestamp for when the order was last updated

Products

id (primary key): unique identifier for each product

name: product name

description: product description

price: product price

quantity: product quantity

created_at: timestamp for when the product was created

updated_at: timestamp for when the product was last updated

Order Items

id (primary key): unique identifier for each order item

order_id (foreign key): references the id in the Orders table

product_id (foreign key): references the id in the Products table

quantity: quantity of the product in the order

created_at: timestamp for when the order item was created

updated_at: timestamp for when the order item was last updated

Marketing Campaigns

id (primary key): unique identifier for each marketing campaign

name: marketing campaign name

description: marketing campaign description

created_at: timestamp for when the campaign was created

updated_at: timestamp for when the campaign was last updated

Loyalty Program

id (primary key): unique identifier for each loyalty program

name: loyalty program name

description: loyalty program description

created_at: timestamp for when the loyalty program was created

updated_at: timestamp for when the loyalty program was last updated

Customer Segments

id (primary key): unique identifier for each customer segment

name: customer segment name

description: customer segment description

created_at: timestamp for when the segment was created

updated_at: timestamp for when the segment was last updated

Customer Profiles

id (primary key): unique identifier for each customer profile

customer_id (foreign key): references the id in the Customers table

demographics: customer demographics (e.g. age, location, income)

purchase_history: customer purchase history (e.g. order history)

browsing_behavior: customer browsing behavior (e.g. search history, browsing patterns)

preferences: customer preferences (e.g. favorite products, favorite brands)

interactions: customer interactions (e.g. emails, phone calls, in-store visits)

created_at: timestamp for when the customer profile was created

updated_at: timestamp for when the customer profile was last updated
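Building a Customer Profiles record means merging events from every touchpoint under one customer identity. The sketch below assumes records are matched on a normalized email address (real identity resolution usually combines several keys and fuzzy matching) and uses hypothetical event shapes for CRM rows, orders, web sessions, and support tickets.

```python
from dataclasses import dataclass, field


@dataclass
class CustomerProfile:
    email: str
    demographics: dict = field(default_factory=dict)
    purchase_history: list = field(default_factory=list)
    browsing_behavior: list = field(default_factory=list)
    preferences: set = field(default_factory=set)
    interactions: list = field(default_factory=list)


def normalize_email(email: str) -> str:
    """Assumed match key: trimmed, lower-cased email address."""
    return email.strip().lower()


def build_profiles(crm_rows, orders, web_events, support_tickets):
    """Merge touchpoint records into one profile per normalized email."""
    profiles = {}

    def get(email):
        key = normalize_email(email)
        if key not in profiles:
            profiles[key] = CustomerProfile(email=key)
        return profiles[key]

    for row in crm_rows:  # demographics from the Customers table
        get(row["email"]).demographics.update({k: v for k, v in row.items() if k != "email"})
    for order in orders:  # online and in-store transactions
        profile = get(order["email"])
        profile.purchase_history.append(order["order_id"])
        profile.preferences.add(order["category"])  # naive preference signal
    for event in web_events:  # website interactions
        get(event["email"]).browsing_behavior.append(event["page"])
    for ticket in support_tickets:  # service interactions
        get(ticket["email"]).interactions.append(("support", ticket["subject"]))
    return profiles


profiles = build_profiles(
    crm_rows=[{"email": "Jane@Example.com", "city": "Austin"}],
    orders=[{"email": "jane@example.com ", "order_id": 101, "category": "kitchen"}],
    web_events=[{"email": "JANE@example.com", "page": "/mugs"}],
    support_tickets=[{"email": "jane@example.com", "subject": "Late delivery"}],
)
print(profiles["jane@example.com"])
```

All four differently formatted emails resolve to a single profile, which is the point of the normalization step.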

Data Flow

Customer Onboarding: When a new customer signs up, their information is inserted into the Customers table.

Order Creation: When a customer places an order, the order is inserted into the Orders table.

Finance

A financial institution is developing a trading and risk management system to optimize investment strategies, monitor market risks, and ensure regulatory compliance. The following considerations for the data architecture design have been decided.

Integration of market data feeds, trading platforms, transaction systems, and risk databases to capture real-time market events and trading activities.

Deployment of a high-performance data storage infrastructure (for example, in-memory databases and distributed file systems) to handle large volumes of transactional and historical data.

Designing complex data models to represent financial instruments, trading positions, market data, risk factors, and regulatory metrics (for example, VaR and stress testing).

Implementation of stringent data governance policies to maintain data quality, accuracy, and consistency across trading systems and risk models.

Integration of advanced analytics tools for portfolio optimization, risk analysis, scenario modeling, and regulatory reporting (for example, Basel III, Dodd-Frank).
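The VaR metric named in these considerations can be computed in several ways. The sketch below uses historical simulation, one common method chosen here for illustration: sort past portfolio returns and read off the loss at the chosen percentile. The return series is made up, and the percentile convention (a plain order statistic without interpolation) is an assumption; risk teams often use other conventions.

```python
import math


def historical_var(returns, confidence=0.95, portfolio_value=1.0):
    """One-period VaR by historical simulation, returned as a positive loss.

    Takes the empirical (1 - confidence) quantile of past returns as the
    order statistic at index floor((1 - confidence) * n), with no interpolation.
    """
    if not returns:
        raise ValueError("need at least one return observation")
    if not 0 < confidence < 1:
        raise ValueError("confidence must be between 0 and 1")
    ordered = sorted(returns)  # worst (most negative) returns first
    # round() guards against float noise, e.g. (1 - 0.9) * 10 lands just below 1.
    index = math.floor(round((1 - confidence) * len(ordered), 9))
    index = min(index, len(ordered) - 1)
    return max(0.0, -ordered[index] * portfolio_value)


# Made-up daily returns for a single portfolio (20 observations).
daily_returns = [
    0.012, -0.008, 0.005, -0.021, 0.003, 0.007, -0.015, 0.010, -0.002, 0.004,
    -0.030, 0.006, 0.001, -0.011, 0.009, -0.004, 0.002, -0.018, 0.008, 0.000,
]
var_95 = historical_var(daily_returns, confidence=0.95, portfolio_value=1_000_000)
print(f"1-day 95% VaR: {var_95:,.0f}")
```

A stress test reuses the same machinery but replaces the historical series with returns from a chosen scenario.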

Use the Generative AI system with appropriate prompts to design a data architecture for the specifications provided.

Solution

Input 1

Create a detailed data architecture design for a trading and risk management system for a financial institution.

Response 1

Here is a detailed data architecture design for a trading and risk management system for a financial institution:

System Overview

The trading and risk management system is a cloud-based, multi-tenant, scalable, and secure system designed to manage the trading and risk management activities of a financial institution. The system will provide real-time data and analytics to support informed decision-making across the organization.

System Components

Data Ingestion Layer

Data Sources: The system will ingest data from various sources, including:

Trading platforms (e.g., Bloomberg, Thomson Reuters)

Market data feeds (e.g., Quandl, Alpha Vantage)

Internal data (e.g., customer data, transactional data)

Data Processing Layer: The system will process and transform the ingested data into a standardized format for analysis and reporting.
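The processing layer's job of converting ingested data into a standardized format can be sketched as per-source adapters that all emit one common quote record. The two payload shapes below are hypothetical and do not reproduce any real vendor's message format; the point is the adapter pattern, not the field names.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from decimal import Decimal


@dataclass(frozen=True)
class Quote:
    """Standardized quote record consumed by storage and analytics."""
    symbol: str
    price: Decimal
    volume: int
    ts: datetime  # always timezone-aware UTC
    source: str


def from_vendor_a(msg: dict) -> Quote:
    # Hypothetical vendor A: epoch milliseconds, price and quantity as strings.
    return Quote(
        symbol=msg["ticker"].upper(),
        price=Decimal(msg["px"]),
        volume=int(msg["qty"]),
        ts=datetime.fromtimestamp(msg["epoch_ms"] / 1000, tz=timezone.utc),
        source="vendor_a",
    )


def from_vendor_b(msg: dict) -> Quote:
    # Hypothetical vendor B: ISO-8601 timestamp with offset, price as float.
    return Quote(
        symbol=msg["symbol"].upper(),
        price=Decimal(str(msg["last"])),
        volume=int(msg["volume"]),
        ts=datetime.fromisoformat(msg["time"]).astimezone(timezone.utc),
        source="vendor_b",
    )


ADAPTERS = {"vendor_a": from_vendor_a, "vendor_b": from_vendor_b}


def normalize(source: str, msg: dict) -> Quote:
    """Route a raw message to its adapter; fail loudly on unknown shapes."""
    try:
        return ADAPTERS[source](msg)
    except KeyError as exc:
        raise ValueError(f"unknown source or missing field: {exc}") from exc


a = normalize("vendor_a", {"ticker": "ibm", "px": "187.25", "qty": "300", "epoch_ms": 1709303400000})
b = normalize("vendor_b", {"symbol": "IBM", "last": 187.3, "volume": 120,
                           "time": "2024-03-01T09:30:00-05:00"})
print(a.ts == b.ts, a.price, b.price)
```

Using Decimal for prices avoids binary floating-point rounding in downstream position and P&L arithmetic.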

Data Storage Layer

Relational Database: A relational database (e.g., PostgreSQL, MySQL) will store historical trading data, risk metrics, and other relevant information.

NoSQL Database: A NoSQL database (e.g., MongoDB, Cassandra) will store real-time data and analytics for high-volume, high-velocity trading activities.

Data Analytics Layer

Data Warehousing: A data warehousing platform (e.g., Amazon Redshift, Google BigQuery) will store and manage the processed data for analysis and reporting.

Data Mining and Machine Learning: A data mining and machine learning platform (e.g., Apache Spark, TensorFlow) will enable predictive analytics and risk modeling.

Business Intelligence Layer

Reporting and Dashboards: A reporting and dashboard platform (e.g., Tableau, Power BI) will provide real-time and historical data visualizations for stakeholders.

Alerting and Notification: A notification system (e.g., PagerDuty, Slack) will alert stakeholders to potential trading and risk issues.

Security and Access Control

Authentication and Authorization: A secure authentication and authorization system (e.g., OAuth, SAML) will ensure that only authorized users can access the system.

Data Encryption: Data will be encrypted in transit using TLS and at rest using industry-standard algorithms such as AES.

Scalability and High Availability

Cloud Infrastructure: The system will be deployed on a cloud infrastructure (e.g., AWS, Azure, Google Cloud) to ensure scalability and high availability.

Load Balancing and Auto Scaling: Load balancing and auto-scaling will ensure that the system can handle increased traffic and demand.

System Architecture Diagram

Here is a high-level architecture diagram of the system:

```
+--------------------------------------+
| Data Ingestion                       |
| (Bloomberg, Thomson Reuters,         |
|  Quandl, Alpha Vantage)              |
+--------------------------------------+
| Data Processing                      |
| (Relational DB, NoSQL DB)            |
+--------------------------------------+
| Data Analytics                       |
| (Data Warehousing, Data Mining,      |
|  Machine Learning)                   |
+--------------------------------------+
| Business Intelligence                |
| (Reporting, Dashboards,              |
|  Alerting, Notification)             |
+--------------------------------------+
| Security and Access Control          |
| (Authentication, Authorization,      |
|  Encryption)                         |
+--------------------------------------+
| Cloud Infrastructure                 |
| (AWS, Azure, Google Cloud)           |
+--------------------------------------+
```

System Components and Interfaces

API Gateway: A RESTful API gateway will provide a single entry point for clients to interact with the system.

WebSockets: WebSockets will enable real-time communication between clients and the system for trading and risk management activities.

WebSockets Server: A WebSocket server will handle the communication between clients and the system.

WebSockets Client: A WebSocket client will be used by traders and risk managers to interact with the system.

WebSockets Client Library: A WebSocket client library will be used to interact with the system.

System Components and Interfaces (continued)

Trading Platform: The trading platform will provide real-time data feeds and trading activity data to the system.

Risk Management Platform: The risk management platform will provide risk metrics and analytics data to the system.

Data Warehouse: The data warehouse will provide historical data and analytics for analysis and reporting.

Data Mining and Machine Learning: The data mining and machine learning platform will enable predictive analytics and risk modeling.

Reporting and Dashboards: The reporting and dashboard platform will provide real-time and historical data visualizations for stakeholders.

System Security and Access Control

Authentication: OAuth and SAML will be used for authentication and authorization.

Input 2

Integrate market data feeds, trading platforms, and risk databases to capture real-time market events and trading activities.

Response 2

Here is an updated architecture diagram with the integration of market data feeds, trading platforms, and risk databases:

```
+--------------------------------------+
| Market Data                          |
+--------------------------------------+
| (Quandl, Alpha Vantage,              |
|  Bloomberg, Thomson Reuters)         |
+--------------------------------------+
| Feed Sources                         |
| (API, Webhooks)                      |
+--------------------------------------+
| Data Ingestion                       |
| (Relational DB, NoSQL DB)            |
+--------------------------------------+
| Data Processing                      |
| (Data Warehousing, Data Mining,      |
|  Machine Learning)                   |
+--------------------------------------+
| Data Analytics                       |
| (Reporting, Dashboards,              |
|  Alerting, Notification)             |
+--------------------------------------+
| Trading Platform                     |
| (API, Webhooks)                      |
+--------------------------------------+
| Trading Activity                     |
| (Real-time data)                     |
+--------------------------------------+
| Risk Database                        |
| (Relational DB, NoSQL DB)            |
+--------------------------------------+
| Risk Metrics                         |
| (Historical data)                    |
+--------------------------------------+
| Risk Modeling                        |
| (Machine Learning)                   |
+--------------------------------------+
| Security and Access Control          |
| (Authentication, Authorization,      |
|  Encryption)                         |
+--------------------------------------+
| Cloud Infrastructure                 |
| (AWS, Azure, Google Cloud)           |
+--------------------------------------+
```

Market Data Feeds

Quandl: Quandl (now Nasdaq Data Link) provides financial and economic datasets, including stock prices, trading volumes, and other market data.

Alpha Vantage: Alpha Vantage provides real-time and historical stock market data, including prices, volumes, and other market data.

Bloomberg: Bloomberg provides real-time and historical financial data, including stock prices, trading volumes, and other market data.

Thomson Reuters: Thomson Reuters (whose financial data business is now part of LSEG) provides real-time and historical financial data, including stock prices, trading volumes, and other market data.

Trading Platforms

API: The trading platform provides a RESTful API for trading activity data, including real-time and historical data.

Webhooks: The trading platform provides webhooks for real-time data updates, including trading activity data.

Risk Databases

Relational Database: A relational database (e.g., PostgreSQL, MySQL) stores historical risk metrics and risk models.

NoSQL Database: A NoSQL database (e.g., MongoDB, Cassandra) stores real-time risk metrics and risk models.
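The relational store for historical risk metrics described above could be sketched with Python's built-in `sqlite3` module standing in for PostgreSQL or MySQL. This is a minimal sketch under stated assumptions: the `risk_metric` table, its columns, and the helper functions are hypothetical names chosen for illustration, not part of the proposed design.

```python
import sqlite3

# Hypothetical schema: table and column names are illustrative only.
SCHEMA = """
CREATE TABLE IF NOT EXISTS risk_metric (
    metric_id   INTEGER PRIMARY KEY,
    portfolio   TEXT NOT NULL,
    metric_name TEXT NOT NULL,   -- e.g. 'VaR_95', 'gross_leverage'
    as_of       TEXT NOT NULL,   -- ISO-8601 timestamp, sorts chronologically as text
    value       REAL NOT NULL,
    UNIQUE (portfolio, metric_name, as_of)
);
"""


def open_risk_store(path: str = ":memory:") -> sqlite3.Connection:
    """Open a connection and make sure the historical risk table exists."""
    conn = sqlite3.connect(path)
    conn.executescript(SCHEMA)
    return conn


def record_metric(conn, portfolio, metric_name, as_of, value):
    """Insert one historical risk observation."""
    conn.execute(
        "INSERT INTO risk_metric (portfolio, metric_name, as_of, value) "
        "VALUES (?, ?, ?, ?)",
        (portfolio, metric_name, as_of, value),
    )
    conn.commit()


def metric_history(conn, portfolio, metric_name):
    """Return (as_of, value) pairs for one metric, oldest first."""
    cur = conn.execute(
        "SELECT as_of, value FROM risk_metric "
        "WHERE portfolio = ? AND metric_name = ? ORDER BY as_of",
        (portfolio, metric_name),
    )
    return cur.fetchall()
```

The UNIQUE constraint prevents the same observation from being loaded twice, which matters when ingestion jobs are retried.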

Data Processing

Data Warehousing: A data warehousing platform (e.g., Amazon Redshift, Google BigQuery) stores and manages the processed data for analysis and reporting.

Data Mining and Machine Learning: A data mining and machine learning platform (e.g., Apache Spark, TensorFlow) enables predictive analytics and risk modeling.

Data Analytics

Reporting and Dashboards: A reporting and dashboard platform (e.g., Tableau, Power BI) provides real-time and historical data visualizations for stakeholders.

Alerting and Notification: A notification system (e.g., PagerDuty, Slack) alerts stakeholders to potential trading and risk issues.
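A minimal sketch of the rule evaluation that could sit in front of a notification system such as PagerDuty or Slack. The `AlertRule` type, the metric names, and the thresholds are hypothetical; delivering the messages through those services is left out because it depends on each vendor's API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AlertRule:
    metric: str       # hypothetical metric name, e.g. "gross_leverage"
    threshold: float  # alert when the metric is strictly above this value
    severity: str     # e.g. "warning" or "critical"


def evaluate_rules(metrics: dict, rules: list) -> list:
    """Return one alert message per rule whose metric exceeds its threshold."""
    alerts = []
    for rule in rules:
        value = metrics.get(rule.metric)
        # Missing metrics are skipped rather than treated as breaches.
        if value is not None and value > rule.threshold:
            alerts.append(
                f"[{rule.severity}] {rule.metric}={value} exceeds {rule.threshold}"
            )
    return alerts
```

Each returned message would then be forwarded to the chosen notification channel.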

Security and Access Control

Authentication: OAuth 2.0 and SAML will be used for authentication and authorization, with SAML handling single sign-on and OAuth 2.0 handling delegated authorization for APIs.

Access Control: Access control will be implemented using role-based access control (RBAC) and attribute-based access control (ABAC) to ensure that only authorized users can access the system.
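The combination of RBAC and ABAC described above can be illustrated with a small policy check. This sketch assumes a hypothetical role-to-permission table and a single attribute rule (the user's trading desk must match the desk that owns the resource); a production system might delegate such decisions to a dedicated policy engine instead.

```python
from dataclasses import dataclass

# Hypothetical role-to-permission mapping (the RBAC part).
ROLE_PERMISSIONS = {
    "trader": {"read:positions", "write:orders"},
    "risk_manager": {"read:positions", "read:risk_metrics"},
    "auditor": {"read:audit_log"},
}


@dataclass
class User:
    name: str
    roles: set
    desk: str  # attribute used by the ABAC rule


@dataclass
class Resource:
    kind: str  # e.g. "positions"
    desk: str  # owning trading desk


def is_allowed(user: User, action: str, resource: Resource) -> bool:
    """Grant access only if a role permits the action AND the desk attribute matches."""
    permission = f"{action}:{resource.kind}"
    has_role_permission = any(
        permission in ROLE_PERMISSIONS.get(role, set()) for role in user.roles
    )
    same_desk = user.desk == resource.desk
    return has_role_permission and same_desk
```

RBAC answers "may this kind of user do this?", while the ABAC rule narrows it to "on this particular data?".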

Cloud Infrastructure

AWS: AWS will be used as the primary cloud infrastructure for the system.

Azure: Azure will be used as a secondary cloud infrastructure for scaling and managing the system.

Google Cloud: Google Cloud will be used as a third cloud infrastructure for scaling and managing the system.

System Components and Interfaces

API Gateway: A RESTful API gateway will provide a single entry point for clients to interact with the system.

WebSockets: WebSockets will enable real-time communication between clients and the system for trading and risk management activities.

WebSockets Client: A WebSocket client will be used by traders and risk managers to interact with the system.

WebSockets Client Library: A WebSocket client library will be used to interact with the system.

Data Ingestion Layer: The data ingestion layer will ingest market data feeds, trading platforms, and risk databases into the system.

Data Processing Layer: The data processing layer will process the ingested data and transform it into a standardized format for analysis and reporting.

Data Analytics Layer: The data analytics layer will analyze the processed data and provide insights and recommendations to stakeholders.

Business Intelligence Layer: The business intelligence layer will provide real-time and historical data visualizations for stakeholders.
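The data processing layer's job of transforming ingested data into a standardized format can be sketched as a set of per-source normalizers that all emit one common record type. Both feed formats below (field names such as `px`, `ticker`, and `epoch_ms`) are invented for illustration and do not describe any real vendor's messages.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class Quote:
    symbol: str
    price: float
    volume: int
    ts: datetime  # always timezone-aware UTC


def from_feed_a(msg: dict) -> Quote:
    # Hypothetical feed A: {"sym": "AAPL", "px": "189.5", "vol": 100, "epoch_ms": 1700000000000}
    return Quote(
        symbol=msg["sym"].upper(),
        price=float(msg["px"]),
        volume=int(msg["vol"]),
        ts=datetime.fromtimestamp(msg["epoch_ms"] / 1000, tz=timezone.utc),
    )


def from_feed_b(msg: dict) -> Quote:
    # Hypothetical feed B: ISO-8601 time string that includes a UTC offset.
    return Quote(
        symbol=msg["ticker"].upper(),
        price=float(msg["last"]),
        volume=int(msg["size"]),
        ts=datetime.fromisoformat(msg["time"]).astimezone(timezone.utc),
    )


NORMALIZERS = {"feed_a": from_feed_a, "feed_b": from_feed_b}


def normalize(source: str, msg: dict) -> Quote:
    """Dispatch a raw message to the normalizer registered for its source."""
    return NORMALIZERS[source](msg)
```

Keeping one normalizer per source means a new feed can be added without touching downstream analytics code.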

Input 3

Include complex data models to represent financial instruments, trading positions, market data, risk factors, and regulatory metrics

Response 3

Here is an updated architecture diagram with complex data models to represent financial instruments, trading positions, market data, risk factors, and regulatory metrics:

    +------------------------------+
    | Financial Instruments        |
    | (Stocks, Bonds, Options,     |
    |  Futures)                    |
    +------------------------------+
    | Instrument Types             |
    | (Equity, Debt, Commodity)    |
    +------------------------------+
    | Instrument Attributes        |
    | (Price, Volume, Open, Close, |
    |  High, Low)                  |
    +------------------------------+
    | Trading Positions            |
    | (Buy/Sell, Long/Short)       |
    +------------------------------+
    | Position Attributes          |
    | (Quantity, Price, Time,      |
    |  Expiration)                 |
    +------------------------------+
    | Market Data                  |
    | (Open, High, Low, Close,     |
    |  Volume)                     |
    +------------------------------+
    | Market Data Sources          |
    | (API, Webhooks)              |
    +------------------------------+
    | Market Data Models           |
    | (Historical, Real-time)      |
    +------------------------------+
    | Risk Factors                 |
    | (Volatility, Leverage,       |
    |  Liquidity)                  |
    +------------------------------+
    | Risk Factor Attributes       |
    | (Value, Risk, Sensitivity)   |
    +------------------------------+
    | Regulatory Metrics           |
    | (Compliance, Margin,         |
    |  Liquidity)                  |
    +------------------------------+
    | Regulatory Models            |
    | (Regulatory Frameworks,      |
    |  Compliance)                 |
    +------------------------------+
    | Compliance Rules             |
    | (Regulatory Requirements)    |
    +------------------------------+
    | Compliance Enforcement       |
    | (Audit, Testing)             |
    +------------------------------+
    | Data Ingestion               |
    | (API, Webhooks)              |
    +------------------------------+
    | Data Processing              |
    | (Data Warehousing,           |
    |  Data Mining,                |
    |  Machine Learning)           |
    +------------------------------+
    | Data Analytics               |
    | (Reporting, Dashboards)      |
    +------------------------------+
    | Business Intelligence        |
    | (Reporting, Dashboards)      |
    +------------------------------+
    | Security and Access Control  |
    | (Authentication,             |
    |  Authorization, Encryption)  |
    +------------------------------+
    | Cloud Infrastructure         |
    | (AWS, Azure, Google Cloud)   |
    +------------------------------+

Financial Instruments

Stocks: Represent financial instruments with attributes such as:

Stock symbol

Name

Exchange

Industry

Sector

Market capitalization

Trading volume

Price

Open, High, Low, Close

Bonds: Represent financial instruments with attributes such as:

Bond symbol

Name

Industry

Sector

Coupon rate

Maturity date

Trading volume

Price

Open, High, Low, Close

Options: Represent financial instruments with attributes such as:

Option symbol

Name

Industry

Sector

Underlying instrument

Strike price

Option type (call or put)

Trading volume

Price

Expiration date

Futures: Represent financial instruments with attributes such as:

Futures symbol

Name

Industry

Sector

Underlying asset

Contract size

Expiration date

Trading volume

Price

Open, High, Low, Close
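One way to represent the instrument types above is a small class hierarchy of Python dataclasses. This is an illustrative sketch: the field names, and instrument-specific fields such as `strike`, `coupon_rate`, and `contract_size`, are assumptions rather than part of the generated response. The `intrinsic_value` helper shows how type-specific fields feed a simple calculation.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class Instrument:
    symbol: str
    name: str
    sector: Optional[str] = None


@dataclass
class Stock(Instrument):
    exchange: str = ""
    market_cap: Optional[float] = None


@dataclass
class Bond(Instrument):
    coupon_rate: float = 0.0  # annual coupon as a fraction, e.g. 0.05
    maturity: Optional[date] = None


@dataclass
class Option(Instrument):
    underlying: str = ""
    strike: float = 0.0
    expiration: Optional[date] = None
    is_call: bool = True


@dataclass
class Future(Instrument):
    underlying: str = ""
    contract_size: float = 1.0
    expiration: Optional[date] = None


def intrinsic_value(option: Option, spot: float) -> float:
    """Per-unit intrinsic value: max(spot - strike, 0) for calls, max(strike - spot, 0) for puts."""
    if option.is_call:
        return max(spot - option.strike, 0.0)
    return max(option.strike - spot, 0.0)
```

Subclass fields all carry defaults because the base class already ends with a defaulted field, which `dataclasses` requires.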

Trading Positions

Buy/Sell: Represent trading positions with attributes such as:

Instrument type

Quantity

Price

Time

Expiration

Long/Short: Represent trading positions with attributes such as:

Instrument type

Quantity

Price

Time

Expiration
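Because long and short positions carry the same attributes, they can share one record type in which the sign of the quantity encodes the side. The sketch below assumes that convention (positive quantity for long, negative for short), which is common but is not stated in the response; the `Position` class is hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Position:
    symbol: str
    quantity: float  # positive = long, negative = short (assumed convention)
    entry_price: float

    @property
    def side(self) -> str:
        if self.quantity > 0:
            return "long"
        if self.quantity < 0:
            return "short"
        return "flat"

    def unrealized_pnl(self, mark_price: float) -> float:
        """(mark - entry) * signed quantity: longs gain when price rises, shorts when it falls."""
        return (mark_price - self.entry_price) * self.quantity

    def market_value(self, mark_price: float) -> float:
        """Signed market value at the given mark price."""
        return mark_price * self.quantity
```

Using a signed quantity lets portfolio-level aggregates such as net exposure be computed with a plain sum.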

Market Data

Open, High, Low, Close: Represent market data with attributes such as:

Time

Instrument symbol

Price

Open, High, Low, Close

Volume: Represent market data with attributes such as:

Time

Instrument symbol

Price

Volume
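The Open, High, Low, Close, and Volume fields are usually derived by aggregating individual trades (ticks) into fixed time bars. A minimal sketch, assuming ticks arrive as `(epoch_seconds, price, volume)` tuples already sorted by time; `ohlcv_bars` is a hypothetical helper name.

```python
def ohlcv_bars(ticks, bar_seconds=60):
    """Aggregate time-sorted (epoch_seconds, price, volume) ticks into OHLCV bars."""
    bars = {}  # bar start -> bar dict; dicts keep insertion order
    for ts, price, volume in ticks:
        start = int(ts // bar_seconds) * bar_seconds  # floor to the bar boundary
        bar = bars.get(start)
        if bar is None:
            # First tick in this bar sets open/high/low/close.
            bars[start] = {"start": start, "open": price, "high": price,
                           "low": price, "close": price, "volume": volume}
        else:
            bar["high"] = max(bar["high"], price)
            bar["low"] = min(bar["low"], price)
            bar["close"] = price  # latest tick so far becomes the close
            bar["volume"] += volume
    return list(bars.values())
```

Bars with no trades are simply absent; whether to forward-fill them is a separate design decision.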

Risk Factors

Volatility: Represent risk factors with attributes such as:

Value

Risk

Sensitivity

Leverage: Represent risk factors with attributes such as:

Value

Risk

Sensitivity

Liquidity: Represent risk factors with attributes such as:

Value

Risk

Sensitivity
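As an example of how the value of the volatility risk factor might be computed, historical volatility is commonly estimated as the sample standard deviation of log returns, annualized by the square root of the number of periods per year. The sketch assumes daily closing prices and 252 trading days per year; other conventions exist.

```python
import math
import statistics


def annualized_volatility(closes, periods_per_year=252):
    """Sample standard deviation of log returns, scaled by sqrt(periods_per_year)."""
    if len(closes) < 3:
        raise ValueError("need at least three prices to get two returns")
    # Log return between consecutive closes: ln(P_t / P_{t-1}).
    log_returns = [math.log(b / a) for a, b in zip(closes, closes[1:])]
    return statistics.stdev(log_returns) * math.sqrt(periods_per_year)
```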

Retail industry

Scenario

An e-commerce platform anticipates a significant increase in orders during the upcoming holiday season. Its current data infrastructure struggles to handle the expected surge in data volume, velocity, and variety, so the firm's data engineers are proposing to enhance it. Discussions are underway on scalable storage, better processing capabilities, and real-time analytics.

Input 1

To get suggestions from generative AI on this problem statement, you can use the following prompt.

How should an e-commerce platform enhance its data infrastructure so that it can handle an increase in traffic? Suggest improvements in terms of:

1. scalable storage,

2. better processing capabilities and

3. real-time analytics.

Response 1

To enhance the data infrastructure of an e-commerce platform to handle an increase in traffic, the following improvements can be suggested:

1. Scalable Storage:

Cloud-based Storage: Consider using a cloud-based storage solution such as Amazon S3, Google Cloud Storage, or Microsoft Azure Blob Storage. These services provide scalable storage with high availability, durability, and performance.

Object Storage: Use object storage to store large files, such as product images, videos, and documents. This allows for efficient storage and retrieval of large files without the need for complex indexing and querying.

Distributed Storage: Implement a distributed storage system, such as Amazon S3 or Google Cloud Storage, to store data across multiple servers, ensuring that data is not lost in case of a single server failure.

2. Better Processing Capabilities:

Distributed Computing: Use a distributed computing framework, such as Apache Spark or Hadoop, to process large amounts of data in parallel, reducing processing time and improving overall performance.

GPU Acceleration: Leverage the power of Graphics Processing Units (GPUs) to accelerate data processing, machine learning, and other computationally intensive tasks.

Cloud-based Infrastructure: Utilize cloud-based infrastructure, such as Amazon EC2 or Google Compute Engine, to provide scalable and on-demand computing resources.

3. Real-time Analytics:

Real-time Data Ingestion: Implement a real-time data ingestion system, such as Apache Kafka (often paired with a stream processor such as Apache Flink), to collect and process data from various sources in real time.

Data Processing Pipelines: Create data processing pipelines to transform, aggregate, and analyze data in real-time, enabling fast and efficient data analysis.

Real-time Analytics Tools: Use streaming analytics tools, such as Apache Spark Structured Streaming or Apache Beam, to analyze data as it arrives and surface insights in real time.
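Streaming tools such as Apache Flink and Apache Beam commonly aggregate events over tumbling (fixed, non-overlapping) windows. The sketch below is a plain-Python, in-memory illustration of that windowing logic only, not the API of either framework; the event shape `(epoch_seconds, order_amount)` is an assumption.

```python
from collections import defaultdict


def tumbling_window_orders(events, window_seconds=60):
    """Count orders and sum their amounts per fixed, non-overlapping time window.

    events: iterable of (epoch_seconds, order_amount) tuples, in any order.
    Returns {window_start: (order_count, total_amount)} ordered by window start.
    """
    counts = defaultdict(int)
    totals = defaultdict(float)
    for ts, amount in events:
        window_start = int(ts // window_seconds) * window_seconds
        counts[window_start] += 1
        totals[window_start] += amount
    return {w: (counts[w], totals[w]) for w in sorted(counts)}
```

A real stream processor would emit each window's result once the window closes instead of waiting for all events.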

Additional Recommendations:

Load Balancing: Implement load balancing to distribute traffic across multiple servers, ensuring that no single server becomes a bottleneck.

Caching: Use caching mechanisms, such as Redis or Memcached, to reduce the load on the database and improve response times.

Monitoring and Alerting: Implement monitoring and alerting tools, such as Prometheus or New Relic, to detect and respond to performance issues in real-time.

Security: Ensure that the data infrastructure is secure, with measures such as encryption, access controls, and regular security audits to protect sensitive data.
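The caching recommendation usually follows a cache-aside pattern: read from the cache, fall back to the database on a miss, then populate the cache with an expiry. This sketch uses a tiny in-process `TTLCache` class as a stand-in for Redis or Memcached (it does not use their client APIs); `get_product` and `load_from_db` are hypothetical names.

```python
import time


class TTLCache:
    """Tiny in-process stand-in for a Redis/Memcached cache with per-entry expiry."""

    def __init__(self, ttl_seconds: float, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock  # injectable so expiry can be tested without sleeping
        self._store = {}

    def get(self, key):
        entry = self._store.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self.clock() >= expires_at:
            del self._store[key]  # lazily evict expired entries on read
            return None
        return value

    def set(self, key, value):
        self._store[key] = (value, self.clock() + self.ttl)


def get_product(product_id, cache, load_from_db):
    """Cache-aside read: try the cache, else load from the database and cache the result."""
    cached = cache.get(product_id)
    if cached is not None:
        return cached
    value = load_from_db(product_id)
    cache.set(product_id, value)
    return value
```

The expiry bounds how stale a cached product can get, trading freshness for reduced database load during traffic peaks.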

By implementing these improvements, an e-commerce platform can handle an increase in traffic, improve scalability, and provide real-time analytics to support business growth and customer satisfaction.

Healthcare industry

Scenario

A healthcare provider wants to establish a central data repository for research purposes. This data lake would hold patient data, medical records, and research studies. The firm's data engineers are discussing the implementation of big data management tools, data ingestion and transformation capabilities, and data security and compliance guidelines.

Input 1

To get suggestions from generative AI on this problem statement, you can use the following prompt:

How should a healthcare company set up a data lake infrastructure that is capable of the following:

1. Big data management

2. data ingestion from various sources

3. data transformation

4. data security and compliance to regulatory guidelines

Response 1

To set up a data lake infrastructure that can handle the requirements of big data management, data ingestion from various sources, data transformation, and data security and compliance to regulatory guidelines, a healthcare company should consider the following steps:

Big Data Management:

Data Ingestion:

Use a scalable and flexible data ingestion platform, such as Apache Kafka, Apache Flume, or Amazon Kinesis, to handle large volumes of data from various sources.

Implement a data pipeline to collect, process, and transform data from different sources, such as EHRs, claims, and external data sources.

Data Storage:

Use a scalable and durable data storage solution, such as Amazon S3, Google Cloud Storage, or Azure Blob Storage, to store the ingested data.

Implement data compression and caching to reduce storage costs and improve data retrieval efficiency.

Data Management:

Use a data warehousing platform, such as Amazon Redshift, Google BigQuery, or Azure Synapse Analytics, to store and analyze the ingested data.

Implement data governance and metadata management to ensure data quality, accuracy, and compliance with regulatory guidelines.

Data Ingestion from Various Sources:

Data Integration:

Use APIs, message queues, or file-based integration tools, such as Apache NiFi, Apache Airflow, or AWS Glue, to integrate data from various sources.

Implement data mapping and transformation rules to convert data formats and extract relevant information.

Data Transformation:

Use data transformation tools, such as Apache Spark, Apache Flink, or Apache Beam, to transform data in real-time or batch mode.

Implement data quality checks and validation to ensure data accuracy and consistency.

Data Quality and Validation:

- Implement data quality checks and validation, for example by enforcing schemas defined in formats such as Apache Avro or Apache Parquet, to ensure data accuracy and consistency.
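A minimal sketch of record-level data quality checks that could run in the ingestion pipeline before data lands in the lake. The required fields and the allowed sex codes are hypothetical; real rules would come from the organization's own data standards.

```python
from datetime import date

# Hypothetical rules for an incoming patient record.
REQUIRED_FIELDS = ("patient_id", "birth_date", "sex")
ALLOWED_SEX_CODES = {"F", "M", "O", "U"}


def validate_patient(record: dict) -> list:
    """Return a list of data quality errors; an empty list means the record passed."""
    errors = [f"missing {f}" for f in REQUIRED_FIELDS if not record.get(f)]
    birth = record.get("birth_date")
    if birth:
        try:
            if date.fromisoformat(birth) > date.today():
                errors.append("birth_date is in the future")
        except ValueError:
            errors.append("birth_date is not ISO-8601 (YYYY-MM-DD)")
    sex = record.get("sex")
    if sex and sex not in ALLOWED_SEX_CODES:
        errors.append(f"unknown sex code {sex!r}")
    return errors
```

Returning all errors at once, instead of stopping at the first, makes it easier to route rejected records to a quarantine area with a complete diagnosis.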

Data Security and Compliance:

Data Encryption:

Encrypt data in transit with SSL/TLS and at rest with strong ciphers such as AES, managing the encryption keys with a key management service (KMS).

Implement data access controls, such as role-based access control (RBAC) and attribute-based access control (ABAC).

Data Access Controls:

Implement data access controls, such as authentication and authorization, to ensure only authorized personnel can access the data.

Use data masking and anonymization techniques to protect sensitive data.
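Pseudonymization and masking can be sketched with Python's standard `hmac` and `hashlib` modules. This is an illustration under assumptions (which fields count as direct identifiers, how phone numbers are masked); keyed hashing alone does not make a dataset de-identified under HIPAA, which defines its own specific criteria.

```python
import hashlib
import hmac


def pseudonymize(patient_id: str, secret_key: bytes) -> str:
    """Keyed hash of the ID: stable across datasets, not reversible without the key."""
    return hmac.new(secret_key, patient_id.encode("utf-8"), hashlib.sha256).hexdigest()


def mask_phone(phone: str) -> str:
    """Keep only the last four digits of a phone number."""
    digits = [c for c in phone if c.isdigit()]
    return "*" * max(len(digits) - 4, 0) + "".join(digits[-4:])


def deidentify(record: dict, secret_key: bytes) -> dict:
    """Research copy: drop assumed direct identifiers, pseudonymize the ID, mask the phone."""
    out = {k: v for k, v in record.items() if k not in {"name", "address"}}
    out["patient_id"] = pseudonymize(record["patient_id"], secret_key)
    if "phone" in record:
        out["phone"] = mask_phone(record["phone"])
    return out
```

Using a keyed HMAC rather than a plain hash prevents someone without the key from re-identifying patients by hashing candidate IDs.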

Compliance:

Implement data governance policies and procedures to ensure compliance with regulatory guidelines, such as HIPAA (including the HITECH Act amendments) and GDPR.

Use data mapping and transformation tools to ensure data is compliant with regulatory requirements.

Additional Considerations:

Scalability:

- Design the infrastructure to scale horizontally, using load balancers, auto-scaling, and caching to handle large volumes of data.

Performance:

- Optimize data processing and storage performance using data compression, caching, and indexing.

Monitoring and Maintenance:

Implement monitoring tools, such as Prometheus, Grafana, or New Relic, to monitor data pipeline performance and detect issues.

Regularly maintain and update the infrastructure to ensure it remains secure, scalable, and efficient.

By following these steps, a healthcare company can set up a robust data lake infrastructure that can handle the requirements of big data management, data ingestion from various sources, data transformation, and data security and compliance to regulatory guidelines.

Finance industry

Scenario

A financial services company wants to implement machine learning models to detect fraudulent transactions in real time. Supporting these models requires a robust data infrastructure, so data engineers must set up high-performance computing machinery, feature engineering pipelines, predictive modeling, and model deployment and monitoring.

Input 1

To get suggestions from generative AI on this problem statement, you can use the following prompt.

How should a financial firm set up their infrastructure if they want to detect fraudulent transactions in real time? Suggest specifics in terms of:

1. Computing machinery

2. Feature engineering pipeline

3. Predictive modeling pipeline

4. Model deployment and monitoring

Response 1

The generated solution can be worded differently every time, but the broad structure of the response remains the same. One example response from the GenAI system is shared below.

To enable a financial firm to detect fraudulent transactions in real time, a comprehensive infrastructure should be designed with the following specifics:

Computing Machinery:

High-performance computing hardware: Use powerful servers with multiple CPU cores, high-speed storage, and ample RAM to handle large datasets and complex computations.

GPU acceleration: Utilize Graphics Processing Units (GPUs) for tasks like data preprocessing, feature engineering, and model training, which are computationally intensive.

Cloud-based infrastructure: Leverage cloud services like Amazon Web Services (AWS), Google Cloud Platform (GCP), or Microsoft Azure to provide scalable, on-demand computing resources.

Data storage: Use a distributed storage solution like Amazon S3, Google Cloud Storage, or Azure Blob Storage to store large datasets.

Feature Engineering Pipeline:

Data ingestion: Set up a data ingestion pipeline to collect, transform, and preprocess data from various sources, such as databases, APIs, or file systems.

Feature engineering: Design a feature engineering pipeline to extract relevant features from the data, including:

Text features (e.g., sentiment analysis, named entity recognition)

Numerical features (e.g., transaction amounts, time intervals)

Geospatial features (e.g., transaction location, merchant location)

Time-series features (e.g., transaction timestamps, transaction amounts)

Data preprocessing: Apply data preprocessing techniques like data normalization, feature scaling, and data augmentation to improve model performance.

Feature selection: Select relevant features that are most informative for the specific problem at hand.
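The numerical and time-based features listed above could be computed per card as follows. This is a minimal sketch: the transaction keys (`card_id`, `ts`, `amount`) and the feature names are hypothetical. Each feature uses only a card's earlier transactions, so the same logic could serve both training and real-time scoring without leaking future information.

```python
from collections import defaultdict


def transaction_features(transactions):
    """Per-card features for each transaction, processed in time order.

    seconds_since_prev  - gap to the card's previous transaction (None for the first)
    amount_to_avg_ratio - amount / average of the card's earlier amounts (None for the first)
    txn_count_so_far    - number of earlier transactions on the card
    """
    history = defaultdict(list)  # card_id -> [(ts, amount), ...] seen so far
    features = []
    for txn in sorted(transactions, key=lambda t: t["ts"]):
        past = history[txn["card_id"]]
        if past:
            avg = sum(a for _, a in past) / len(past)
            gap = txn["ts"] - past[-1][0]
            ratio = txn["amount"] / avg if avg else None
        else:
            gap, ratio = None, None
        features.append({
            "card_id": txn["card_id"],
            "ts": txn["ts"],
            "seconds_since_prev": gap,
            "amount_to_avg_ratio": ratio,
            "txn_count_so_far": len(past),
        })
        past.append((txn["ts"], txn["amount"]))  # update history after computing features
    return features
```

A sudden spike in `amount_to_avg_ratio` combined with a short `seconds_since_prev` is the kind of pattern a downstream model could learn to flag.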

Predictive Modeling Pipeline:

Machine learning algorithms: Choose suitable machine learning algorithms for real-time fraud detection, such as:

Supervised learning algorithms (e.g., logistic regression, decision trees, random forests)

Unsupervised learning algorithms (e.g., clustering, dimensionality reduction)

Model training: Train the chosen algorithms on a large dataset of labeled transactions to develop a model that can detect fraudulent transactions.

Model deployment: Deploy the trained model in a production-ready environment, for example behind a model-serving endpoint, exposed through an API gateway, or packaged in a container (e.g., Docker).

Model monitoring: Continuously monitor the model’s performance, accuracy, and latency to ensure it remains effective in real-time.
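Once a supervised model such as logistic regression is trained, scoring a transaction in real time reduces to a weighted sum passed through the sigmoid function. The weights, bias, feature names, and 0.5 threshold below are invented for illustration; in practice they would come from model training and validation.

```python
import math

# Hypothetical coefficients, as if learned offline by logistic regression.
WEIGHTS = {"amount_to_avg_ratio": 0.8, "is_foreign": 1.5, "night_time": 0.7}
BIAS = -4.0


def fraud_probability(features: dict) -> float:
    """sigmoid(bias + sum(weight * feature)); missing or None features count as 0."""
    z = BIAS + sum(w * float(features.get(name) or 0.0) for name, w in WEIGHTS.items())
    return 1.0 / (1.0 + math.exp(-z))


def decide(features: dict, threshold: float = 0.5) -> str:
    """Map the probability to an action; the threshold would be tuned on validation data."""
    return "review" if fraud_probability(features) >= threshold else "approve"
```

Because scoring is just arithmetic on precomputed features, latency is dominated by feature lookup rather than by the model itself.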

Model Deployment and Monitoring:

Model serving: Use a model serving platform like Amazon SageMaker, Google Cloud Vertex AI (formerly AI Platform), or Azure Machine Learning to deploy the trained model in a scalable and secure manner.

Model monitoring: Set up monitoring tools like Prometheus, Grafana, or New Relic to track model performance, latency, and errors in real-time.

Alerting and notification: Configure alerting and notification systems to notify the team when the model detects a suspicious transaction or when the model’s performance degrades.

Continuous integration and deployment: Implement continuous integration and continuous deployment (CI/CD) pipelines to automate the process of building, testing, and deploying the model in response to changes in the data or model performance.
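One way to detect the performance degradation mentioned above, before fraud labels are available, is to compare the distribution of recent model scores with a baseline using the population stability index (PSI). This sketch is an illustration under assumptions: the bin edges and epsilon are arbitrary choices, and the response itself does not prescribe PSI.

```python
import math


def population_stability_index(expected, actual, bins):
    """PSI = sum((a_i - e_i) * ln(a_i / e_i)) over bins.

    e_i and a_i are the fractions of the baseline and recent samples falling in bin i.
    A small epsilon replaces empty-bin fractions to avoid log(0).
    """
    eps = 1e-6

    def fractions(values):
        counts = [0] * (len(bins) - 1)
        for v in values:
            for i in range(len(bins) - 1):
                is_last_bin = i == len(bins) - 2
                # Bins are half-open [lo, hi), except the last also includes its upper edge.
                if bins[i] <= v < bins[i + 1] or (is_last_bin and v == bins[-1]):
                    counts[i] += 1
                    break
        total = max(sum(counts), 1)
        return [max(c / total, eps) for c in counts]

    e, a = fractions(expected), fractions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))
```

A commonly quoted rule of thumb treats a PSI above roughly 0.25 as a significant shift worth alerting on; teams usually tune this threshold for their own data.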

Example architecture:

    +------------------+
    | Data Ingestion   |
    +------------------+
    | -> Data          |
    | -> Feature       |
    | -> Preprocessing |
    | -> Model         |
    | -> Serving       |
    +------------------+

This architecture consists of:

Data ingestion pipeline: collects and preprocesses data from various sources.

Feature engineering pipeline: extracts relevant features from the data.

Model training pipeline: trains a machine learning model on the preprocessed data.

Model serving pipeline: deploys the trained model in a production-ready environment.

Model monitoring pipeline: monitors the model’s performance and latency in real-time.

By following this architecture, a financial firm can set up a robust infrastructure to detect fraudulent transactions in real-time, leveraging the power of machine learning and cloud computing.