Car Counter Y8

We will use part of what we covered in masks, regions and line/threshold to create a car counter model.

Setup

After:

- creating a folder

- creating a venv

- activating the environment

install the following:

```shell
pip install ultralytics

# optional: ultralytics already installs opencv-python as a dependency
# pip install opencv-python

# If using Jupyter Notebook, create a Jupyter kernel
pip install ipykernel

# register the kernel
python -m ipykernel install --user --name=venv_opencv --display-name "Py venv_opencv"

# open the notebook
jupyter notebook
```

We will copy the code we used earlier to detect objects from the video and start from there.

```python
from ultralytics import YOLO
import cv2
import math
from cv_utils import *  # helper module from the earlier pages (provides putTextRect)

cap = cv2.VideoCapture("../cars.mp4")  # for video

win_name = "Car Counter"

model = YOLO("../Yolo-Weights/yolov8l.pt")

# List of class names
classNames = ["person", "bicycle", "car", "motorbike", "aeroplane", "bus", "train", "truck", "boat",
              "traffic light", "fire hydrant", "stop sign", "parking meter", "bench", "bird", "cat",
              "dog", "horse", "sheep", "cow", "elephant", "bear", "zebra", "giraffe", "backpack", "umbrella",
              "handbag", "tie", "suitcase", "frisbee", "skis", "snowboard", "sports ball", "kite", "baseball bat",
              "baseball glove", "skateboard", "surfboard", "tennis racket", "bottle", "wine glass", "cup",
              "fork", "knife", "spoon", "bowl", "banana", "apple", "sandwich", "orange", "broccoli",
              "carrot", "hot dog", "pizza", "donut", "cake", "chair", "sofa", "pottedplant", "bed",
              "diningtable", "toilet", "tvmonitor", "laptop", "mouse", "remote", "keyboard", "cell phone",
              "microwave", "oven", "toaster", "sink", "refrigerator", "book", "clock", "vase", "scissors",
              "teddy bear", "hair drier", "toothbrush"
              ]

while cap.isOpened():
    # Read frame from video
    success, frame = cap.read()

    if success:  # if the frame was read successfully, run the model on it
        results = model(frame, stream=True)

        # Box extraction
        for r in results:
            boxes = r.boxes
            for box in boxes:
                x1, y1, x2, y2 = box.xyxy[0]
                x1, y1, x2, y2 = int(x1), int(y1), int(x2), int(y2)  # convert values to integers
                cv2.rectangle(frame, (x1, y1), (x2, y2), (0, 255, 0), 3)
                # we can also use a function from cvzone/utils.py called
                # cvzone.cornerRect(img, (x1, y1, w, h))

                # extract the confidence level, rounded up to 2 decimals
                conf = math.ceil(box.conf[0] * 100) / 100

                # extract class ID
                cls = int(box.cls[0])

                # display both conf & class name on frame
                putTextRect(frame, f'{conf} {classNames[cls]}', (max(0, x1), max(35, y1)), scale=0.6, thickness=1, offset=5)

        cv2.imshow(win_name, frame)

        if cv2.waitKey(1) == 27:
            break  # user pressed the ESC key

    else:
        break  # end of video reached

# Release video capture object and close display window
cap.release()
cv2.destroyAllWindows()
```

The only change from the previous code is the source file, which is now cars.mp4.

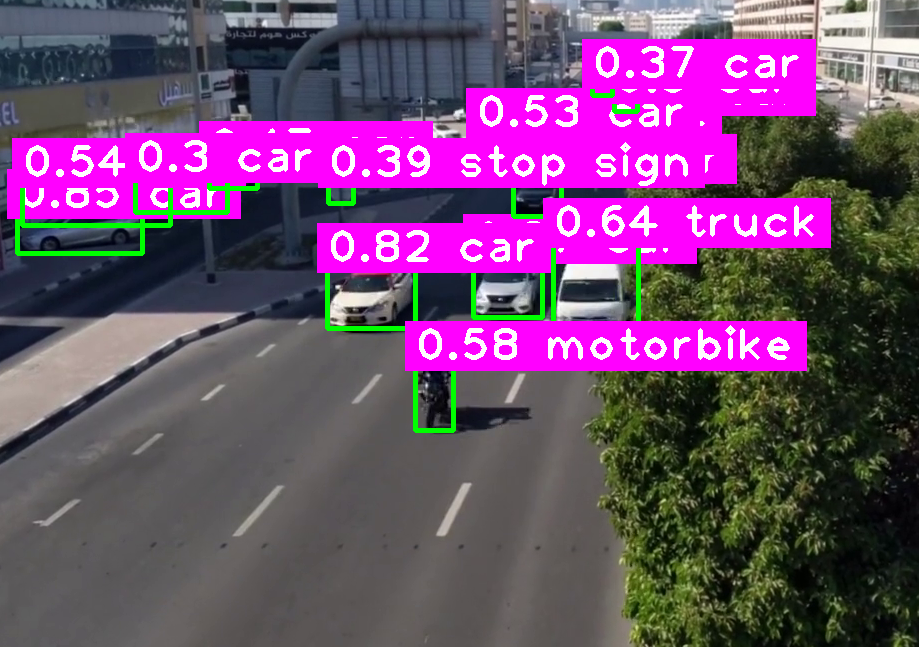

Output

```text
0: 384x640 1 person, 14 cars, 1 truck, 1 traffic light, 1 stop sign, 564.7ms
Speed: 8.9ms preprocess, 564.7ms inference, 42.6ms postprocess per image at shape (1, 3, 384, 640)

0: 384x640 1 person, 14 cars, 1 truck, 1 traffic light, 1 stop sign, 403.3ms
Speed: 2.2ms preprocess, 403.3ms inference, 0.9ms postprocess per image at shape (1, 3, 384, 640)

0: 384x640 1 person, 16 cars, 1 truck, 1 traffic light, 1 stop sign, 377.7ms
Speed: 1.4ms preprocess, 377.7ms inference, 0.9ms postprocess per image at shape (1, 3, 384, 640)

0: 384x640 1 person, 16 cars, 1 traffic light, 1 stop sign, 373.4ms
Speed: 2.4ms preprocess, 373.4ms inference, 1.0ms postprocess per image at shape (1, 3, 384, 640)
```

As you can see, the model also detects the parked cars on the shoulder that are not moving. So we have to narrow the detection region, using either a mask or regions.
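The other option named here is a region. A region filters detections rather than pixels: a box is kept only if its centre lies inside a polygon drawn over the lanes.

Below is a minimal pure-Python sketch using the standard ray-casting point-in-polygon test. Some points to note:

- `point_in_polygon`, `box_in_region` and the `road` coordinates are hypothetical.
- In the real loop, x1, y1, x2, y2 would come from `box.xyxy`.
- OpenCV's `cv2.pointPolygonTest` offers the same inside/outside check.

```python
def point_in_polygon(x, y, polygon):
    """Ray-casting test: True if (x, y) lies inside the polygon.

    polygon is a list of (x, y) vertices in order; points exactly on an
    edge may be classified either way.
    """
    inside = False
    n = len(polygon)
    for i in range(n):
        xa, ya = polygon[i]
        xb, yb = polygon[(i + 1) % n]
        # does this edge straddle the horizontal line through y?
        if (ya > y) != (yb > y):
            # x coordinate where the edge crosses that horizontal line
            x_cross = xa + (y - ya) * (xb - xa) / (yb - ya)
            if x < x_cross:
                inside = not inside  # each crossing to the right flips the state
    return inside


def box_in_region(x1, y1, x2, y2, polygon):
    """Keep a detection only if the centre of its bounding box is inside the region."""
    cx, cy = (x1 + x2) / 2, (y1 + y2) / 2
    return point_in_polygon(cx, cy, polygon)


# Hypothetical region: a quadrilateral covering the driving lanes (pixel coordinates)
road = [(300, 200), (700, 200), (900, 600), (100, 600)]

print(box_in_region(450, 300, 550, 380, road))  # True: centre (500, 340) is on the road
print(box_in_region(0, 300, 60, 360, road))     # False: centre (30, 330) is on the shoulder
```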

Adjust Text Size

- Before we get started with the mask, let's fix a cosmetic issue. The label box is too big, so we scale it down by editing the putTextRect() line above to:

```python
# display both conf & class name on frame, scaled down because the label box was too big
putTextRect(frame, f'{conf} {classNames[cls]}', (max(0, x1), max(35, y1)), scale=0.6, thickness=1, offset=5)
```
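The position argument `(max(0, x1), max(35, y1))` clamps where the label is anchored. This sketch assumes that cv_utils.putTextRect behaves like cvzone's putTextRect, which anchors the text at its bottom-left corner and draws the label box above that point.

Under that assumption, the minimum y of 35 leaves room for the label when a car touches the top edge of the frame. The helper `label_origin` is hypothetical and only isolates that clamping.

```python
def label_origin(x1, y1, min_x=0, min_y=35):
    """Clamp the label anchor so the label box stays inside the frame.

    Assumes the anchor is the bottom-left corner of the text (as in cvzone's
    putTextRect), so the label box extends upwards from (x, y).
    """
    return max(min_x, x1), max(min_y, y1)


print(label_origin(120, 240))  # (120, 240): box well inside the frame, unchanged
print(label_origin(-4, 12))    # (0, 35): hypothetical box at the top-left corner, label pushed in
```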

Filter Classes

Let's say that out of the long list of classes we only want to detect:

- Car

- Bus

- Truck

So we need an if statement to exclude the other classes:

- we already have cls as the class ID

- so we can look up its name in the classNames list and store it in wantedClass

- then we keep only the detections whose wantedClass is one of the wanted classes

- we can also filter by confidence level with conf (here we keep only conf > 0.3)

- Let's also change cv2.waitKey(1) to cv2.waitKey(0). A delay of 0 makes OpenCV wait indefinitely for a key press, so the video stops at each frame. The space bar shows the next frame and ESC (key code 27) exits.
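To check the class and confidence filter without running the model, here is a small pure-Python sketch with made-up detections; the function names and values are hypothetical.

It also shows why the condition needs care. In Python, `and` binds more tightly than `or`. A chained condition like `name == "car" or name == "bus" or name == "truck" and conf > 0.3` therefore applies the 0.3 threshold only to trucks. Writing `name in (...) and conf > 0.3` applies it to all three classes.

`round_up_2dp` mirrors the `math.ceil(conf * 100) / 100` rounding used in the loop.

```python
import math

WANTED = ("car", "bus", "truck")
MIN_CONF = 0.3


def round_up_2dp(raw_conf):
    """Round a confidence score up to 2 decimals, like math.ceil(conf * 100) / 100 in the loop."""
    return math.ceil(raw_conf * 100) / 100


def keep_chained(name, conf):
    # Parsed as: car or bus or (truck and conf > 0.3)
    return name == "car" or name == "bus" or name == "truck" and conf > MIN_CONF


def keep(name, conf):
    # Intended: one of the wanted classes AND confident enough
    return name in WANTED and conf > MIN_CONF


raw = [("car", 0.8731), ("car", 0.1234), ("truck", 0.1234), ("person", 0.95)]
scored = [(name, round_up_2dp(c)) for name, c in raw]

print([d for d in scored if keep_chained(*d)])  # [('car', 0.88), ('car', 0.13)]
print([d for d in scored if keep(*d)])          # [('car', 0.88)]
```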

```python
# extract class ID
cls = int(box.cls[0])
wantedClass = classNames[cls]

# `in` + `and` applies the 0.3 threshold to all three classes
if wantedClass in ("car", "bus", "truck") and conf > 0.3:
    putTextRect(frame, f'{conf} {classNames[cls]}', (max(0, x1), max(35, y1)), scale=0.6, thickness=1, offset=5)
```

So our code becomes:

```python
from ultralytics import YOLO
import cv2
import math
from cv_utils import *  # helper module from the earlier pages (provides putTextRect)

cap = cv2.VideoCapture("../cars.mp4")  # for video

win_name = "Car Counter"

model = YOLO("../Yolo-Weights/yolov8l.pt")

# List of class names
classNames = ["person", "bicycle", "car", "motorbike", "aeroplane", "bus", "train", "truck", "boat",
              "traffic light", "fire hydrant", "stop sign", "parking meter", "bench", "bird", "cat",
              "dog", "horse", "sheep", "cow", "elephant", "bear", "zebra", "giraffe", "backpack", "umbrella",
              "handbag", "tie", "suitcase", "frisbee", "skis", "snowboard", "sports ball", "kite", "baseball bat",
              "baseball glove", "skateboard", "surfboard", "tennis racket", "bottle", "wine glass", "cup",
              "fork", "knife", "spoon", "bowl", "banana", "apple", "sandwich", "orange", "broccoli",
              "carrot", "hot dog", "pizza", "donut", "cake", "chair", "sofa", "pottedplant", "bed",
              "diningtable", "toilet", "tvmonitor", "laptop", "mouse", "remote", "keyboard", "cell phone",
              "microwave", "oven", "toaster", "sink", "refrigerator", "book", "clock", "vase", "scissors",
              "teddy bear", "hair drier", "toothbrush"
              ]

while cap.isOpened():
    # Read frame from video
    success, frame = cap.read()

    if success:  # if the frame was read successfully, run the model on it
        results = model(frame, stream=True)

        # Box extraction
        for r in results:
            boxes = r.boxes
            for box in boxes:
                x1, y1, x2, y2 = box.xyxy[0]
                x1, y1, x2, y2 = int(x1), int(y1), int(x2), int(y2)  # convert values to integers
                cv2.rectangle(frame, (x1, y1), (x2, y2), (0, 255, 0), 3)
                # we can also use a function from cvzone/utils.py called
                # cvzone.cornerRect(img, (x1, y1, w, h))

                # extract the confidence level
                conf = math.ceil(box.conf[0] * 100) / 100

                # extract class ID
                cls = int(box.cls[0])
                wantedClass = classNames[cls]

                # filter out unwanted classes and low-confidence detections
                if wantedClass in ("car", "bus", "truck") and conf > 0.3:
                    # display both conf & class name on frame (label scaled down, it was too big)
                    putTextRect(frame, f'{conf} {classNames[cls]}', (max(0, x1), max(35, y1)), scale=0.6, thickness=1, offset=5)

        cv2.imshow(win_name, frame)

        key = cv2.waitKey(0)  # wait indefinitely for a key press
        if key == ord(" "):  # space bar: display the next frame
            continue
        elif key == 27:  # ESC: exit
            break
        # any other key also moves on to the next frame

    else:
        break  # end of video reached

# Release video capture object and close display window
cap.release()
cv2.destroyAllWindows()
```
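The key handling at the end of the loop can be tried without a video. `key_action` is a hypothetical pure-Python stand-in, not an OpenCV function. It mirrors the decision the loop makes on the value returned by `cv2.waitKey(0)`:

- ESC (27) quits.
- The space bar (`ord(" ")` is 32), like any other key, moves on to the next frame.

The `& 0xFF` mask is an addition, not part of the original code. It is a common OpenCV idiom because on some builds `waitKey()` can return extra bits above the key code.

```python
ESC = 27


def key_action(key):
    """Return "quit" for ESC, otherwise "next" (space bar or any other key)."""
    key &= 0xFF  # keep only the low byte of the key code
    if key == ESC:
        return "quit"
    return "next"


print(key_action(ord(" ")))  # next
print(key_action(27))        # quit
```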

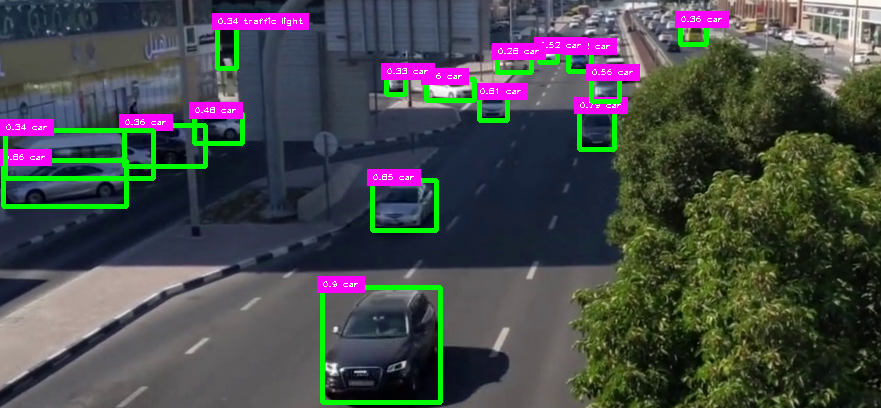

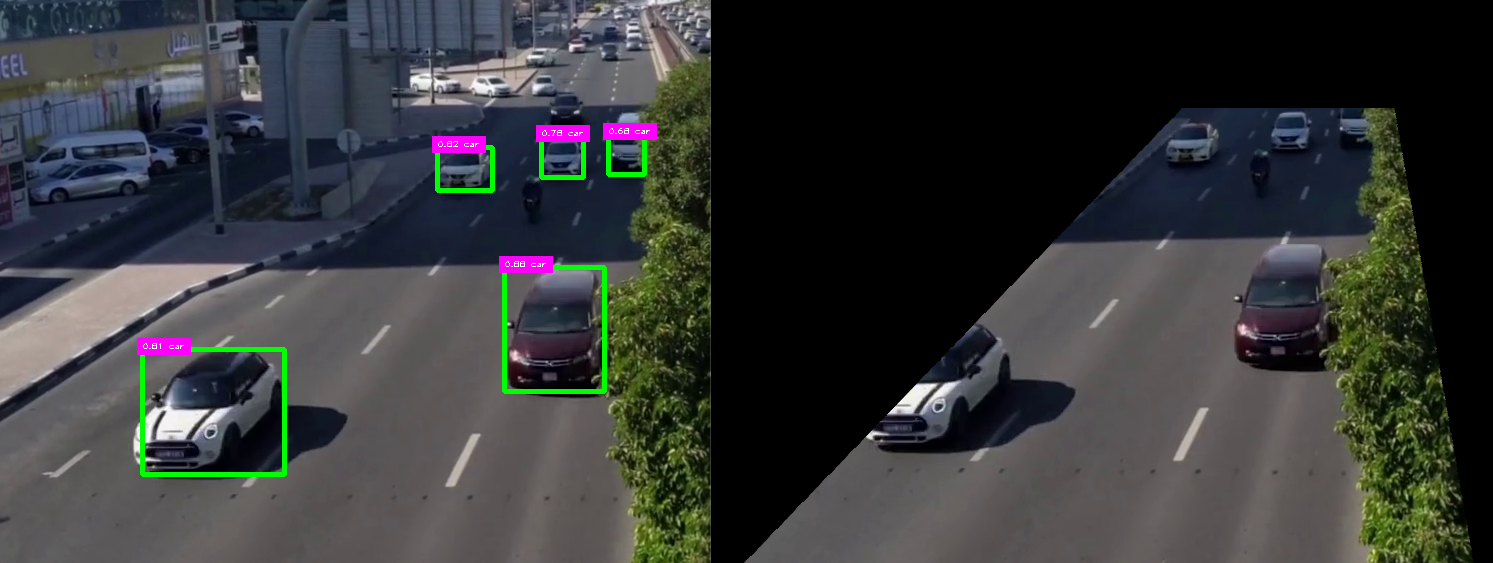

Omit Unwanted BB

- As you can see above, objects of unwanted classes are still detected and drawn with a bounding box (BB), just without a label. We don't want the BB drawn for them either.

- So all we do is move the cv2.rectangle() line inside the if statement for the wanted classes.

- As you can see below, the person on the motorcycle is no longer shown with a BB.

- The stop sign is no longer displayed either.

- So what's left is to mask out the unwanted areas of the image.

- For the explanation, refer to the masking page; here I'll just include the code without explanation.

```python
if wantedClass in ("car", "bus", "truck") and conf > 0.3:
    # draw the BB only for wanted classes
    cv2.rectangle(frame, (x1, y1), (x2, y2), (0, 255, 0), 3)
    # display both conf & class name on frame (label scaled down, it was too big)
    putTextRect(frame, f'{conf} {classNames[cls]}', (max(0, x1), max(35, y1)), scale=0.6, thickness=1, offset=5)
```

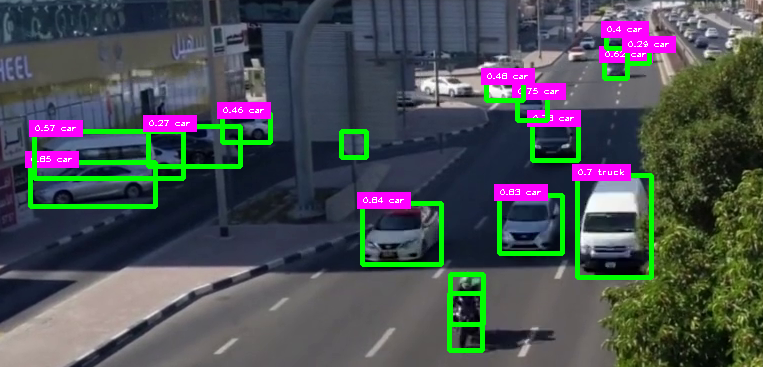

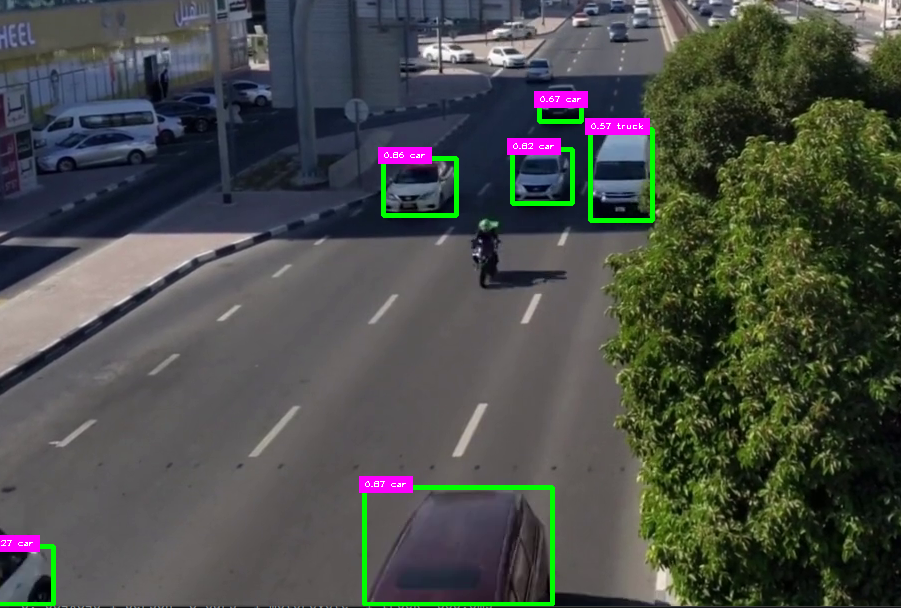

Mask

- If we just add the mask, we get the image below.

- You can clearly see that cars will not be detected until they enter the desired region.

- More details about masking are on the masking page.
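`cv2.bitwise_and(frame, mask)` combines the two images pixel by pixel with a bitwise AND. The sketch below runs the same operation on tiny nested-list "images"; `apply_mask` and the pixel values are hypothetical stand-ins for the NumPy arrays OpenCV actually uses. It shows why a black-and-white mask works:

- `v & 255 == v` keeps a pixel unchanged.
- `v & 0 == 0` blacks it out.

It also shows why the mask must have the same size as the frame, since OpenCV raises an error when the sizes differ. If car_counter_mask1.png does not match the video resolution, one option (not part of the original code) is `cv2.resize(mask, (frame.shape[1], frame.shape[0]))`.

```python
def apply_mask(frame, mask):
    """Pixel-wise AND of two same-sized 8-bit images given as nested lists of [B, G, R].

    Mirrors cv2.bitwise_and(frame, mask): white (255) mask pixels keep the frame
    value, black (0) mask pixels zero it out.
    """
    if len(frame) != len(mask) or any(len(fr) != len(mr) for fr, mr in zip(frame, mask)):
        raise ValueError("frame and mask must have the same size")
    return [
        [[f & m for f, m in zip(fpx, mpx)] for fpx, mpx in zip(frow, mrow)]
        for frow, mrow in zip(frame, mask)
    ]


WHITE, BLACK = [255, 255, 255], [0, 0, 0]
frame = [[[10, 200, 30], [90, 80, 70]],
         [[5, 6, 7], [250, 128, 64]]]
mask = [[WHITE, BLACK],
        [BLACK, WHITE]]

print(apply_mask(frame, mask))
# [[[10, 200, 30], [0, 0, 0]], [[0, 0, 0], [250, 128, 64]]]
```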

```python
from ultralytics import YOLO
import cv2
import math
from cv_utils import *  # helper module from the earlier pages (provides putTextRect)

cap = cv2.VideoCapture("../cars.mp4")  # for video

# For mask: must have the same size as the video frames (white = keep, black = hide)
mask = cv2.imread("../car_counter_mask1.png")
if mask is None:
    raise FileNotFoundError("could not read ../car_counter_mask1.png")

win_name = "Car Counter"

model = YOLO("../Yolo-Weights/yolov8l.pt")

# List of class names
classNames = ["person", "bicycle", "car", "motorbike", "aeroplane", "bus", "train", "truck", "boat",
              "traffic light", "fire hydrant", "stop sign", "parking meter", "bench", "bird", "cat",
              "dog", "horse", "sheep", "cow", "elephant", "bear", "zebra", "giraffe", "backpack", "umbrella",
              "handbag", "tie", "suitcase", "frisbee", "skis", "snowboard", "sports ball", "kite", "baseball bat",
              "baseball glove", "skateboard", "surfboard", "tennis racket", "bottle", "wine glass", "cup",
              "fork", "knife", "spoon", "bowl", "banana", "apple", "sandwich", "orange", "broccoli",
              "carrot", "hot dog", "pizza", "donut", "cake", "chair", "sofa", "pottedplant", "bed",
              "diningtable", "toilet", "tvmonitor", "laptop", "mouse", "remote", "keyboard", "cell phone",
              "microwave", "oven", "toaster", "sink", "refrigerator", "book", "clock", "vase", "scissors",
              "teddy bear", "hair drier", "toothbrush"
              ]

while cap.isOpened():
    success, frame = cap.read()  # read frame from video

    if success:  # only process the frame if it was read successfully
        imgRegion = cv2.bitwise_and(frame, mask)  # place mask over frame

        # now we send the masked region to the model instead of the frame
        results = model(imgRegion, stream=True)

        # Box extraction
        for r in results:
            boxes = r.boxes
            for box in boxes:
                x1, y1, x2, y2 = box.xyxy[0]
                x1, y1, x2, y2 = int(x1), int(y1), int(x2), int(y2)  # convert values to integers
                # we can also use a function from cvzone/utils.py called
                # cvzone.cornerRect(img, (x1, y1, w, h))

                # extract the confidence level
                conf = math.ceil(box.conf[0] * 100) / 100

                # extract class ID
                cls = int(box.cls[0])
                wantedClass = classNames[cls]

                # filter out unwanted classes and low-confidence detections
                if wantedClass in ("car", "bus", "truck") and conf > 0.3:
                    # draw on the original frame; coordinates match because imgRegion has the same size
                    cv2.rectangle(frame, (x1, y1), (x2, y2), (0, 255, 0), 3)
                    # display both conf & class name on frame (label scaled down, it was too big)
                    putTextRect(frame, f'{conf} {classNames[cls]}', (max(0, x1), max(35, y1)), scale=0.6, thickness=1, offset=5)

        cv2.imshow(win_name, frame)  # display frame
        cv2.imshow("MaskedRegion", imgRegion)  # display mask over frame

        key = cv2.waitKey(0)  # wait indefinitely for a key press
        if key == ord(" "):  # space bar: display the next frame
            continue
        elif key == 27:  # ESC: exit
            break
        # any other key also moves on to the next frame

    else:
        break  # end of video reached

# Release video capture object and close display window
cap.release()
cv2.destroyAllWindows()
```

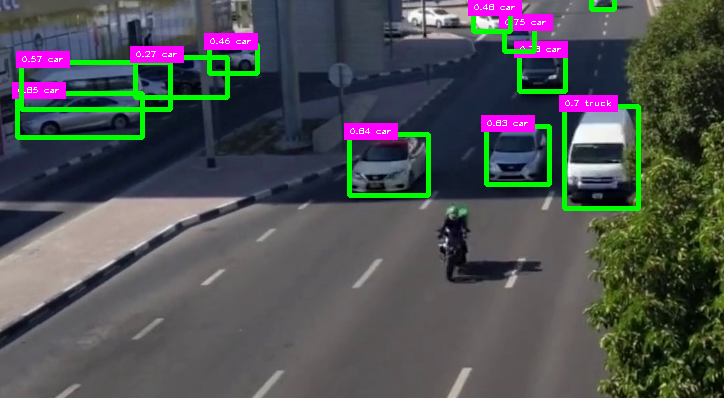

- If we comment out the line below, the separate "MaskedRegion" window is no longer shown and only the "Car Counter" window remains:

```python
cv2.imshow("MaskedRegion", imgRegion)  # display mask over frame
```

Count Cars

- To count cars, we need to define an area (such as a line across the road) so that each time an object passes over it, the counter adds one to its total.

- Once again, refer to the Count Objects page for more details.
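The Count Objects page has the details. As a rough idea of the logic, here is a pure-Python sketch under stated assumptions:

- `LineCounter` is a hypothetical helper, not part of Ultralytics or OpenCV.
- It assumes a tracker already gives each car a stable ID from frame to frame. Examples are the SORT tracker, or Ultralytics' `model.track(frame, persist=True)`, which exposes IDs through `box.id`.
- A car is counted when its box centre falls within a small band around a horizontal line.
- The set of counted IDs makes sure each car is counted only once.

```python
class LineCounter:
    """Count each tracked object once when its centre reaches a horizontal line.

    Hypothetical helper: assumes stable integer track IDs. The line runs from
    (x_start, y) to (x_end, y); band is the tolerance in pixels above/below it.
    """

    def __init__(self, x_start, x_end, y, band=15):
        self.x_start, self.x_end, self.y, self.band = x_start, x_end, y, band
        self.counted_ids = set()

    def update(self, track_id, x1, y1, x2, y2):
        """Feed one tracked box; returns True the first time this ID is on the line."""
        cx, cy = (x1 + x2) / 2, (y1 + y2) / 2
        on_line = (self.x_start < cx < self.x_end
                   and self.y - self.band < cy < self.y + self.band)
        if on_line and track_id not in self.counted_ids:
            self.counted_ids.add(track_id)
            return True
        return False

    @property
    def total(self):
        return len(self.counted_ids)


# Hypothetical tracks: car 1 drives across the line over three frames,
# car 2 is parked on the shoulder and never reaches it.
counter = LineCounter(x_start=400, x_end=680, y=300)
frames = [
    [(1, 480, 220, 560, 280), (2, 40, 250, 120, 310)],
    [(1, 480, 265, 560, 325), (2, 40, 250, 120, 310)],
    [(1, 480, 270, 560, 330), (2, 40, 250, 120, 310)],
]
for boxes in frames:
    for track_id, *xyxy in boxes:
        counter.update(track_id, *xyxy)

print(counter.total)  # 1
```

The band matters because a car moves several pixels between frames. Testing for an exact `cy == y` could skip the line entirely. The ID set stops the same car from being counted again on the following frames while it is still inside the band.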