import os

import cv2

import sys

from zipfile import ZipFile

from urllib.request import urlretrieve

# ========================-Downloading Assets-========================

def download_and_unzip(url, save_path):
    print("Downloading and extracting assets....", end="")

    # Download the ZIP file using the urllib package.
    urlretrieve(url, save_path)

    try:
        # Extract the ZIP file using the zipfile package.
        with ZipFile(save_path) as z:
            # Extract the ZIP contents into the same directory.
            z.extractall(os.path.split(save_path)[0])

        print("Done")

    except Exception as e:
        print("\nInvalid file.", e)

URL = r"https://www.dropbox.com/s/efitgt363ada95a/opencv_bootcamp_assets_12.zip?dl=1"

asset_zip_path = os.path.join(os.getcwd(), "opencv_bootcamp_assets_12.zip")

# Download the asset ZIP if it does not already exist.
if not os.path.exists(asset_zip_path):
    download_and_unzip(URL, asset_zip_path)

# ====================================================================

# Set the device index for the camera as we have in prior pages

s = 0

if len(sys.argv) > 1:
    s = sys.argv[1]

# Create a video capture object & set a window output

source = cv2.VideoCapture(s)

win_name = "Camera Output"

cv2.namedWindow(win_name, cv2.WINDOW_NORMAL)

# Load a pretrained model stored in Caffe format. Caffe is one of several deep learning frameworks (TensorFlow and PyTorch are others).
# readNetFromCaffe takes two arguments:
#   1: the .prototxt file, which contains the network information (architecture)
#   2: the .caffemodel file, which contains the weights the model was trained to

net = cv2.dnn.readNetFromCaffe("deploy.prototxt", "res10_300x300_ssd_iter_140000_fp16.caffemodel")

# readNetFromCaffe returns a network instance, which we use later to run inference.

# Model parameters: these reflect how the model was trained, so we must use the same values.
in_width = 300
in_height = 300
mean = [104, 117, 123]  # per-channel mean subtracted from each pixel
conf_threshold = 0.7  # minimum confidence we choose to accept a detection

# Read the frames one by one as the camera produces them, until Esc (key code 27) is pressed.
while cv2.waitKey(1) != 27:
    has_frame, frame = source.read()
    if not has_frame:
        break

    frame = cv2.flip(frame, 1)  # mirror the frame to match how we see ourselves on the display
    frame_height = frame.shape[0]  # retrieve the size of the video frame dynamically
    frame_width = frame.shape[1]

    # Create a 4D blob from the frame. This preprocessing puts the input image
    # into the shape the model expects.
    # arg1: the input frame. arg2: scale factor, matching the value in the YAML file.
    # arg3: target width and height. arg4: mean value subtracted from the image.
    # swapRB=False because Caffe and OpenCV use the same channel order (BGR).
    # crop=False means the image is resized to 300x300 rather than cropped.
    blob = cv2.dnn.blobFromImage(frame, 1.0, (in_width, in_height), mean, swapRB=False, crop=False)

    # Run the model: pass the blob as input, then do a forward pass.
    net.setInput(blob)
    detections = net.forward()  # performs inference on the blob passed to the network

    # Loop over all detections and read the confidence of each one.
    for i in range(detections.shape[2]):
        confidence = detections[0, 0, i, 2]
        if confidence > conf_threshold:
            # Coordinates are normalized, so scale them to the frame size.
            x_left_bottom = int(detections[0, 0, i, 3] * frame_width)
            y_left_bottom = int(detections[0, 0, i, 4] * frame_height)
            x_right_top = int(detections[0, 0, i, 5] * frame_width)
            y_right_top = int(detections[0, 0, i, 6] * frame_height)

            # Draw a box around the detection.
            cv2.rectangle(frame, (x_left_bottom, y_left_bottom), (x_right_top, y_right_top), (0, 255, 0))

            # Build the confidence label and measure its size.
            label = "Confidence: %.4f" % confidence
            label_size, base_line = cv2.getTextSize(label, cv2.FONT_HERSHEY_SIMPLEX, 0.5, 1)

            # Draw a filled white background, then the label text on top of it.
            cv2.rectangle(
                frame,
                (x_left_bottom, y_left_bottom - label_size[1]),
                (x_left_bottom + label_size[0], y_left_bottom + base_line),
                (255, 255, 255),
                cv2.FILLED,
            )
            cv2.putText(frame, label, (x_left_bottom, y_left_bottom), cv2.FONT_HERSHEY_SIMPLEX, 0.5, (0, 0, 0))

    # Get the time the inference took, convert it to milliseconds,
    # annotate the frame, then display it with imshow.
    t, _ = net.getPerfProfile()
    label = "Inference time: %.2f ms" % (t * 1000.0 / cv2.getTickFrequency())
    cv2.putText(frame, label, (0, 15), cv2.FONT_HERSHEY_SIMPLEX, 0.5, (0, 255, 0))
    cv2.imshow(win_name, frame)

source.release()

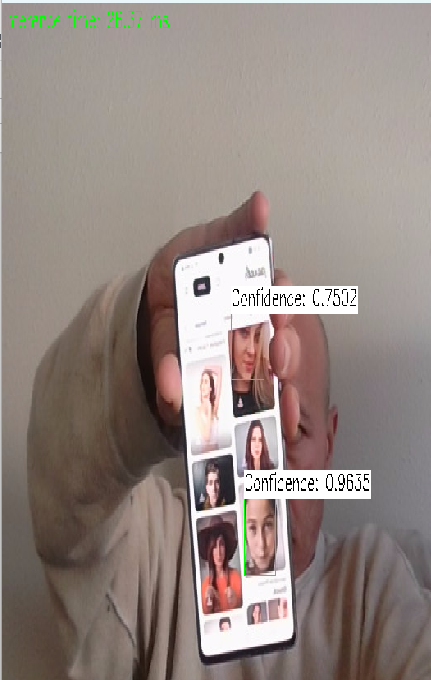

cv2.destroyWindow(win_name)Face Detection - CAFFE

- We will use a pre-trained neural network to detect faces

- OpenCV cannot be used to train a neural network, but it can run inference with an already trained one

- Although we download the weights file as part of the assets, it can also be downloaded from the internet with the download_models.py script found in opencv/opencv on GitHub

- That page also contains a script showing how to use OpenCV to download various models

- That script also references a YAML file that lists the model used, the URL for downloading the weights file, and how the model was trained
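The training parameters mentioned in the last bullet (input size, mean, scale factor) are the values that `cv2.dnn.blobFromImage` applies before inference. As a rough illustration of that arithmetic only, here is a pure-Python sketch. The helper `to_blob` is hypothetical (it is not part of OpenCV), and it assumes the image has already been resized. It subtracts the per-channel mean, multiplies by the scale factor, and rearranges the pixels from height x width x channel into the 1 x 3 x H x W layout the network consumes.

```python
def to_blob(image, mean, scale=1.0):
    """Mimic the arithmetic of blobFromImage for an already-resized image.

    image: H x W x 3 nested list in BGR order (hypothetical toy input).
    Returns a nested list shaped [1][3][H][W] (NCHW), where each value is
    scale * (pixel - mean[channel]).
    """
    height = len(image)
    width = len(image[0])
    planes = []
    for c in range(3):
        # One H x W plane per color channel.
        plane = [
            [scale * (image[y][x][c] - mean[c]) for x in range(width)]
            for y in range(height)
        ]
        planes.append(plane)
    return [planes]  # leading batch dimension of size 1


mean = [104, 117, 123]
tiny = [[[104, 117, 123], [114, 127, 133]]]  # a 1 x 2 image, BGR order
blob = to_blob(tiny, mean)
print(len(blob), len(blob[0]), len(blob[0][0]), len(blob[0][0][0]))
```

With a scale factor of 1.0, as in the script, only the mean subtraction changes the pixel values; the real function additionally resizes the frame to 300x300.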

As the image above shows, the detector even picks up faces displayed on the phone
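To make the detection step easier to follow outside the camera loop, the sketch below decodes detection rows into pixel boxes without needing OpenCV. The function `decode_detections` and the sample rows are hypothetical. It assumes each row follows the 7-value layout the script indexes into, with the confidence at index 2 and normalized corner coordinates at indices 3 to 6. The clamping step is an addition not present in the script, included on the assumption that predicted coordinates may fall slightly outside the frame.

```python
def decode_detections(rows, frame_width, frame_height, conf_threshold=0.7):
    """Convert normalized detection rows into pixel boxes.

    Each row is assumed to be
    [image_id, class_id, confidence, x1, y1, x2, y2]
    with the four coordinates normalized to the range [0, 1].
    """
    boxes = []
    for row in rows:
        confidence = row[2]
        if confidence <= conf_threshold:
            continue  # skip weak detections, as the script does
        x1 = int(row[3] * frame_width)
        y1 = int(row[4] * frame_height)
        x2 = int(row[5] * frame_width)
        y2 = int(row[6] * frame_height)
        # Clamp to the frame bounds (an addition, not in the original script).
        x1, x2 = max(0, x1), min(frame_width - 1, x2)
        y1, y2 = max(0, y1), min(frame_height - 1, y2)
        boxes.append((x1, y1, x2, y2, confidence))
    return boxes


sample_rows = [
    [0, 1, 0.95, 0.25, 0.25, 0.75, 0.5],   # strong detection, kept
    [0, 1, 0.30, 0.10, 0.10, 0.20, 0.20],  # below the threshold, dropped
]
boxes = decode_detections(sample_rows, frame_width=640, frame_height=480)
print(boxes)
```

In the real script the rows come from `detections[0, 0, i, :]`, and the frame size is read from `frame.shape`.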