Here is a proposed data architecture for an online retail company that meets the specified requirements:

Data Architecture Overview

The data architecture will consist of the following components:

Data Warehouse

Data Store

Data Access Layer

Data Security and Access Control

Data Quality and Governance

Data Warehouse

Purpose: To store and manage large amounts of data in a centralized location, providing a single source of truth for all business data.

Components:

Data Store: A relational database management system (RDBMS) that stores data in a structured and normalized manner.

Data Catalog: A metadata repository that provides information about the data, including data definitions, data relationships, and data quality metrics.

Data Quality Engine: A process that ensures data accuracy, completeness, and consistency.

Data Store

Purpose: To store data in a relational database management system (RDBMS) that is optimized for large-scale data storage and retrieval.

Components:

Database: A relational database management system (RDBMS) such as MySQL, PostgreSQL, or Oracle.

Data Storage: A database schema that defines the structure of the data, including tables, columns, and relationships between tables.

Data Schema: A database schema that defines the data structure and relationships between tables.

Data Access Layer

Purpose: To provide a layer of abstraction between the data store and the data access layer, allowing for secure and efficient data retrieval and manipulation.

Components:

Data Access Service: A web-based interface that provides a simple and intuitive way for employees to access the data store.

Data Access API: A RESTful API that provides a standardized interface for data access and manipulation.

Data Security: A layer that ensures data access is secure and authenticated.

Data Security and Access Control

Purpose: To ensure that only authorized employees can access the data store and data access layer.

Components:

Authentication: A system that verifies user identity and authorizes access to the data store and data access layer.

Authorization: A system that controls access to specific data and data access methods.

Data Encryption: A layer that encrypts data in transit and at rest to protect against unauthorized access.

Data Quality and Governance

Purpose: To ensure the accuracy, completeness, and consistency of the data in the data store.

Components:

Data Quality Engine: A process that ensures data accuracy, completeness, and consistency.

Data Validation: A system that validates data against predefined rules and constraints.

Data Auditing: A system that tracks changes to the data and provides audit trails.

Data Flow

Data Ingestion: Data is ingested from various sources, such as databases, files, and APIs.

Data Processing: Data is processed and transformed into a standardized format for storage in the data store.

Data Storage: Data is stored in the data store.

Data Access: Data is accessed through the data access layer.

Data Retrieval: Data is retrieved from the data store and processed for analysis or reporting.

Data Analysis: Data is analyzed and insights are generated.

Data Reporting: Data is presented to stakeholders through reports and dashboards.

Data Access Layer

GET /data: Returns a list of all data in the data store.

GET /data/{id}: Returns a single record of data with the specified ID.

POST /data: Creates a new record of data.

PUT /data/{id}: Updates an existing record of data.

DELETE /data/{id}: Deletes a record of data.

Data Security

Authentication: Verifies user identity and authorizes access to the data store.

Authorization: Controls access to specific data and data access methods.

Data Encryption: Encrypts data in transit and at rest to protect against unauthorized access.

Data Quality

Data Validation: Validates data against predefined rules and constraints.

Data Auditing: Tracks changes to the data and provides audit trails.

This data architecture provides a centralized and secure way to store and manage data for the online retail company, while meeting the specified requirements.DW Schema & ERD

Gen AI - Data Workflow

Objectives

You are a data engineer who has been hired by a European online retail company to design a data workflow for their operations. The company requires that you perform all of the following tasks:

Propose a detailed data architecture for the whole data process.

The client does not want cloud-based processing resources. The company wants an SQL-based central data repository that their employees from multiple countries can access for their use.

Propose a detailed data warehouse schema and design its entity requirements document (ERD).

a. The client wants customer information, seller information, inventory information, and transaction invoice information, to be recorded.

- The client wants the final data prepared such that the final record of sales invoices contains the headers

InvoiceNo,StockCode,Description,Quantity,InvoiceDate,UnitPrice,CustomerID, andCountry.

- The client wants the final data prepared such that the final record of sales invoices contains the headers

Recommend the infrastructure requirements for the proposed data architecture.

Create an ETL pipeline to clean, process, and load the data to an SQL server for analysis. Test the pipeline on a sample database.

a. The recorded data is available at a provided URL.

- The InvoiceNo starting with the character

Cis a credit entry, and you should remove these entries from the records before starting your analysis.

- The StockCode values of

C2,D,M, andPOSTcorrespond to Carraige, Discount, Manual, and Postage entries, none of which are required for analysis.

- The CustomerID is missing from a few entries. Remove these entries from the data before analysis.

- Load the final transaction record to an SQLite3 database

Invoice_Recordsunder the tablePurchase_transactions.

- The InvoiceNo starting with the character

Query the SQL database to access data from the server.

You are required to extract the data of a selected country from the database table created in the previous step.

Implement data analysis and data mining strategies on the final data.

Then, implement Apriori algorithm and perform association rule mining on the data for the specified country.

Data Set

We will use the Online Retail data set available in the UCI ML library, available publically under the CC BY 4.0 license.

Data Architecture

To propose a data architecture for the retail system, you will set up the following guidelines based on the client specifications.

The client does not want to go for cloud based processing resources. They want an SQL-based central data repository that their employees from multiple countries can access for their use.

You can use the GenAI model to propose a data architecture. Try to create a prompt that will give you the expected response.

Prompt 1

Create a data architecture, clearly defining each step of the process, for an online retail company. Please take note of the following specifications.

- The company does not want to use cloud platforms for storage or processing.

- They want an SQL-based central data repository that can be accessed by any employee as per requirements.

Response 1

- Define data sources: Identify all the sources of data within the company, such as sales transactions, customer information, inventory data, and so on.

- Data collection: Set up processes to collect data from the various sources and store it in the on-premises SQL server. This can involve setting up data pipelines to automate the extraction and loading of data into the central repository.

- Data transformation: Clean and transform the data to ensure consistency and quality. This may involve data cleansing, normalization, and standardization to make the data usable for analysis.

- Data modeling: Design the database schema and data model to organize the data in a structured and efficient manner. Define relationships between different data entities to facilitate data retrieval and analysis.

- Data access: Implement access controls and permissions to regulate who can access and modify the data in the central repository. This ensures data security and compliance with privacy regulations.

- Data analysis: Provide tools and interfaces for employees to access and analyze the data stored in the central repository. This can include dashboards, reporting tools, and data visualization techniques to make data insights easily accessible.

- Data backup and recovery: Establish regular data backup procedures to prevent data loss in case of system failures or disasters. Implement disaster recovery plans to ensure quick recovery of data in case of emergencies.

- Data governance: Implement policies and procedures to govern the use and management of data within the company. This includes data quality standards, data retention policies, and data lifecycle management practices.

By following these steps, you can create a comprehensive data architecture for the online retail company that meets their specifications of not using cloud platforms and having an SQL-based central data repository accessible to all employees.

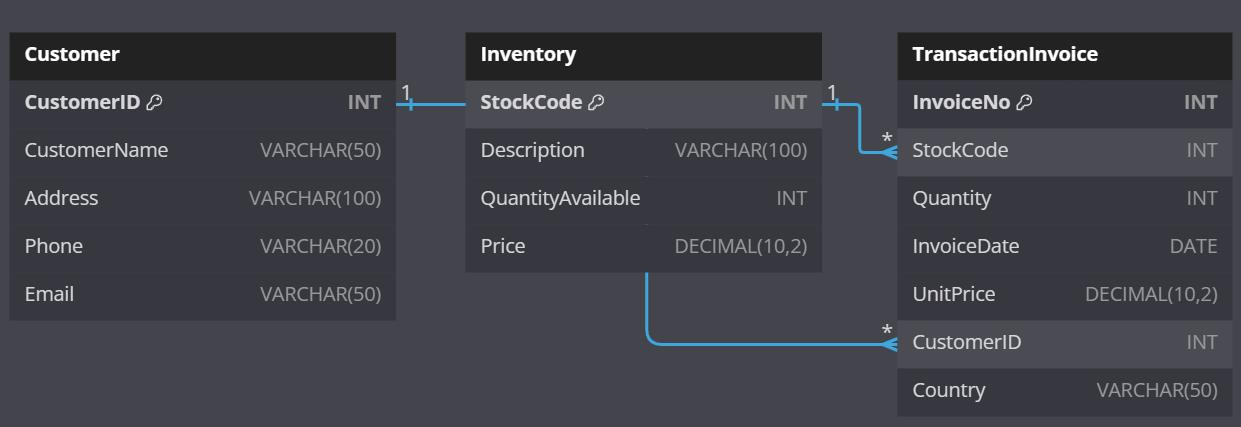

First, you need to set up the data warehouse schema and its ERD diagram. For that task, you need clearly defined requirements from the client as to the kind of data they want recorded. Assume that you received the following information from the client.

- The client wants to record customer information, seller information, inventory information, and transaction invoice information.

- The client wants the final data prepared such that the final record of sales invoices displayes the headers

InvoiceNo,StockCode,Description,Quantity,InvoiceDate,UnitPrice,CustomerIDandCountry.

To define the schema of the data warehouse that meets these requirements, you can now write a prompt on the GenAI platform.

Prompt 2

Propose a data schema for an online retail store that meets the following guidelines.

- Records Customer information, inventory information, and transaction invoice information.

- Prepare the final data such that the final record of sales invoices has the headers

InvoiceNo,StockCode,Description,Quantity,InvoiceDate,UnitPrice,CustomerIDandCountry.

Response 2

To create a data schema for an online retail store that includes customer information, seller information, inventory information, and transaction invoice information, you can design a relational database with the following tables:

- Customer table:

- CustomerID (Primary key)

- CustomerName

- Address

- Phone

- Inventory table:

- StockCode (Primary key)

- Description

- QuantityAvailable

- Price

- Transaction Invoice table:

- InvoiceNo (Primary key)

- StockCode (Foreign key referencing Inventory table)

- Quantity

- InvoiceDate

- UnitPrice

- CustomerID (Foreign key referencing Customer table)

- Country

By setting up this data schema, you can store and manage customer information, inventory details, and transaction invoice data in a structured and organized manner. The final record of sales invoices can be retrieved by querying the Transaction Invoice table with the specified headers: InvoiceNo, StockCode, Description, Quantity, InvoiceDate, UnitPrice, CustomerID, and Country.

Generate SQL Code

You can further use the generative AI platform to generate the SQL codes for creating this warehouse, and use this code to create the ERD on the DbDiagram interface, as explained earlier in the course.

Prompt 3

Generate the SQL code to create this data warehouse

Response 3

-- Create Customer Table

CREATE TABLE Customer (

CustomerID INT PRIMARY KEY,

CustomerName VARCHAR(50),

Address VARCHAR(100),

Phone VARCHAR(20),

Email VARCHAR(50)

);

-- Create Inventory Table

CREATE TABLE Inventory (

StockCode INT PRIMARY KEY,

Description VARCHAR(100),

QuantityAvailable INT,

Price DECIMAL(10, 2)

);

-- Create Transaction Invoice Table

CREATE TABLE TransactionInvoice (

InvoiceNo INT PRIMARY KEY,

StockCode INT,

Quantity INT,

InvoiceDate DATE,

UnitPrice DECIMAL(10, 2),

CustomerID INT,

Country VARCHAR(50),

FOREIGN KEY (StockCode) REFERENCES Inventory(StockCode),

FOREIGN KEY (CustomerID) REFERENCES Customer(CustomerID)

);

Infrastructure Requirements

You now need to define the infrastructure requirements for such a setup. You can make use of the same chat for making this prompt as the GenAI will draw context from the previous responses and give you a tailored response.

You will frame a prompt that can use the context from the previous steps and create the infrastructure requirements for your design.

Prompt 4

Propose a set of infrastructure requirements for different stages of the data workflow based on the data architecture discussed her.

Response 4

Based on the data architecture discussed for the online retail company, the infrastructure requirements for different stages of the data workflow can be outlined as follows:

Data collection:

- Hardware: Servers or devices to collect data from various sources and transfer the data to the central SQL server.

- Software: Data collection tools and scripts to automate the extraction and load the data into the central repository.

- Networking: Reliable network connections to ensure the seamless data transfer between sources and the central repository.

Data transformation:

- Hardware: Sufficient processing power and memory to handle data transformation tasks efficiently.

- Software: Data transformation tools and ETL (Extract, Transform, Load) processes to clean, normalize, and standardize the data.

- Networking: Fast and stable network connections to support data transformation processes.

Data modeling:

- Hardware: Hardware resources to support database schema design and data modeling activities.

- Software: Database management software to define relationships between data entities and optimize data storage.

- Networking: Network connectivity to access and manage the database schema.

Data access:

Hardware: Devices and systems to access the central data repository for analysis and reporting.

Software: Data visualization tools, reporting software, and query interfaces for employees to access and analyze data.

Networking: Secure network connections to ensure data access controls and permissions are enforced.

Data backup and recovery:

- Hardware: Backup storage devices or systems to store copies of data for disaster recovery purposes.

- Software: Backup and recovery software to automate data backup processes and ensure data integrity.

- Networking: Network connections to facilitate data backup and recovery operations.

Data security:

- Hardware: Security appliances or devices to protect the data warehouse from unauthorized access.

- Software: Encryption software, access control mechanisms, and data security tools to secure sensitive data.

- Networking: Secure network configurations, firewalls, and monitoring tools to detect and prevent security breaches.

By addressing these infrastructure requirements at each stage of the data workflow, the online retail company can ensure the efficiency, security, and reliability of their data architecture and operations.

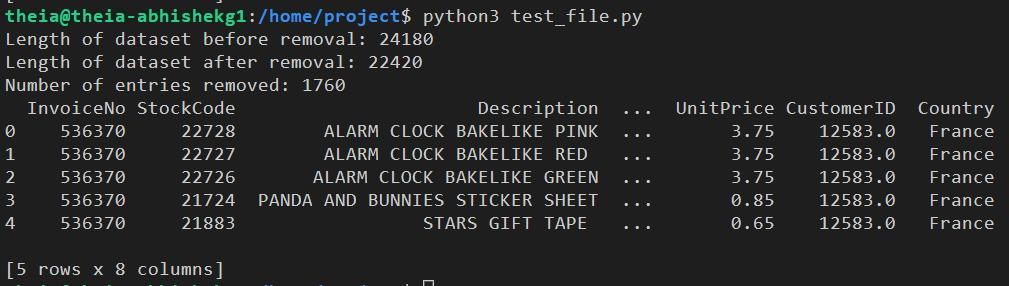

ETL Workflow

At this point, you can assume that the central data collection has taken place and the invoice details are available as a CSV file on remote server. You need to create an ETL pipeline that reads this file, cleans the data based on the client specifications and loads the data to a SQL server. Following specifications for this task have been shared.

Data

- InvoiceNo starting with the character

Cis a credit entry and should be removed from the record before analysis. - StockCode values of

C2,D,M, andPOSTcorrespond to Carraige, Discount, Manual and Postage entries, all of which are not required for our analysis. - There are a few entries where the CustomerID is missing. Such entries can be removed from the data before your analysis.

- Load the final transaction record to an SQLite3 database

Invoice_Recordsunder the tablePurchase_transactions.

Prompt 5

Create a prompt and use it on the generative AI platform to generate a Python code that can create the required data processing pipeline.

For the transactional data table, create a python code that does the following.

- Read the CSV data from a URL using Pandas

- Remove entries where

- InvoiceNo starts with the character ‘C’

- StockCode is either ‘M’, ‘D’, ‘C2’ or ‘POST’

- CustomerID is missing. Print the length of the dataset before and after removal.

- Load the final data to an SQLIte3 database named ‘Invoice_Records’ as a table ‘Purchase_transactions’.

- Run a sample query to display the first 5 rows of the table.

Response 5

import pandas as pd

import sqlite3

# Read the CSV data from a URL

url = "insert_your_csv_url_here"

data = pd.read_csv(url)

# Print the length of the dataset before removal

print("Length of dataset before removal:", len(data))

# Remove entries that meet the specified conditions

initial_length = len(data)

data = data[~data['InvoiceNo'].str.startswith('C')]

data = data[~data['StockCode'].isin(['M', 'D', 'C2', 'POST'])]

data = data.dropna(subset=['CustomerID'])

# Print the length of the dataset after removal

print("Length of dataset after removal:", len(data))

print("Number of entries removed:", initial_length - len(data))

# Load the final data to an SQLite3 database

conn = sqlite3.connect('Invoice_Records.db')

data.to_sql('Purchase_transactions', conn, if_exists='replace', index=False)

# Run a sample query to display the first 5 rows of the table

query_result = pd.read_sql_query("SELECT * FROM Purchase_transactions LIMIT 5;", conn)

print(query_result)

conn.close()Modify Code

import pandas as pd

import sqlite3

# Read the CSV data from a URL

url = "https://cf-courses-data.s3.us.cloud-object-storage.appdomain.cloud/IBMSkillsNetwork-AI0273EN-SkillsNetwork/labs/v1/m3/data/Project_data.csv"

data = pd.read_csv(url)

# Print the length of the dataset before removal

print("Length of dataset before removal:", len(data))

# Remove entries that meet the specified conditions

initial_length = len(data)

data = data[~data['InvoiceNo'].str.startswith('C')]

data = data[~data['StockCode'].isin(['M', 'D', 'C2', 'POST'])]

data = data.dropna(subset=['CustomerID'])

# Print the length of the dataset after removal

print("Length of dataset after removal:", len(data))

print("Number of entries removed:", initial_length - len(data))

# Load the final data to an SQLite3 database

conn = sqlite3.connect('Invoice_Records.db')

data.to_sql('Purchase_transactions', conn, if_exists='replace', index=False)

# Run a sample query to display the first 5 rows of the table

query_result = pd.read_sql_query("SELECT * FROM Purchase_transactions LIMIT 5;", conn)

print(query_result)

conn.close()

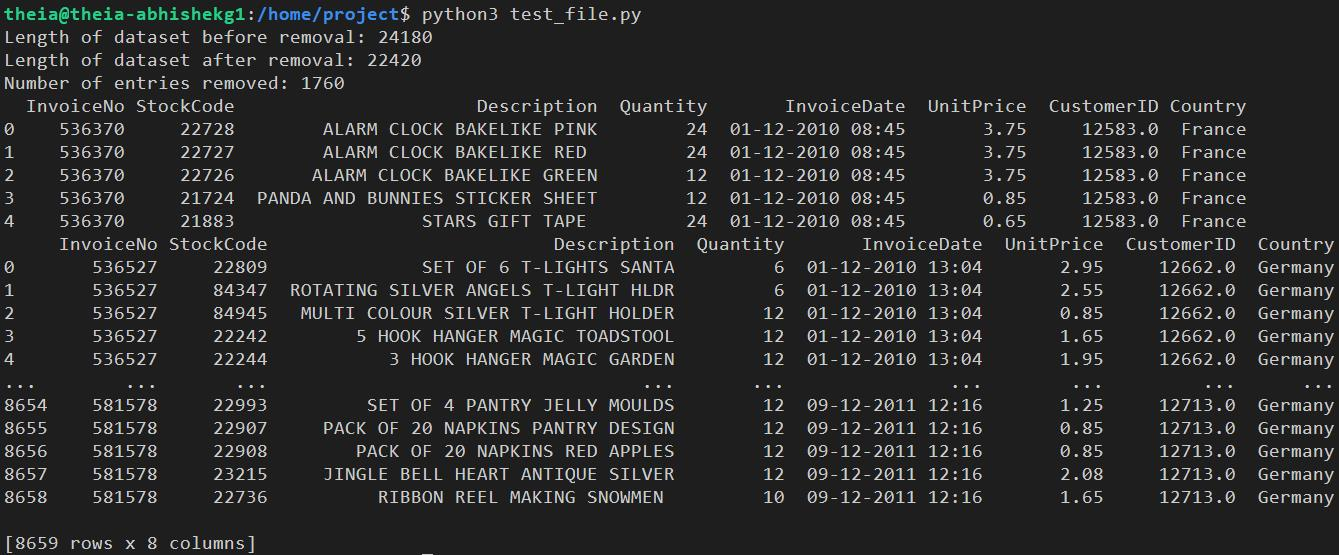

Querying DB

After the data is available on the central data repository, you can use SQL queries to extract the data directly into your Python coding interface. For the next part of your project, you are required to extract the data of a specific country–let’s use Germany. Use the generative AI model to create a code snippet that you can add to your previous code in the testing interface and that will run a SQL query on the data to extract the details transactions for the specified country.

Prompt 6

Create a python code that will extract all records from the table ‘Purchase_transactions’ under the database ‘Invoice_records’, such that the Country parameter is set to Germany. The resulting record should be saved in a dataframe.

Response 6

import sqlite3

import pandas as pd

# Connect to the database

conn = sqlite3.connect('Invoice_records.db')

# Execute a query to select all records from the 'Purchase_transactions' table with Country set to Germany

query = "SELECT * FROM Purchase_transactions WHERE Country = 'Germany'"

df = pd.read_sql(query, conn)

# Print the dataframe

print(df)

# Close the connection

conn.close()Modify Code

import pandas as pd

import sqlite3

# Read the CSV data from a URL

url = "https://cf-courses-data.s3.us.cloud-object-storage.appdomain.cloud/IBMSkillsNetwork-AI0273EN-SkillsNetwork/labs/v1/m3/data/Project_data.csv"

data = pd.read_csv(url)

# Print the length of the dataset before removal

print("Length of dataset before removal:", len(data))

# Remove entries that meet the specified conditions

initial_length = len(data)

data = data[~data['InvoiceNo'].str.startswith('C')]

data = data[~data['StockCode'].isin(['M', 'D', 'C2', 'POST'])]

data = data.dropna(subset=['CustomerID'])

# Print the length of the dataset after removal

print("Length of dataset after removal:", len(data))

print("Number of entries removed:", initial_length - len(data))

# Load the final data to an SQLite3 database

conn = sqlite3.connect('Invoice_Records.db')

data.to_sql('Purchase_transactions', conn, if_exists='replace', index=False)

# Run a sample query to display the first 5 rows of the table

query_result = pd.read_sql_query("SELECT * FROM Purchase_transactions LIMIT 5;", conn)

print(query_result)

query = "SELECT * FROM Purchase_transactions WHERE Country IN ('Germany')"

records = pd.read_sql(query, conn)

# Print the extracted records

print(records)

conn.close()

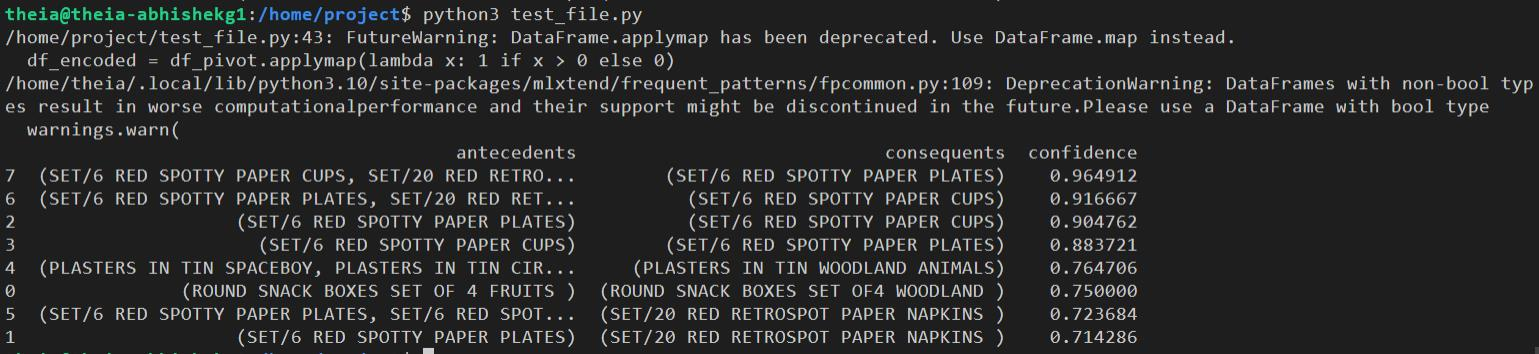

Data Analysis & Mining

One of the most relevant and important techniques for analyzing transactional data is association rule mining. You are required to implement an Apriori algorithm to mine association rules from the data extracted in the previous step. As a result, you will be able to identify the items that have the most likelihood to be purchased together. This information is necessary for the company to efficiently develop their marketing and advertising strategies.

You can use generative AI to create the code for implementation. You can write a prompt that creates code for the implementation of Apriori algorithm for association rule mining on the extracted data frame.

Consider the following prompt that describes the different steps involved in implementing an Apriori algorithm.

Prompt 7

For the data frame extracted here, write a python code to execute the apriori algorithm and extract association rules for the given set of invoices. For this outcome, perform the following steps:

- Group the records by InvoiceID and Description, along with their total quantities

- Unpack the data into a table, making the InvoiceNo, the row indexes, Unique descriptions as column indexes and the total quantities, the values.

- Apply one-hot encoding on this table, making the value True if the Item description existed in the invoice and False if it didn’t exist in the invoice.

- Perfrom Apriori algorithm on this data and extract the required association rules.

Response 7

- Group the records by InvoiceID and Description, along with their total quantities:

import sqlite3

import pandas as pd

# Connect to the database

conn = sqlite3.connect('Invoice_records.db')

# Execute a query to select all records from the 'Purchase_transactions' table

query = "SELECT InvoiceID, Description, SUM(Quantity) AS TotalQuantity FROM Purchase_transactions GROUP BY InvoiceID, Description"

df_grouped = pd.read_sql_query(query, conn)

# Close the connection

conn.close()- Unpack the data into a table with the InvoiceNo” items as row indexes, Unique descriptions as column indexes, and total quantities as values

df_pivot = df_grouped.pivot(index='InvoiceNo', columns='Description', values='TotalQuantity').fillna(0)- Apply one-hot encoding on this table:

df_encoded = df_pivot.applymap(lambda x: 1 if x > 0 else 0)- Perform the Apriori algorithm using this data and extract the association rules:

from mlxtend.frequent_patterns import apriori

from mlxtend.frequent_patterns import association_rules

# Apply the Apriori algorithm to find frequent itemsets

frequent_itemsets = apriori(df_encoded, min_support=0.1, use_colnames=True)

# Generate association rules from the frequent itemsets

rules = association_rules(frequent_itemsets, metric="confidence", min_threshold=0.7)

# Print the association rules

print(rules)Modify Code

import pandas as pd

import sqlite3

from mlxtend.frequent_patterns import apriori

from mlxtend.frequent_patterns import association_rules

# Read the CSV data from a URL

url = "https://cf-courses-data.s3.us.cloud-object-storage.appdomain.cloud/IBMSkillsNetwork-AI0273EN-SkillsNetwork/labs/v1/m3/data/Project_data.csv"

data = pd.read_csv(url)

# Print the length of the dataset before removal

#print("Length of data set before removal:", len(data))

# Remove entries that meet the specified conditions

initial_length = len(data)

data = data[~data['InvoiceNo'].str.startswith('C')]

data = data[~data['StockCode'].isin(['M', 'D', 'C2', 'POST'])]

data = data.dropna(subset=['CustomerID'])

# Print the length of the data set after removal

#print("Length of data set after removal:", len(data))

#print("Number of entries removed:", initial_length - len(data))

# Load the final data to an SQLite3 database

conn = sqlite3.connect('Invoice_Records.db')

data.to_sql('Purchase_transactions', conn, if_exists='replace', index=False)

# Run a sample query to display the first 5 rows of the table

query_result = pd.read_sql_query("SELECT * FROM Purchase_transactions LIMIT 5;", conn)

#print(query_result)

query = "SELECT * FROM Purchase_transactions WHERE Country IN ('Germany')"

records = pd.read_sql(query, conn)

# Print the extracted records

#print(records)

# Execute a query to select all records from the 'Purchase_transactions' table

query = "SELECT InvoiceNo, Description, SUM(Quantity) AS TotalQuantity FROM Purchase_transactions GROUP BY InvoiceNo, Description"

df_grouped = pd.read_sql(query, conn)

df_pivot = df_grouped.pivot(index='InvoiceNo', columns='Description', values='TotalQuantity').fillna(0)

df_encoded = df_pivot.applymap(lambda x: 1 if x > 0 else 0)

# Apply the Apriori algorithm to find frequent itemsets

frequent_itemsets = apriori(df_encoded, min_support=0.05, use_colnames=True)

# Generate association rules from the frequent itemsets

rules = association_rules(frequent_itemsets, metric="confidence", min_threshold=0.7)

# Sort the association rules in descending order of confidence

rules = rules.sort_values(by='confidence', ascending=False)

# Print the association rules

print(rules[['antecedents','consequents','confidence']])

conn.close()