Llama Setup

Local Computer

The smaller Llama 2 and Llama 3 chat models are free to download and run on your own machine!

- Only the Llama 2 7B chat model or the Llama 3 8B model is likely to run well locally (by default, the 4-bit quantized version is downloaded).

- Larger models may require too much memory (13B models generally require at least 16GB of RAM, and 70B models at least 64GB) and may run too slowly.

- The Meta team still recommends using a hosted API service (the classroom uses Together.AI) because it gives you access to all the available Llama models without being limited by your hardware.

- You can find more instructions on using the Together.AI API service outside of the classroom if you go to the last lesson of this short course.

- One way to install and use Llama 2 7B or Llama 3 8B on your computer is to go to https://ollama.com/ and download the app. It installs like a regular application.

- To use Llama 2 or 3, the full instructions are here: https://ollama.com/library/llama2 and https://ollama.com/library/llama3.
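As a rough illustration of why only the smaller models are practical locally, the sketch below estimates the memory taken by the model weights alone at a given quantization level. The helper name `weight_memory_gb` is hypothetical, and the formula (parameter count times bits per weight) is a back-of-the-envelope assumption rather than anything published by Meta or Ollama. Real memory use is higher, since the runtime and the context cache need space too.

```python
def weight_memory_gb(params_billions: float, bits_per_weight: int) -> float:
    """Approximate memory for the weights alone, in gigabytes (10^9 bytes).

    Assumption: every parameter is stored at the same bit width, and
    runtime overhead (context cache, buffers) is ignored.
    """
    bytes_total = params_billions * 1e9 * bits_per_weight / 8
    return bytes_total / 1e9


# Compare the model sizes mentioned above at 4-bit quantization.
for size in (7, 8, 13, 70):
    print(f"{size}B at 4-bit: ~{weight_memory_gb(size, 4):.1f} GB of weights")
```

Under these assumptions, a 7B model needs a few gigabytes for its weights, while a 70B model needs tens of gigabytes, which is in line with the RAM guidance above.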

Summary

- Follow the installation instructions (for Windows, Mac or Linux).

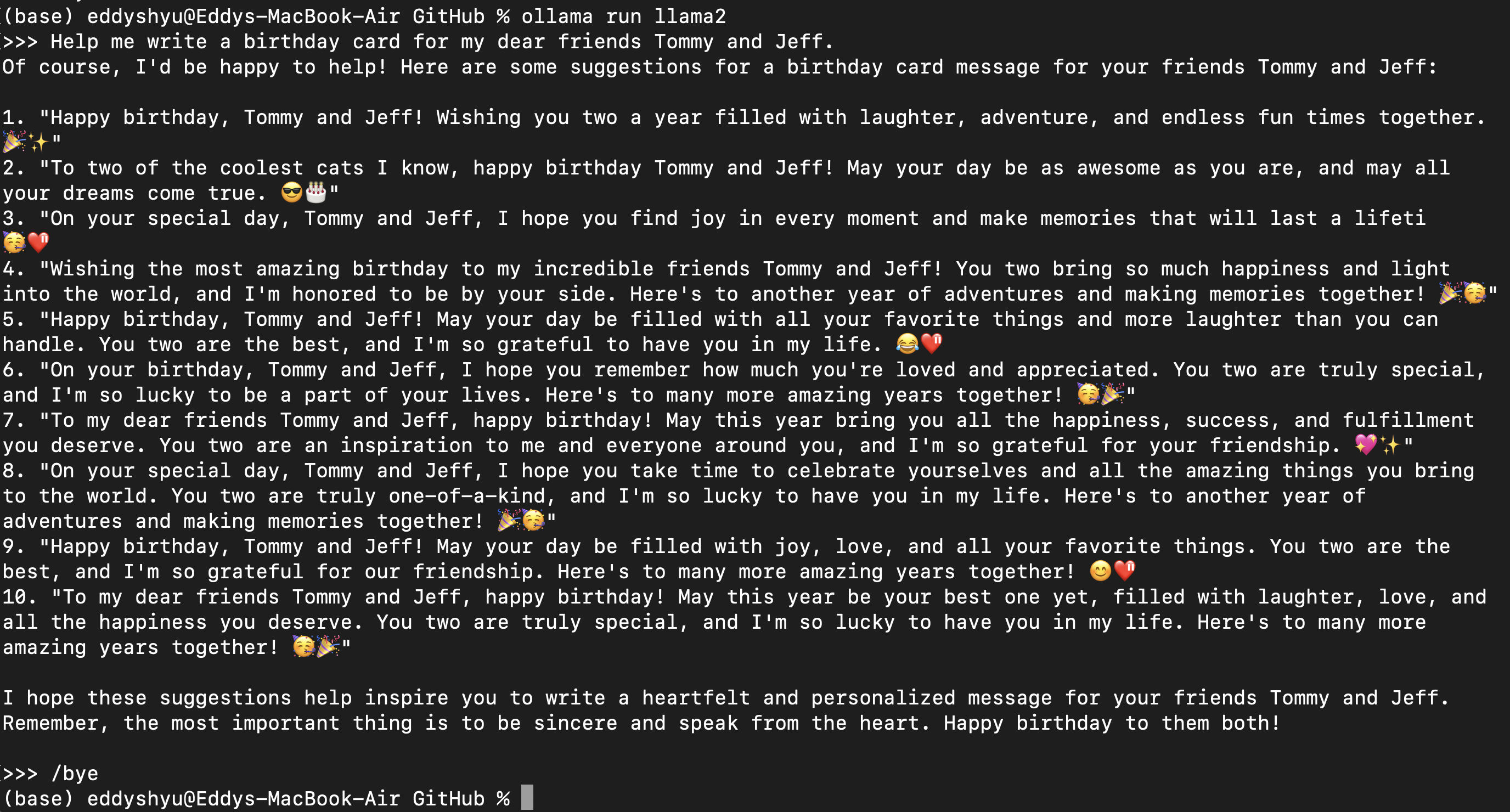

- Open the command line interface (CLI) and type `ollama run llama2` or `ollama run llama3`.

- The first time you do this, it will take some time to download the Llama 2 or 3 model. After that, you’ll see

>>> Send a message (/? for help)

- You can type your prompt, and the Llama 2 or 3 model on your computer will give you a response!

- To exit, type `/bye`.

- For a list of other commands, type `/?`.
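Besides the interactive prompt, the Ollama app also serves a local HTTP API, so the same model can be called from a script. The following is a minimal sketch, assuming the app is running on its default address (`http://localhost:11434`) and that the model has already been downloaded with `ollama run`. The helper names `build_request` and `ask_ollama` are illustrative and not part of Ollama.

```python
import json
import urllib.request

# Assumption: the Ollama server is listening on its default local address.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a POST request for Ollama's generate endpoint."""
    # "stream": False asks for one JSON object instead of a stream of chunks.
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_ollama(model: str, prompt: str, timeout: float = 120.0) -> str:
    """Send the prompt to the local model and return the generated text."""
    with urllib.request.urlopen(build_request(model, prompt), timeout=timeout) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    return body["response"]


# Example (requires the Ollama app to be running):
# print(ask_ollama("llama3", "Tell me a fun fact about llamas."))
```

If the app is not running, `ask_ollama` raises a connection error, so it can help to start an `ollama run` session first.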