import cv2

def putTextRect(img, text, pos, scale=3, thickness=3, colorT=(255, 255, 255),
                colorR=(255, 0, 255), font=cv2.FONT_HERSHEY_PLAIN,
                offset=10, border=None, colorB=(0, 255, 0)):
    """
    Creates Text with Rectangle Background
    :param img: Image to put text rect on
    :param text: Text inside the rect
    :param pos: Starting position of the rect x1,y1
    :param scale: Scale of the text
    :param thickness: Thickness of the text
    :param colorT: Color of the Text
    :param colorR: Color of the Rectangle
    :param font: Font used. Must be cv2.FONT....
    :param offset: Clearance around the text
    :param border: Outline around the rect
    :param colorB: Color of the outline
    :return: image, rect (x1,y1,x2,y2)
    """
    ox, oy = pos
    # Measure the rendered text so the rectangle can wrap it
    (w, h), _ = cv2.getTextSize(text, font, scale, thickness)
    # (x1, y1) is the bottom-left corner and (x2, y2) the top-right corner
    x1, y1, x2, y2 = ox - offset, oy + offset, ox + w + offset, oy - h - offset
    cv2.rectangle(img, (x1, y1), (x2, y2), colorR, cv2.FILLED)
    if border is not None:
        cv2.rectangle(img, (x1, y1), (x2, y2), colorB, border)
    cv2.putText(img, text, (ox, oy), font, scale, colorT, thickness)
    return img, [x1, y2, x2, y1]

Y8 Video

Let’s take the same model and code we used on images on the previous page and run them on a video, using our own computer’s CPU.

Use 8l on CPU

Instead of the live camera feed and still images covered on the previous two pages, we repeat the process on a video file, running on the local CPU first.

Manual BB

To draw the bounding boxes manually with the putTextRect function defined above, we write:

from ultralytics import YOLO

import cv2

#import cvzone

import math

import time

#cap = cv2.VideoCapture(0) # For webcam; the size settings below apply to the webcam only, not to video files

#cap.set(3, 1280)

#cap.set(4, 720)

cap = cv2.VideoCapture("../motorbikes-1.mp4") # For Video

win_name = "Motorbikes-1"

model = YOLO("../Yolo-Weights/yolov8l.pt")

# List of Class names

classNames = ["person", "bicycle", "car", "motorbike", "aeroplane", "bus", "train", "truck", "boat",

"traffic light", "fire hydrant", "stop sign", "parking meter", "bench", "bird", "cat",

"dog", "horse", "sheep", "cow", "elephant", "bear", "zebra", "giraffe", "backpack", "umbrella",

"handbag", "tie", "suitcase", "frisbee", "skis", "snowboard", "sports ball", "kite", "baseball bat",

"baseball glove", "skateboard", "surfboard", "tennis racket", "bottle", "wine glass", "cup",

"fork", "knife", "spoon", "bowl", "banana", "apple", "sandwich", "orange", "broccoli",

"carrot", "hot dog", "pizza", "donut", "cake", "chair", "sofa", "pottedplant", "bed",

"diningtable", "toilet", "tvmonitor", "laptop", "mouse", "remote", "keyboard", "cell phone",

"microwave", "oven", "toaster", "sink", "refrigerator", "book", "clock", "vase", "scissors",

"teddy bear", "hair drier", "toothbrush"

]

while cap.isOpened():

#Read frame from video

success, frame = cap.read()

if success: #if frame is read successfully set the results of the model on the frame

results = model(frame, stream=True)

# Insert Box Extraction section here

for r in results:

boxes = r.boxes

for box in boxes:

x1,y1,x2,y2 = box.xyxy[0]

x1,y1,x2,y2 = int(x1),int(y1),int(x2),int(y2) # convert values to integers

cv2.rectangle(frame, (x1,y1), (x2,y2), (0,255,0), 3)

# we can also use a function from cvzone/utils.py called

#cvzone.cornerRect(img,(x1,y1,w,h))

# extract the confidence level

conf = math.ceil(box.conf[0]*100)/100

# extract class ID

cls = int(box.cls[0])

# display both conf & class ID on frame

putTextRect(frame,f'{conf} {classNames[cls]}',(max(0,x1), max(35,y1)))

# To edit the size & thickness of text use

#putTextRect(frame,f'{classNames[cls]} {conf}',(max(0, x1),max(35,y1)), scale = 2, thickness=1)

cv2.imshow(win_name, frame)

if cv2.waitKey(1) == 27:

break # if user breaks with ESC key

else:

break # if end of video is reached

# Release video capture object and close display window

cap.release()

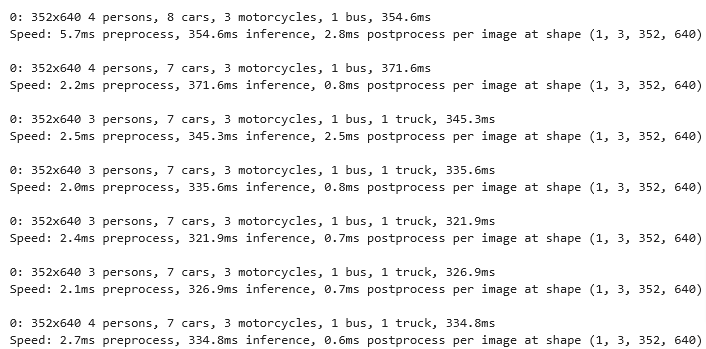

cv2.destroyAllWindows()- In addition to the video being labeled and shown we also get this output

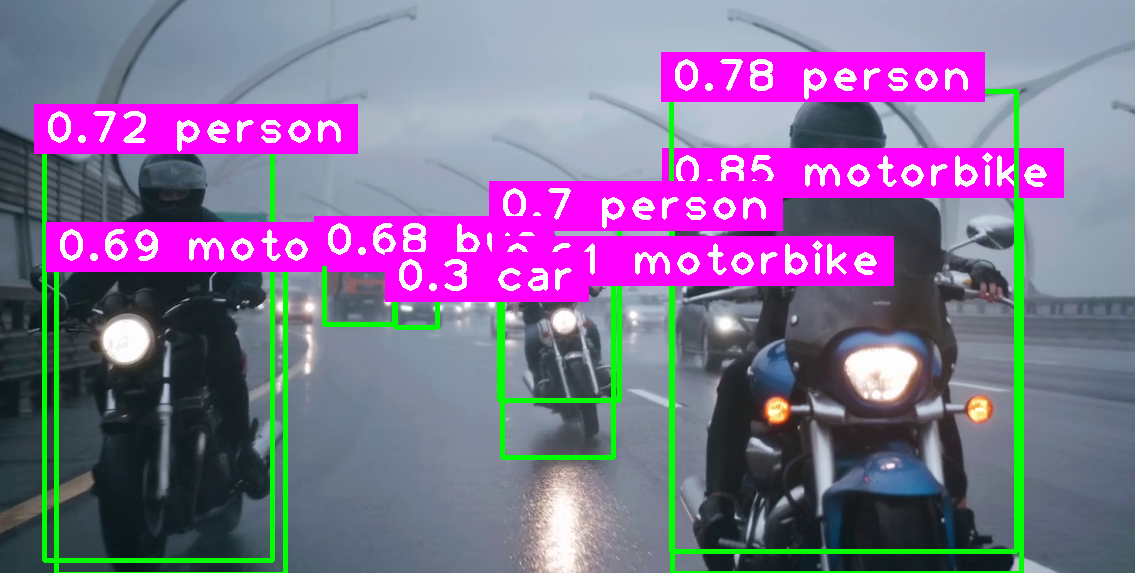
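The label drawn on each box in the script above follows two small rules: the confidence is rounded up to two decimals, and the text anchor is clamped so the label cannot leave the top or left edge of the frame. Here is a minimal sketch of just that logic, without OpenCV. `format_label` and `label_anchor` are hypothetical helper names, not part of cvzone or Ultralytics, and the short `names` list is a stand-in for `classNames`:

```python
import math

def format_label(conf, cls_id, class_names):
    """Build the label text: confidence rounded up to 2 decimals, then the class name."""
    rounded = math.ceil(float(conf) * 100) / 100
    return f"{rounded} {class_names[cls_id]}"

def label_anchor(x1, y1, min_y=35):
    """Clamp the text anchor so the label stays inside the frame (same rule as max(0, x1), max(35, y1))."""
    return max(0, x1), max(min_y, y1)

names = ["person", "bicycle", "car"]  # shortened stand-in for classNames (assumption)
label = format_label(0.8929, 0, names)
anchor = label_anchor(-4, 12)
print(label, anchor)
```

Keeping this logic in small functions makes it easy to change the label layout in one place for all the scripts on this page.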

Use 8n on CPU

from ultralytics import YOLO

import cv2

#import cvzone

import math

import time

#cap = cv2.VideoCapture(0) # For webcam; the size settings below apply to the webcam only, not to video files

#cap.set(3, 1280)

#cap.set(4, 720)

cap = cv2.VideoCapture("../motorbikes-1.mp4") # For Video

win_name = "Motorbikes-1"

model = YOLO("../Yolo-Weights/yolov8n.pt")

# List of Class names

classNames = ["person", "bicycle", "car", "motorbike", "aeroplane", "bus", "train", "truck", "boat",

"traffic light", "fire hydrant", "stop sign", "parking meter", "bench", "bird", "cat",

"dog", "horse", "sheep", "cow", "elephant", "bear", "zebra", "giraffe", "backpack", "umbrella",

"handbag", "tie", "suitcase", "frisbee", "skis", "snowboard", "sports ball", "kite", "baseball bat",

"baseball glove", "skateboard", "surfboard", "tennis racket", "bottle", "wine glass", "cup",

"fork", "knife", "spoon", "bowl", "banana", "apple", "sandwich", "orange", "broccoli",

"carrot", "hot dog", "pizza", "donut", "cake", "chair", "sofa", "pottedplant", "bed",

"diningtable", "toilet", "tvmonitor", "laptop", "mouse", "remote", "keyboard", "cell phone",

"microwave", "oven", "toaster", "sink", "refrigerator", "book", "clock", "vase", "scissors",

"teddy bear", "hair drier", "toothbrush"

]

while cap.isOpened():
    # Read a frame from the video
    success, frame = cap.read()
    if success:  # frame was read successfully, so run the model on it
        results = model(frame, stream=True)
        # Box extraction
        for r in results:
            boxes = r.boxes
            for box in boxes:
                x1, y1, x2, y2 = box.xyxy[0]
                x1, y1, x2, y2 = int(x1), int(y1), int(x2), int(y2)  # convert values to integers
                cv2.rectangle(frame, (x1, y1), (x2, y2), (0, 255, 0), 3)
                # we can also use a function from cvzone/utils.py called
                # cvzone.cornerRect(img, (x1, y1, w, h))
                # extract the confidence level, rounded up to two decimals
                conf = math.ceil(box.conf[0] * 100) / 100
                # extract class ID
                cls = int(box.cls[0])
                # display both conf & class name on frame
                putTextRect(frame, f'{conf} {classNames[cls]}', (max(0, x1), max(35, y1)))
                # To edit the size & thickness of text use
                # putTextRect(frame, f'{classNames[cls]} {conf}', (max(0, x1), max(35, y1)), scale=2, thickness=1)
        cv2.imshow(win_name, frame)
        if cv2.waitKey(1) == 27:
            break  # user pressed the ESC key
    else:
        break  # end of video is reached

# Release video capture object and close display window
cap.release()
cv2.destroyAllWindows()
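The scripts import `time` but never use it. One way to compare yolov8l and yolov8n on the same machine is to time the per-frame work and keep a running average. The sketch below uses a hypothetical `FrameTimer` helper and a short sleep as a stand-in for the model call, so its numbers illustrate the mechanics only and are not measurements from this page:

```python
import time

class FrameTimer:
    """Accumulates wall-clock time over repeated calls (hypothetical helper)."""

    def __init__(self):
        self.total = 0.0   # seconds spent inside measured calls
        self.frames = 0    # number of measured calls

    def measure(self, fn, *args, **kwargs):
        """Run fn, add its duration to the totals, and return its result."""
        start = time.perf_counter()
        result = fn(*args, **kwargs)
        self.total += time.perf_counter() - start
        self.frames += 1
        return result

    @property
    def avg_ms(self):
        return 1000 * self.total / self.frames if self.frames else 0.0

    @property
    def fps(self):
        return self.frames / self.total if self.total > 0 else 0.0

def fake_inference(frame):
    """Stand-in for running the model on one frame (assumption for the demo)."""
    time.sleep(0.01)
    return frame

timer = FrameTimer()
for frame in range(5):
    timer.measure(fake_inference, frame)
print(f"{timer.avg_ms:.1f} ms per frame, {timer.fps:.1f} FPS")
```

In the real loop, note that `model(frame, stream=True)` returns a lazy generator, so the timed function should also iterate over the results. Otherwise the measurement would miss the inference itself.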

Y8 Built-in

If we use the built-in functions to annotate the boxes, classes and IDs, we write:

from ultralytics import YOLO

import cv2

import math

# Set 0 for the ID of the default camera

#cap = cv2.VideoCapture(0)

cap = cv2.VideoCapture("../motorbikes-1.mp4") # For Video

# Add model to evaluate the video capture

model = YOLO('../Yolo-Weights/yolov8l.pt')

while cap.isOpened():
    # Read a frame from the video
    success, frame = cap.read()
    if success:  # frame was read successfully, so run the tracker on it
        results = model.track(frame, stream=True, persist=True)
        # visualize the results on the frame
        for res in results:
            annotated_frame = res.plot()
            print(annotated_frame)
        # display the annotated frame
        cv2.imshow("ShaYaSha", annotated_frame)
        if cv2.waitKey(1) == 27:
            break  # user pressed the ESC key
    else:
        break  # end of video is reached

# Release video capture object and close display window
cap.release()
cv2.destroyAllWindows()

# OUTPUT

0: 352x640 2 persons, 3 cars, 2 motorcycles, 747.6ms

Speed: 4.0ms preprocess, 747.6ms inference, 4.0ms postprocess per image at shape (1, 3, 352, 640)

[[[144 128 108]

[144 128 108]

[144 128 108]

...

[173 156 138]

[173 156 138]

[173 156 138]]

[[144 128 108]

[144 128 108]

[144 128 108]

...

[173 156 138]

[173 156 138]

[173 156 138]]

[[144 128 108]

[144 128 108]

[144 128 108]

...

[173 156 138]

[173 156 138]

[173 156 138]]

...

[[ 61 56 36]

[ 62 57 37]

[ 61 56 36]

...

[ 21 17 5]

[ 21 17 5]

[ 21 17 5]]

[[ 62 57 37]

[ 61 56 36]

[ 61 56 36]

...

[ 19 16 1]

[ 19 16 1]

[ 19 16 1]]

[[ 61 56 36]

[ 61 56 36]

[ 61 56 36]

...

[ 19 16 1]

[ 19 16 1]

[ 19 16 1]]]

0: 352x640 2 persons, 3 cars, 2 motorcycles, 631.3ms

Speed: 3.0ms preprocess, 631.3ms inference, 2.0ms postprocess per image at shape (1, 3, 352, 640)

[[[145 129 109]

[146 130 110]

[146 130 110]

...

# WITHOUT PRINTING THE ANNOTATED FRAME

0: 352x640 2 persons, 3 cars, 2 motorcycles, 720.0ms

Speed: 4.0ms preprocess, 720.0ms inference, 2.0ms postprocess per image at shape (1, 3, 352, 640)

0: 352x640 2 persons, 3 cars, 2 motorcycles, 583.2ms

Speed: 3.6ms preprocess, 583.2ms inference, 2.0ms postprocess per image at shape (1, 3, 352, 640)

0: 352x640 2 persons, 5 cars, 3 motorcycles, 669.9ms

Speed: 3.0ms preprocess, 669.9ms inference, 1.0ms postprocess per image at shape (1, 3, 352, 640)

0: 352x640 2 persons, 5 cars, 3 motorcycles, 530.2ms

Speed: 4.0ms preprocess, 530.2ms inference, 2.0ms postprocess per image at shape (1, 3, 352, 640)

0: 352x640 2 persons, 5 cars, 3 motorcycles, 678.5ms

Speed: 3.3ms preprocess, 678.5ms inference, 1.0ms postprocess per image at shape (1, 3, 352, 640)

0: 352x640 2 persons, 5 cars, 3 motorcycles, 671.8ms

Speed: 3.0ms preprocess, 671.8ms inference, 1.0ms postprocess per image at shape (1, 3, 352, 640)

0: 352x640 2 persons, 5 cars, 3 motorcycles, 668.2ms

Speed: 4.0ms preprocess, 668.2ms inference, 1.0ms postprocess per image at shape (1, 3, 352, 640)

0: 352x640 2 persons, 5 cars, 3 motorcycles, 668.5ms

Speed: 5.0ms preprocess, 668.5ms inference, 1.0ms postprocess per image at shape (1, 3, 352, 640)

Print Boxes
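Each box carries the same rectangle in several formats, as the printed attributes later in this section show: `xyxy` (two corners), `xywh` (center plus size), and normalized variants divided by the frame size. Here is a minimal pure-Python sketch of those conversions, using the values from that printout. The function names are hypothetical, and `orig_shape` is read as (height, width):

```python
def xyxy_to_xywh(x1, y1, x2, y2):
    """Convert corner coordinates to (center_x, center_y, width, height)."""
    return ((x1 + x2) / 2, (y1 + y2) / 2, x2 - x1, y2 - y1)

def normalize(box, orig_shape):
    """Divide x-like values by the frame width and y-like values by the frame height."""
    height, width = orig_shape
    a, b, c, d = box
    return (a / width, b / height, c / width, d / height)

xyxy = (11.5496, 150.4709, 551.4695, 479.8398)  # values from the printed box
orig_shape = (480, 640)                          # (height, width) from the printed box

xywh = xyxy_to_xywh(*xyxy)
xywhn = normalize(xywh, orig_shape)
xyxyn = normalize(xyxy, orig_shape)
print(xywh)
print(xywhn)
print(xyxyn)
```

The results agree with the `xywh`, `xywhn` and `xyxyn` tensors in the printout up to rounding.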

for r in results:
    boxes = r.boxes
    for box in boxes:
        x1,y1,x2,y2 = box.xyxy[0]
        print('x1x2y1y2',x1,y1,x2,y2)
        cls = int(box.cls[0])
        print('cls -->',cls)
        print('box[0] -->',box[0])
        print('box.cls -->',box.cls)
        print('box.conf -->',box.conf)

x1x2y1y2 tensor(11.5496) tensor(150.4709) tensor(551.4695) tensor(479.8398)

cls --> 0

box[0] --> ultralytics.engine.results.Boxes object with attributes:

cls: tensor([0.])

conf: tensor([0.8929])

data: tensor([[ 11.5496, 150.4709, 551.4695, 479.8398, 0.8929, 0.0000]])

id: None

is_track: False

orig_shape: (480, 640)

shape: torch.Size([1, 6])

xywh: tensor([[281.5096, 315.1554, 539.9199, 329.3690]])

xywhn: tensor([[0.4399, 0.6566, 0.8436, 0.6862]])

xyxy: tensor([[ 11.5496, 150.4709, 551.4695, 479.8398]])

xyxyn: tensor([[0.0180, 0.3135, 0.8617, 0.9997]])

box.cls --> tensor([0.])

box.conf --> tensor([0.8929])

Video on GPU

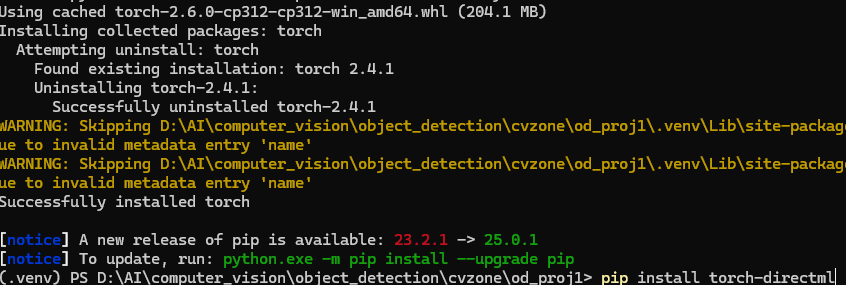

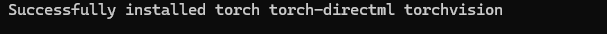

Make sure these are installed inside the venv

Torch-directml

I did not see any difference with torch-directml, and the ONNX variant was much slower. On Linux the GPU was not supported, so my guess is that I need a different GPU.

pip install --upgrade torch ultralytics

pip install torch-directml
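Since `torch-directml` may not be installed or usable on every machine, a defensive sketch could fall back to the CPU when it is unavailable. `pick_device` is a hypothetical helper. Only `torch_directml.device()` is taken from the code on this page:

```python
def pick_device():
    """Return a DirectML device if torch-directml is usable, otherwise the string 'cpu'."""
    try:
        import torch_directml  # optional dependency, installed with pip above
        return torch_directml.device()
    except Exception:
        # Broad on purpose (assumption): covers a missing package as well as
        # a machine without a usable DirectML adapter.
        return "cpu"

device = pick_device()
print("Using device:", device)
# model.to(device)  # as in the snippet on this page, once the model has been created
```

With this fallback the same script can run on machines with and without the package, which makes CPU and GPU timings easier to compare.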

Add this to code to test GPU

import torch_directml

device = torch_directml.device()

model.to(device)

# the above code should run after the model is created and before we call the model on a frame

from ultralytics import YOLO

import cv2

import math

import time

import torch_directml

cap = cv2.VideoCapture("../motorbikes-1.mp4") # For Video

win_name = "Motorbikes-1"

model = YOLO("../Yolo-Weights/yolov8l.pt")

# Add this to use local AMD Radeon GPU (the model must exist before it can be moved)
device = torch_directml.device()
model.to(device)

# List of Class names

classNames = ["person", "bicycle", "car", "motorbike", "aeroplane", "bus", "train", "truck", "boat",

"traffic light", "fire hydrant", "stop sign", "parking meter", "bench", "bird", "cat",

"dog", "horse", "sheep", "cow", "elephant", "bear", "zebra", "giraffe", "backpack", "umbrella",

"handbag", "tie", "suitcase", "frisbee", "skis", "snowboard", "sports ball", "kite", "baseball bat",

"baseball glove", "skateboard", "surfboard", "tennis racket", "bottle", "wine glass", "cup",

"fork", "knife", "spoon", "bowl", "banana", "apple", "sandwich", "orange", "broccoli",

"carrot", "hot dog", "pizza", "donut", "cake", "chair", "sofa", "pottedplant", "bed",

"diningtable", "toilet", "tvmonitor", "laptop", "mouse", "remote", "keyboard", "cell phone",

"microwave", "oven", "toaster", "sink", "refrigerator", "book", "clock", "vase", "scissors",

"teddy bear", "hair drier", "toothbrush"

]

while cap.isOpened():

#Read frame from video

success, frame = cap.read()

if success: #if frame is read successfully set the results of the model on the frame

results = model(frame, stream=True)

# Insert Box Extraction section here

for r in results:

boxes = r.boxes

for box in boxes:

x1,y1,x2,y2 = box.xyxy[0]

x1,y1,x2,y2 = int(x1),int(y1),int(x2),int(y2) # convert values to integers

cv2.rectangle(frame, (x1,y1), (x2,y2), (0,255,0), 3)

# we can also use a function from cvzone/utils.py called

#cvzone.cornerRect(img,(x1,y1,w,h))

# extract the confidence level

conf = math.ceil(box.conf[0]*100)/100

# extract class ID

cls = int(box.cls[0])

# display both conf & class ID on frame

putTextRect(frame,f'{conf} {classNames[cls]}',(max(0,x1), max(35,y1)))

# To edit the size & thickness of text use

#putTextRect(frame,f'{classNames[cls]} {conf}',(max(0, x1),max(35,y1)), scale = 2, thickness=1)

cv2.imshow(win_name, frame)

if cv2.waitKey(1) == 27:

break # if user breaks with ESC key

else:

break # if end of video is reached

# Release video capture object and close display window

cap.release()

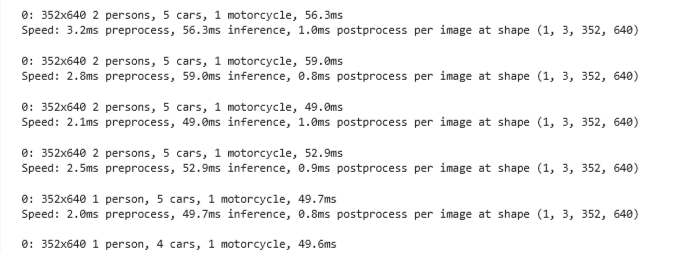

cv2.destroyAllWindows()0: 352x640 4 persons, 8 cars, 3 motorcycles, 1 bus, 387.5ms

Speed: 2.9ms preprocess, 387.5ms inference, 2.0ms postprocess per image at shape (1, 3, 352, 640)

0: 352x640 4 persons, 7 cars, 3 motorcycles, 1 bus, 383.2ms

Speed: 2.1ms preprocess, 383.2ms inference, 0.0ms postprocess per image at shape (1, 3, 352, 640)

0: 352x640 3 persons, 7 cars, 3 motorcycles, 1 bus, 1 truck, 373.6ms

Speed: 2.0ms preprocess, 373.6ms inference, 2.0ms postprocess per image at shape (1, 3, 352, 640)

0: 352x640 3 persons, 7 cars, 3 motorcycles, 1 bus, 1 truck, 368.1ms

Speed: 3.0ms preprocess, 368.1ms inference, 2.0ms postprocess per image at shape (1, 3, 352, 640)

0: 352x640 3 persons, 7 cars, 3 motorcycles, 1 bus, 1 truck, 340.1ms

Speed: 3.0ms preprocess, 340.1ms inference, 0.0ms postprocess per image at shape (1, 3, 352, 640)

0: 352x640 3 persons, 7 cars, 3 motorcycles, 1 bus, 1 truck, 332.1ms

Speed: 0.0ms preprocess, 332.1ms inference, 0.0ms postprocess per image at shape (1, 3, 352, 640)

0: 352x640 4 persons, 7 cars, 3 motorcycles, 1 bus, 1 truck, 412.7ms

Speed: 2.0ms preprocess, 412.7ms inference, 1.0ms postprocess per image at shape (1, 3, 352, 640)

0: 352x640 3 persons, 8 cars, 3 motorcycles, 1 bus, 2 trucks, 344.4ms

Speed: 1.9ms preprocess, 344.4ms inference, 1.7ms postprocess per image at shape (1, 3, 352, 640)

0: 352x640 3 persons, 8 cars, 3 motorcycles, 1 bus, 2 trucks, 381.1ms

Speed: 2.2ms preprocess, 381.1ms inference, 1.0ms postprocess per image at shape (1, 3, 352, 640)

0: 352x640 4 persons, 8 cars, 3 motorcycles, 1 bus, 1 truck, 353.3ms

Speed: 0.7ms preprocess, 353.3ms inference, 1.2ms postprocess per image at shape (1, 3, 352, 640)

0: 352x640 4 persons, 7 cars, 3 motorcycles, 1 bus, 359.9ms

Speed: 3.9ms preprocess, 359.9ms inference, 0.0ms postprocess per image at shape (1, 3, 352, 640)

Onnx & GPU

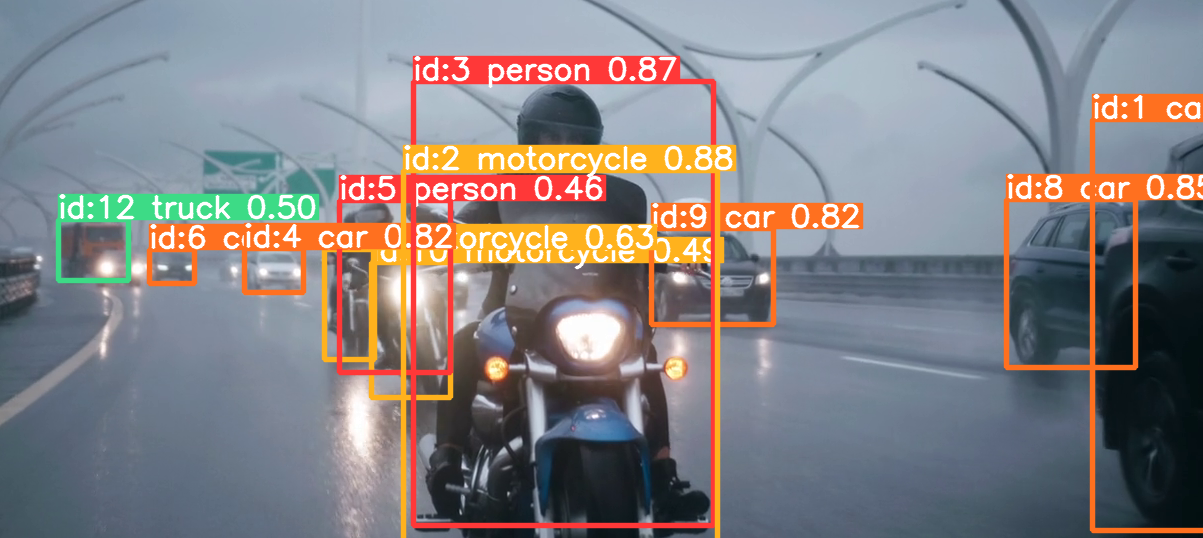

This run uses onnxruntime-directml, which was slower than the CPU. My guess is that this GPU is not well suited for ML.
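To put numbers on a comparison like this, the inference times can be averaged from the "Speed:" lines that Ultralytics prints for every frame. The sketch below is a small helper under that assumption (`mean_inference_ms` is a hypothetical name). It parses three log lines copied from the torch-directml run on this page:

```python
import re
from statistics import mean

# Captures the inference time from lines such as
# "Speed: 2.9ms preprocess, 387.5ms inference, 2.0ms postprocess per image ..."
SPEED_RE = re.compile(r"Speed: [\d.]+ms preprocess, ([\d.]+)ms inference")

def mean_inference_ms(log_text):
    """Average the inference times found in the log text, or None if there are none."""
    times = [float(value) for value in SPEED_RE.findall(log_text)]
    return mean(times) if times else None

directml_log = """
Speed: 2.9ms preprocess, 387.5ms inference, 2.0ms postprocess per image at shape (1, 3, 352, 640)
Speed: 2.1ms preprocess, 383.2ms inference, 0.0ms postprocess per image at shape (1, 3, 352, 640)
Speed: 2.0ms preprocess, 373.6ms inference, 2.0ms postprocess per image at shape (1, 3, 352, 640)
"""

average = mean_inference_ms(directml_log)
print(f"mean inference: {average:.1f} ms")
```

Feeding the saved console output of each run through the same helper gives one number per configuration, which is easier to compare than scanning the raw logs.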

from ultralytics import YOLO
import cv2
import math

cap = cv2.VideoCapture("../motorbikes-1.mp4") # For Video

win_name = "Motorbikes-1"

model = YOLO("../Yolo-Weights/yolov8l.pt")

# Export the model to ONNX format. Note that the loop below still runs the PyTorch model, because the exported .onnx file is never loaded

model.export(format="onnx")

# List of Class names

classNames = ["person", "bicycle", "car", "motorbike", "aeroplane", "bus", "train", "truck", "boat",

"traffic light", "fire hydrant", "stop sign", "parking meter", "bench", "bird", "cat",

"dog", "horse", "sheep", "cow", "elephant", "bear", "zebra", "giraffe", "backpack", "umbrella",

"handbag", "tie", "suitcase", "frisbee", "skis", "snowboard", "sports ball", "kite", "baseball bat",

"baseball glove", "skateboard", "surfboard", "tennis racket", "bottle", "wine glass", "cup",

"fork", "knife", "spoon", "bowl", "banana", "apple", "sandwich", "orange", "broccoli",

"carrot", "hot dog", "pizza", "donut", "cake", "chair", "sofa", "pottedplant", "bed",

"diningtable", "toilet", "tvmonitor", "laptop", "mouse", "remote", "keyboard", "cell phone",

"microwave", "oven", "toaster", "sink", "refrigerator", "book", "clock", "vase", "scissors",

"teddy bear", "hair drier", "toothbrush"

]

while cap.isOpened():
    # Read a frame from the video
    success, frame = cap.read()
    if success:  # frame was read successfully, so run the model on it
        results = model(frame, stream=True)
        # Box extraction
        for r in results:
            boxes = r.boxes
            for box in boxes:
                x1, y1, x2, y2 = box.xyxy[0]
                x1, y1, x2, y2 = int(x1), int(y1), int(x2), int(y2)  # convert values to integers
                cv2.rectangle(frame, (x1, y1), (x2, y2), (0, 255, 0), 3)
                # we can also use a function from cvzone/utils.py called
                # cvzone.cornerRect(img, (x1, y1, w, h))
                # extract the confidence level, rounded up to two decimals
                conf = math.ceil(box.conf[0] * 100) / 100
                # extract class ID
                cls = int(box.cls[0])
                # display both conf & class name on frame
                putTextRect(frame, f'{conf} {classNames[cls]}', (max(0, x1), max(35, y1)))
                # To edit the size & thickness of text use
                # putTextRect(frame, f'{classNames[cls]} {conf}', (max(0, x1), max(35, y1)), scale=2, thickness=1)
        cv2.imshow(win_name, frame)
        if cv2.waitKey(1) == 27:
            break  # user pressed the ESC key
    else:
        break  # end of video is reached

# Release video capture object and close display window
cap.release()

cv2.destroyAllWindows()

D:\AI\computer_vision\object_detection\cvzone\od_proj1\.venv\Lib\site-packages\ultralytics\nn\tasks.py:527: FutureWarning: You are using `torch.load` with `weights_only=False` (the current default value), which uses the default pickle module implicitly. It is possible to construct malicious pickle data which will execute arbitrary code during unpickling (See https://github.com/pytorch/pytorch/blob/main/SECURITY.md#untrusted-models for more details). In a future release, the default value for `weights_only` will be flipped to `True`. This limits the functions that could be executed during unpickling. Arbitrary objects will no longer be allowed to be loaded via this mode unless they are explicitly allowlisted by the user via `torch.serialization.add_safe_globals`. We recommend you start setting `weights_only=True` for any use case where you don't have full control of the loaded file. Please open an issue on GitHub for any issues related to this experimental feature.

return torch.load(file, map_location='cpu'), file # load

Ultralytics YOLOv8.0.184 Python-3.12.9 torch-2.4.1+cu118 CPU (AMD Ryzen 5 7530U with Radeon Graphics)

YOLOv8l summary (fused): 268 layers, 43668288 parameters, 0 gradients, 165.2 GFLOPs

PyTorch: starting from '..\Yolo-Weights\yolov8l.pt' with input shape (1, 3, 640, 640) BCHW and output shape(s) (1, 84, 8400) (83.7 MB)

ONNX: starting export with onnx 1.17.0 opset 19...

ONNX: export success 3.8s, saved as '..\Yolo-Weights\yolov8l.onnx' (166.8 MB)

Export complete (10.9s)

Results saved to D:\AI\computer_vision\object_detection\cvzone\Yolo-Weights

Predict: yolo predict task=detect model=..\Yolo-Weights\yolov8l.onnx imgsz=640

Validate: yolo val task=detect model=..\Yolo-Weights\yolov8l.onnx imgsz=640 data=coco.yaml

Visualize: https://netron.app

0: 352x640 4 persons, 8 cars, 3 motorcycles, 1 bus, 413.8ms

Speed: 8.1ms preprocess, 413.8ms inference, 1.0ms postprocess per image at shape (1, 3, 352, 640)

0: 352x640 4 persons, 7 cars, 3 motorcycles, 1 bus, 439.3ms

Speed: 2.1ms preprocess, 439.3ms inference, 1.0ms postprocess per image at shape (1, 3, 352, 640)

0: 352x640 3 persons, 7 cars, 3 motorcycles, 1 bus, 1 truck, 429.1ms

Speed: 2.5ms preprocess, 429.1ms inference, 0.0ms postprocess per image at shape (1, 3, 352, 640)

0: 352x640 3 persons, 7 cars, 3 motorcycles, 1 bus, 1 truck, 390.1ms

Speed: 3.1ms preprocess, 390.1ms inference, 2.0ms postprocess per image at shape (1, 3, 352, 640)

0: 352x640 3 persons, 7 cars, 3 motorcycles, 1 bus, 1 truck, 374.5ms

Speed: 0.0ms preprocess, 374.5ms inference, 2.0ms postprocess per image at shape (1, 3, 352, 640)

AMD - Linux

Check Ubuntu version

~$ lsb_release -a

No LSB modules are available.

Distributor ID: Ubuntu

Description: Ubuntu 22.04.5 LTS

Release: 22.04

Codename: jammy

Update OS

sudo apt-get update

sudo apt-get dist-upgrade

Install AMD driver package

wget https://repo.radeon.com/amdgpu-install/6.2.3/ubuntu/jammy/amdgpu-install_6.2.60203-1_all.deb

sudo apt install ./amdgpu-install_6.2.60203-1_all.deb

Display usecase

sudo amdgpu-install --list-usecase

Install Open source graphics and ROCm

amdgpu-install -y --usecase=wsl,rocm --no-dkms

Check GPU is listed

$ rocminfo

WSL environment detected. ROCR: unsupported GPU