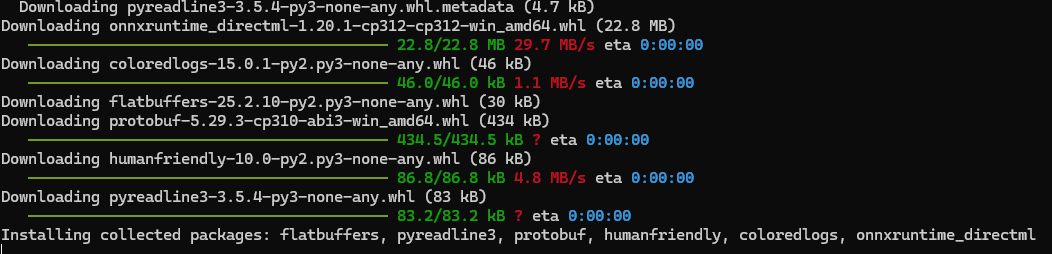

pip install onnxruntime-directml

# for me

(.venv) PS D:~\od_proj1>pip install onnxruntime-directmlYOLO - Reference

I’ll list all these references on this page, they might not mean much at this time but I’ll refer to them as we progress.

Here are some links to the documents and other at ultralytics https://docs.ultralytics.com/ and proceed to reference which is the quickest to retrieve a subject.

GPU

Let’s start by checking to see if we can reach the local GPU on my windows 11 system to use for running inference for YOLO models.

I am aware as of the date of this writing that YOLO does not allow training their models on AMD Radeon GPUs. It is only compatible with NVIDIA GPUs.

Here are the steps to test if it will run inference on my local AMD Radeon GPU.

1- install the DirectML version of ONNX. It’s crucial to choose ONNX DirectML over any other variants or versions. The Python package you need is aptly named “onnxruntime_directml”. Feel free to use:

Make sure you install it in the venv you are using for your project

2- render your YOLO model into the ONNX format.

from ultralytics import YOLO

model = YOLO('yolov8n.pt')

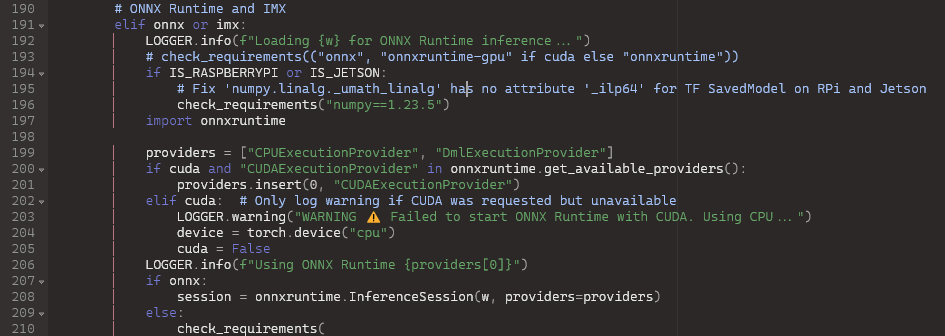

model.export(format='onnx')3- Add the ‘DmlExecutionProvider’ string to the providers list: this is lines 133 to 140 in “.venv\Lib\site-packages\ultralytics\nn\autobackend.py”:

I guess step 3 should be step 2. Make sure you comment out line 135 as shown below

133 elif onnx: # ONNX Runtime

134 LOGGER.info(f'Loading {w} for ONNX Runtime inference...')

135 # check_requirements(('onnx', 'onnxruntime-gpu' if cuda else 'onnxruntime'))

136 import onnxruntime

137 providers = ['CUDAExecutionProvider', 'CPUExecutionProvider'] if cuda else ['DmlExecutionProvider', 'CPUExecutionProvider']

138 session = onnxruntime.InferenceSession(w, providers=providers)

139 output_names = [x.name for x in session.get_outputs()]

140 metadata = session.get_modelmeta().custom_metadata_map # metadataThe lines in my file are at different location

Arguments

from ultralytics import YOLO

# Load a pretrained YOLO11n model

model = YOLO("yolo11n.pt")

# Run inference on 'bus.jpg' with arguments

model.predict("bus.jpg", save=True, imgsz=320, conf=0.5)In order to run the model we use model.predict() which accepts multiple arguments

Inference arguments

| Argument | Type | Default | Description |

|---|---|---|---|

source |

str |

'ultralytics/assets' |

Specifies the data source for inference. Can be an image path, video file, directory, URL, or device ID for live feeds. Supports a wide range of formats and sources, enabling flexible application across different types of input. |

conf |

float |

0.25 |

Sets the minimum confidence threshold for detections. Objects detected with confidence below this threshold will be disregarded. Adjusting this value can help reduce false positives. |

iou |

float |

0.7 |

Intersection Over Union (IoU) threshold for Non-Maximum Suppression (NMS). Lower values result in fewer detections by eliminating overlapping boxes, useful for reducing duplicates. |

imgsz |

int or tuple |

640 |

Defines the image size for inference. Can be a single integer 640 for square resizing or a (height, width) tuple. Proper sizing can improve detection accuracy and processing speed. |

half |

bool |

False |

Enables half-precision (FP16) inference, which can speed up model inference on supported GPUs with minimal impact on accuracy. |

device |

str |

None |

Specifies the device for inference (e.g., cpu, cuda:0 or 0). Allows users to select between CPU, a specific GPU, or other compute devices for model execution. |

batch |

int |

1 |

Specifies the batch size for inference (only works when the source is a directory, video file or .txt file). A larger batch size can provide higher throughput, shortening the total amount of time required for inference. |

max_det |

int |

300 |

Maximum number of detections allowed per image. Limits the total number of objects the model can detect in a single inference, preventing excessive outputs in dense scenes. |

vid_stride |

int |

1 |

Frame stride for video inputs. Allows skipping frames in videos to speed up processing at the cost of temporal resolution. A value of 1 processes every frame, higher values skip frames. |

stream_buffer |

bool |

False |

Determines whether to queue incoming frames for video streams. If False, old frames get dropped to accommodate new frames (optimized for real-time applications). If `True’, queues new frames in a buffer, ensuring no frames get skipped, but will cause latency if inference FPS is lower than stream FPS. |

visualize |

bool |

False |

Activates visualization of model features during inference, providing insights into what the model is “seeing”. Useful for debugging and model interpretation. |

augment |

bool |

False |

Enables test-time augmentation (TTA) for predictions, potentially improving detection robustness at the cost of inference speed. |

agnostic_nms |

bool |

False |

Enables class-agnostic Non-Maximum Suppression (NMS), which merges overlapping boxes of different classes. Useful in multi-class detection scenarios where class overlap is common. |

classes |

list[int] |

None |

Filters predictions to a set of class IDs. Only detections belonging to the specified classes will be returned. Useful for focusing on relevant objects in multi-class detection tasks. |

retina_masks |

bool |

False |

Returns high-resolution segmentation masks. The returned masks (masks.data) will match the original image size if enabled. If disabled, they have the image size used during inference. |

embed |

list[int] |

None |

Specifies the layers from which to extract feature vectors or embeddings. Useful for downstream tasks like clustering or similarity search. |

project |

str |

None |

Name of the project directory where prediction outputs are saved if save is enabled. |

name |

str |

None |

Name of the prediction run. Used for creating a subdirectory within the project folder, where prediction outputs are stored if save is enabled. |

Visualization arguments

| Argument | Type | Default | Description |

|---|---|---|---|

show |

bool |

False |

If True, displays the annotated images or videos in a window. Useful for immediate visual feedback during development or testing. |

save |

bool |

False or True |

Enables saving of the annotated images or videos to file. Useful for documentation, further analysis, or sharing results. Defaults to True when using CLI & False when used in Python. |

save_frames |

bool |

False |

When processing videos, saves individual frames as images. Useful for extracting specific frames or for detailed frame-by-frame analysis. |

save_txt |

bool |

False |

Saves detection results in a text file, following the format [class] [x_center] [y_center] [width] [height] [confidence]. Useful for integration with other analysis tools. |

save_conf |

bool |

False |

Includes confidence scores in the saved text files. Enhances the detail available for post-processing and analysis. |

save_crop |

bool |

False |

Saves cropped images of detections. Useful for dataset augmentation, analysis, or creating focused datasets for specific objects. |

show_labels |

bool |

True |

Displays labels for each detection in the visual output. Provides immediate understanding of detected objects. |

show_conf |

bool |

True |

Displays the confidence score for each detection alongside the label. Gives insight into the model’s certainty for each detection. |

show_boxes |

bool |

True |

Draws bounding boxes around detected objects. Essential for visual identification and location of objects in images or video frames. |

line_width |

None or int |

None |

Specifies the line width of bounding boxes. If None, the line width is automatically adjusted based on the image size. Provides visual customization for clarity. |

Images

| Image Suffixes | Example Predict Command | Reference |

|---|---|---|

.bmp |

yolo predict source=image.bmp |

Microsoft BMP File Format |

.dng |

yolo predict source=image.dng |

Adobe DNG |

.jpeg |

yolo predict source=image.jpeg |

JPEG |

.jpg |

yolo predict source=image.jpg |

JPEG |

.mpo |

yolo predict source=image.mpo |

Multi Picture Object |

.png |

yolo predict source=image.png |

Portable Network Graphics |

.tif |

yolo predict source=image.tif |

Tag Image File Format |

.tiff |

yolo predict source=image.tiff |

Tag Image File Format |

.webp |

yolo predict source=image.webp |

WebP |

.pfm |

yolo predict source=image.pfm |

Portable FloatMap |

.HEIC |

yolo predict source=image.HEIC |

High Efficiency Image Format |

Videos

| Video Suffixes | Example Predict Command | Reference |

|---|---|---|

.asf |

yolo predict source=video.asf |

Advanced Systems Format |

.avi |

yolo predict source=video.avi |

Audio Video Interleave |

.gif |

yolo predict source=video.gif |

Graphics Interchange Format |

.m4v |

yolo predict source=video.m4v |

MPEG-4 Part 14 |

.mkv |

yolo predict source=video.mkv |

Matroska |

.mov |

yolo predict source=video.mov |

QuickTime File Format |

.mp4 |

yolo predict source=video.mp4 |

MPEG-4 Part 14 - Wikipedia |

.mpeg |

yolo predict source=video.mpeg |

MPEG-1 Part 2 |

.mpg |

yolo predict source=video.mpg |

MPEG-1 Part 2 |

.ts |

yolo predict source=video.ts |

MPEG Transport Stream |

.wmv |

yolo predict source=video.wmv |

Windows Media Video |

.webm |

yolo predict source=video.webm |

WebM Project |

Results

from ultralytics import YOLO

# Load a pretrained YOLO11n model

model = YOLO("yolo11n.pt")

# Run inference on an image

results = model("bus.jpg") # list of 1 Results object

results = model(["bus.jpg", "zidane.jpg"]) # list of 2 Results objectsResults Attributes

| Attribute | Type | Description |

|---|---|---|

orig_img |

numpy.ndarray |

The original image as a numpy array. |

orig_shape |

tuple |

The original image shape in (height, width) format. |

boxes |

Boxes, optional |

A Boxes object containing the detection bounding boxes. |

masks |

Masks, optional |

A Masks object containing the detection masks. |

probs |

Probs, optional |

A Probs object containing probabilities of each class for classification task. |

keypoints |

Keypoints, optional |

A Keypoints object containing detected keypoints for each object. |

obb |

OBB, optional |

An OBB object containing oriented bounding boxes. |

speed |

dict |

A dictionary of preprocess, inference, and postprocess speeds in milliseconds per image. |

names |

dict |

A dictionary of class names. |

path |

str |

The path to the image file. |

Object Methods

| Method | Return Type | Description |

|---|---|---|

update() |

None |

Update the boxes, masks, and probs attributes of the Results object. |

cpu() |

Results |

Return a copy of the Results object with all tensors on CPU memory. |

numpy() |

Results |

Return a copy of the Results object with all tensors as numpy arrays. |

cuda() |

Results |

Return a copy of the Results object with all tensors on GPU memory. |

to() |

Results |

Return a copy of the Results object with tensors on the specified device and dtype. |

new() |

Results |

Return a new Results object with the same image, path, and names. |

plot() |

numpy.ndarray |

Plots the detection results. Returns a numpy array of the annotated image. |

show() |

None |

Show annotated results to screen. |

save() |

None |

Save annotated results to file. |

verbose() |

str |

Return log string for each task. |

save_txt() |

None |

Save predictions into a txt file. |

save_crop() |

None |

Save cropped predictions to save_dir/cls/file_name.jpg. |

tojson() |

str |

Convert the object to JSON format. |

Boxes

Boxes object can be used to index, manipulate, and convert bounding boxes to different formats.

from ultralytics import YOLO

# Load a pretrained YOLO11n model

model = YOLO("yolo11n.pt")

# Run inference on an image

results = model("bus.jpg") # results list

# View results

for r in results:

print(r.boxes) # print the Boxes object containing the detection bounding boxesHere is a table for the Boxes class methods and properties, including their name, type, and description:

| Name | Type | Description |

|---|---|---|

cpu() |

Method | Move the object to CPU memory. |

numpy() |

Method | Convert the object to a numpy array. |

cuda() |

Method | Move the object to CUDA memory. |

to() |

Method | Move the object to the specified device. |

xyxy |

Property (torch.Tensor) |

Return the boxes in xyxy format. |

conf |

Property (torch.Tensor) |

Return the confidence values of the boxes. |

cls |

Property (torch.Tensor) |

Return the class values of the boxes. |

id |

Property (torch.Tensor) |

Return the track IDs of the boxes (if available). |

xywh |

Property (torch.Tensor) |

Return the boxes in xywh format. |

xyxyn |

Property (torch.Tensor) |

Return the boxes in xyxy format normalized by original image size. |

xywhn |

Property (torch.Tensor) |

Return the boxes in xywh format normalized by original image size. |

Masks

Masks object can be used index, manipulate and convert masks to segments.

from ultralytics import YOLO

# Load a pretrained YOLO11n-seg Segment model

model = YOLO("yolo11n-seg.pt")

# Run inference on an image

results = model("bus.jpg") # results list

# View results

for r in results:

print(r.masks) # print the Masks object containing the detected instance masksHere is a table for the Masks class methods and properties, including their name, type, and description:

| Name | Type | Description |

|---|---|---|

cpu() |

Method | Returns the masks tensor on CPU memory. |

numpy() |

Method | Returns the masks tensor as a numpy array. |

cuda() |

Method | Returns the masks tensor on GPU memory. |

to() |

Method | Returns the masks tensor with the specified device and dtype. |

xyn |

Property (torch.Tensor) |

A list of normalized segments represented as tensors. |

xy |

Property (torch.Tensor) |

A list of segments in pixel coordinates represented as tensors. |

Keypoints

Keypoints object can be used index, manipulate and normalize coordinates.

from ultralytics import YOLO

# Load a pretrained YOLO11n-pose Pose model

model = YOLO("yolo11n-pose.pt")

# Run inference on an image

results = model("bus.jpg") # results list

# View results

for r in results:

print(r.keypoints) # print the Keypoints object containing the detected keypointsHere is a table for the Keypoints class methods and properties, including their name, type, and description:

| Name | Type | Description |

|---|---|---|

cpu() |

Method | Returns the keypoints tensor on CPU memory. |

numpy() |

Method | Returns the keypoints tensor as a numpy array. |

cuda() |

Method | Returns the keypoints tensor on GPU memory. |

to() |

Method | Returns the keypoints tensor with the specified device and dtype. |

xyn |

Property (torch.Tensor) |

A list of normalized keypoints represented as tensors. |

xy |

Property (torch.Tensor) |

A list of keypoints in pixel coordinates represented as tensors. |

conf |

Property (torch.Tensor) |

Returns confidence values of keypoints if available, else None. |

Probs

Probs object can be used index, get top1 and top5 indices and scores of classification.

from ultralytics import YOLO

# Load a pretrained YOLO11n-cls Classify model

model = YOLO("yolo11n-cls.pt")

# Run inference on an image

results = model("bus.jpg") # results list

# View results

for r in results:

print(r.probs) # print the Probs object containing the detected class probabilitiesHere’s a table summarizing the methods and properties for the Probs class:

| Name | Type | Description |

|---|---|---|

cpu() |

Method | Returns a copy of the probs tensor on CPU memory. |

numpy() |

Method | Returns a copy of the probs tensor as a numpy array. |

cuda() |

Method | Returns a copy of the probs tensor on GPU memory. |

to() |

Method | Returns a copy of the probs tensor with the specified device and dtype. |

top1 |

Property (int) |

Index of the top 1 class. |

top5 |

Property (list[int]) |

Indices of the top 5 classes. |

top1conf |

Property (torch.Tensor) |

Confidence of the top 1 class. |

top5conf |

Property (torch.Tensor) |

Confidences of the top 5 classes. |

OBB

OBB object can be used to index, manipulate, and convert oriented bounding boxes to different formats.

from ultralytics import YOLO

# Load a pretrained YOLO11n model

model = YOLO("yolo11n-obb.pt")

# Run inference on an image

results = model("boats.jpg") # results list

# View results

for r in results:

print(r.obb) # print the OBB object containing the oriented detection bounding boxesHere is a table for the OBB class methods and properties, including their name, type, and description:

| Name | Type | Description |

|---|---|---|

cpu() |

Method | Move the object to CPU memory. |

numpy() |

Method | Convert the object to a numpy array. |

cuda() |

Method | Move the object to CUDA memory. |

to() |

Method | Move the object to the specified device. |

conf |

Property (torch.Tensor) |

Return the confidence values of the boxes. |

cls |

Property (torch.Tensor) |

Return the class values of the boxes. |

id |

Property (torch.Tensor) |

Return the track IDs of the boxes (if available). |

xyxy |

Property (torch.Tensor) |

Return the horizontal boxes in xyxy format. |

xywhr |

Property (torch.Tensor) |

Return the rotated boxes in xywhr format. |

xyxyxyxy |

Property (torch.Tensor) |

Return the rotated boxes in xyxyxyxy format. |

xyxyxyxyn |

Property (torch.Tensor) |

Return the rotated boxes in xyxyxyxy format normalized by image size. |

Plotting Results

The plot() method in Results objects facilitates visualization of predictions by overlaying detected objects (such as bounding boxes, masks, keypoints, and probabilities) onto the original image. This method returns the annotated image as a NumPy array, allowing for easy display or saving.

from PIL import Image

from ultralytics import YOLO

# Load a pretrained YOLO11n model

model = YOLO("yolo11n.pt")

# Run inference on 'bus.jpg'

results = model(["bus.jpg", "zidane.jpg"]) # results list

# Visualize the results

for i, r in enumerate(results):

# Plot results image

im_bgr = r.plot() # BGR-order numpy array

im_rgb = Image.fromarray(im_bgr[..., ::-1]) # RGB-order PIL image

# Show results to screen (in supported environments)

r.show()

# Save results to disk

r.save(filename=f"results{i}.jpg")Plot Parameters

The plot() method supports various arguments to customize the output:

| Argument | Type | Description | Default |

|---|---|---|---|

conf |

bool |

Include detection confidence scores. | True |

line_width |

float |

Line width of bounding boxes. Scales with image size if None. |

None |

font_size |

float |

Text font size. Scales with image size if None. |

None |

font |

str |

Font name for text annotations. | 'Arial.ttf' |

pil |

bool |

Return image as a PIL Image object. | False |

img |

numpy.ndarray |

Alternative image for plotting. Uses the original image if None. |

None |

im_gpu |

torch.Tensor |

GPU-accelerated image for faster mask plotting. Shape: (1, 3, 640, 640). | None |

kpt_radius |

int |

Radius for drawn keypoints. | 5 |

kpt_line |

bool |

Connect keypoints with lines. | True |

labels |

bool |

Include class labels in annotations. | True |

boxes |

bool |

Overlay bounding boxes on the image. | True |

masks |

bool |

Overlay masks on the image. | True |

probs |

bool |

Include classification probabilities. | True |

show |

bool |

Display the annotated image directly using the default image viewer. | False |

save |

bool |

Save the annotated image to a file specified by filename. |

False |

filename |

str |

Path and name of the file to save the annotated image if save is True. |

None |

color_mode |

str |

Specify the color mode, e.g., ‘instance’ or ‘class’. | 'class' |

Streaming Source

To optimize inference speed and manage memory efficiently, you can use the streaming mode by setting stream=True in the predictor’s call method. The streaming mode generates a memory-efficient generator of Results objects instead of loading all frames into memory. For processing long videos or large datasets, streaming mode is particularly useful.

Streaming For Loop

Here’s a Python script using OpenCV (cv2) and YOLO to run inference on video frames. This script assumes you have already installed the necessary packages (opencv-python and ultralytics).

This script will run predictions on each frame of the video, visualize the results, and display them in a window. The loop can be exited by pressing ‘q’.

import cv2

from ultralytics import YOLO

# Load the YOLO model

model = YOLO("yolo11n.pt")

# Open the video file

video_path = "path/to/your/video/file.mp4"

cap = cv2.VideoCapture(video_path)

# Loop through the video frames

while cap.isOpened():

# Read a frame from the video

success, frame = cap.read()

if success:

# Run YOLO inference on the frame

results = model(frame)

# Visualize the results on the frame

annotated_frame = results[0].plot()

# Display the annotated frame

cv2.imshow("YOLO Inference", annotated_frame)

# Break the loop if 'q' is pressed

if cv2.waitKey(1) & 0xFF == ord("q"):

break

else:

# Break the loop if the end of the video is reached

break

# Release the video capture object and close the display window

cap.release()

cv2.destroyAllWindows()