# create cnnvenv from command prompt, activate it

~\AI\ML\Stanford_Ng>py -m venv cnnvenv

~\AI\ML\Stanford_Ng>cnnvenv\Scripts\activate

# install the ipykernel so we can access cnnvenv from within the notebooks (jupyter)

(cnnvenv) ~\AI\ML\Stanford_Ng>pip install ipykernel

(cnnvenv) ~\AI\ML\Stanford_Ng>python -m ipykernel install --user --name=cnnvenv --display-name "CNN venv"

(cnnvenv) ~\AI\ML\Stanford_Ng>jupyter notebook

CNN

Convolutional Neural Networks: we will cover the definition, the basic setup of the environment, and the implementation of the foundational layers of CNNs, then stack those layers properly in a deep network to solve multi-class image classification problems.

We will move on to pre-trained models in the next section. This section might be more detailed than usual but at the very least we get a general idea of what’s under the hood before we dive into projects.

Setup

This section sets up the environment we will need to work on this subject. I have covered it in other sections, but I will include it here as well.

- Before we get started, let’s set up a venv named cnnvenv

- The reason is that I want to isolate all the CNN work in one place

- Create the venv

- Activate it

- Install ipykernel so we can access the venv from within Jupyter Notebook

- Register the cnnvenv kernel and assign it a display name to use inside Jupyter Notebook
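Once the kernel is selected in a notebook, a quick check in the first cell can confirm that the notebook is really running on the venv interpreter. This is a minimal sketch using only the standard library; the helper name `in_virtualenv` is my own and not part of any tool.

```python
import sys


def in_virtualenv() -> bool:
    """Return True when the interpreter is running inside a venv."""
    # Inside a venv, sys.prefix points at the venv directory while
    # sys.base_prefix still points at the base Python installation.
    return sys.prefix != sys.base_prefix


if __name__ == "__main__":
    # The printed path should sit under the cnnvenv folder when the
    # "CNN venv" kernel is active.
    print("interpreter:", sys.executable)
    print("inside a venv:", in_virtualenv())
```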


Definition

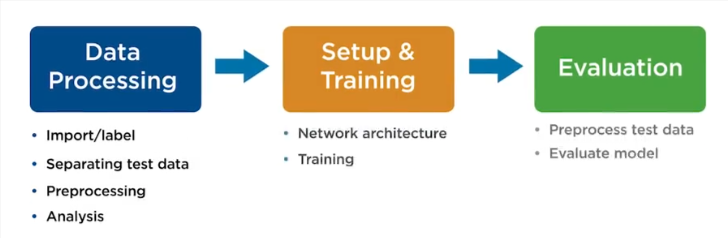

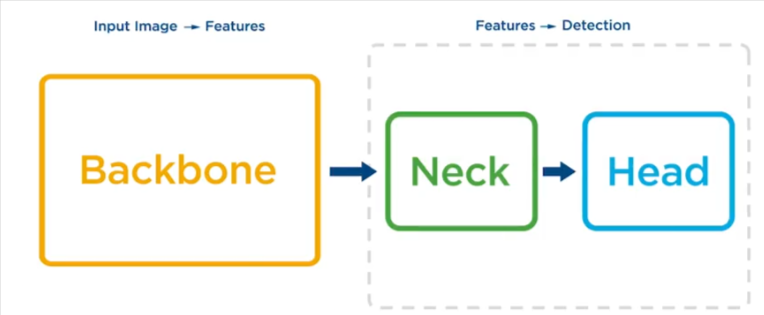

Classification categorizes an image as a whole. Object detection precisely locates and recognizes individual objects in a given image. Here is an overview of the process:

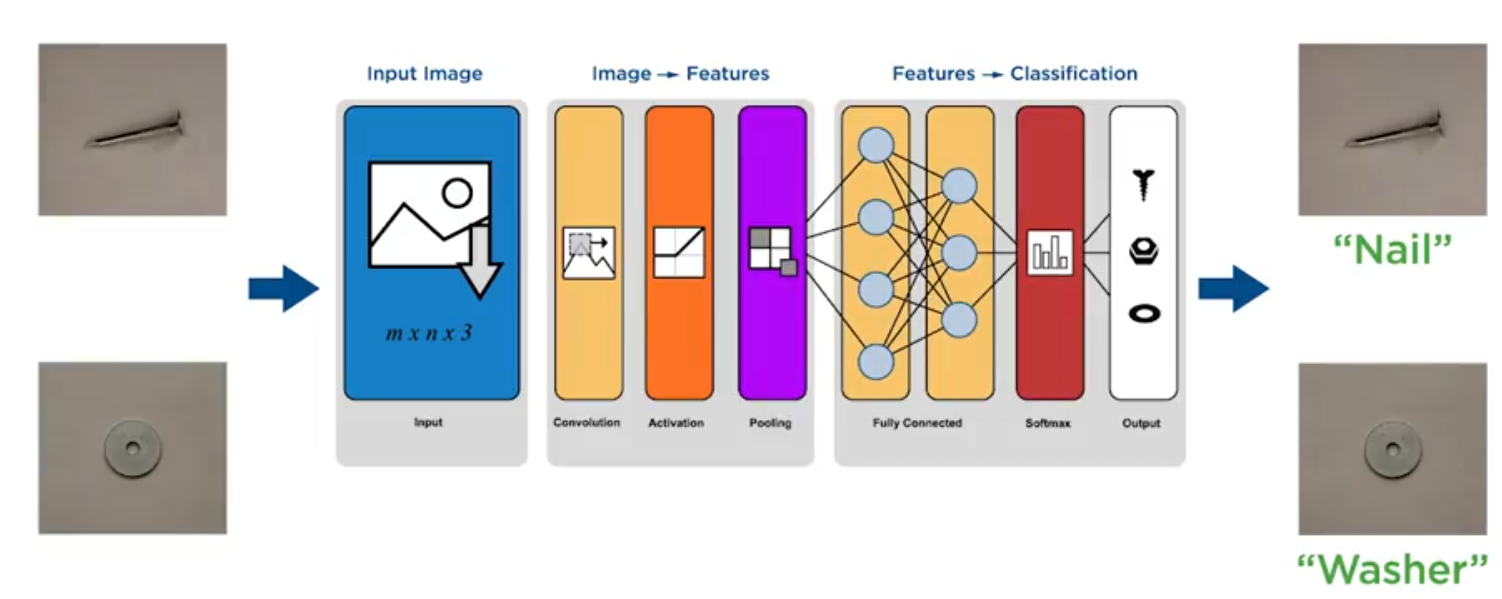

Image Classification

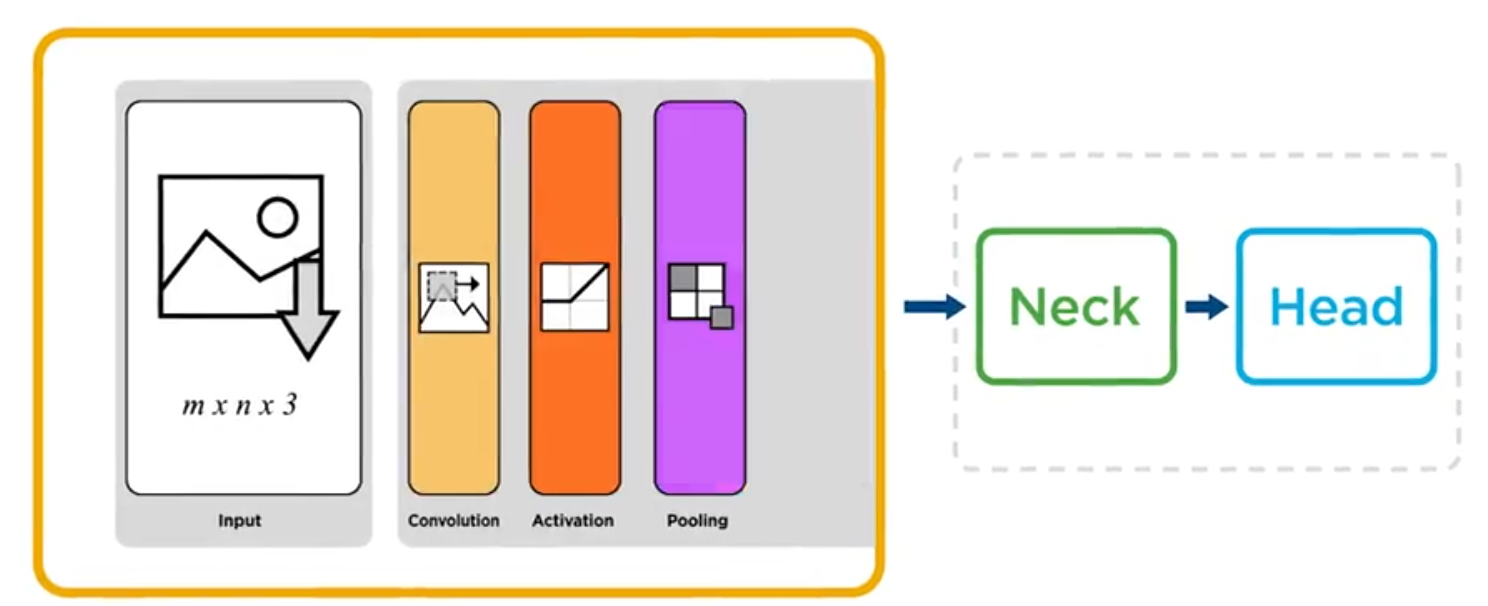

- Convolutional neural network (CNN) based classifiers use a sequence of an input layer, a feature extraction network, and classification layers to accomplish this.

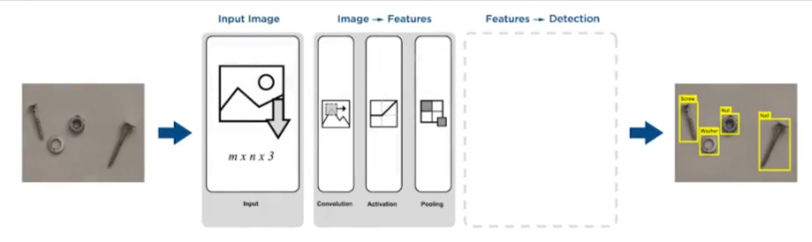

- For object detection, the goal is to take in unlabeled images, which may contain multiple objects of interest, and to determine the size, location, and class label of each.

To do this, CNN-based object detection models use the same sequence of input layer and feature extraction network as classification models.

- This sequence, which goes from input to features, is referred to as the backbone in object detection models.

- In addition to the backbone, object detection models typically use a pair of networks called a neck and a head to move from features to detection results.

- The neck often involves operations to aggregate features taken from multiple scales within the backbone network.

- This helps detect objects over a wider range of sizes.

- The head is responsible for producing the object detection results, namely a bounding box and class label for each object.
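To make the difference in outputs concrete, here is a small sketch of what a classifier returns versus what a detection head returns. The class names `BoundingBox` and `Detection`, the corner-format box, the `score` field, and all the numbers are my own assumptions for illustration; real frameworks each use their own formats.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class BoundingBox:
    # Corner format in pixels: (x_min, y_min) is top-left,
    # (x_max, y_max) is bottom-right. This format is an assumption.
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    @property
    def width(self) -> float:
        return self.x_max - self.x_min

    @property
    def height(self) -> float:
        return self.y_max - self.y_min


@dataclass
class Detection:
    box: BoundingBox   # where the object is and how large it is
    label: str         # which class it belongs to
    score: float       # hypothetical confidence value in [0, 1]


# Classification: a single label for the whole image.
classification_result = "dog"

# Detection: one entry per object, each with its own box and label.
# The values below are made up for illustration.
detection_result: List[Detection] = [
    Detection(BoundingBox(30, 40, 210, 300), "dog", 0.92),
    Detection(BoundingBox(250, 60, 400, 280), "cat", 0.85),
]

for det in detection_result:
    print(det.label, det.box.width, det.box.height)
```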

The exact details of each of these networks can be quite complex and vary widely from one type of detection model to the next.

Instead, there are a few key things you will need to pay attention to:

- Which backbone network is used,

- the number and placement of neck attachments to the backbone network,

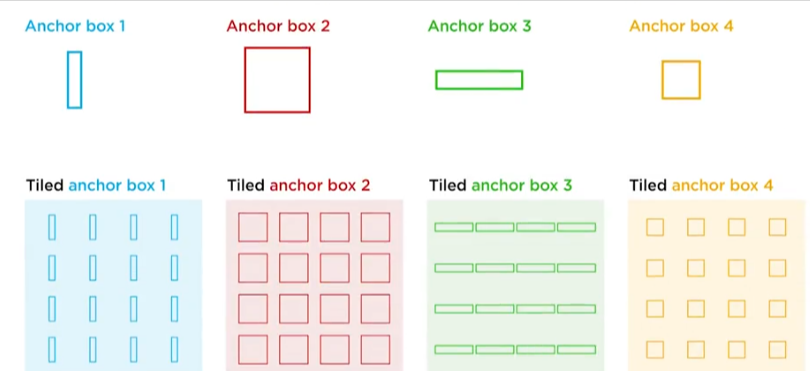

- and whether or not the framework uses anchor boxes.

Note that it is the neck and head architecture that defines a detection framework. Different backbone networks can be used within the same framework.

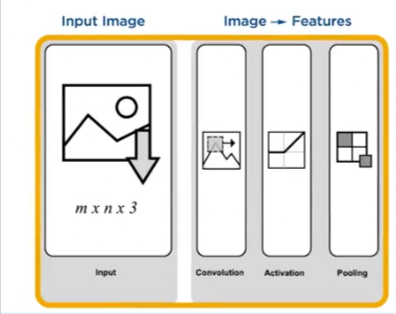

Backbone

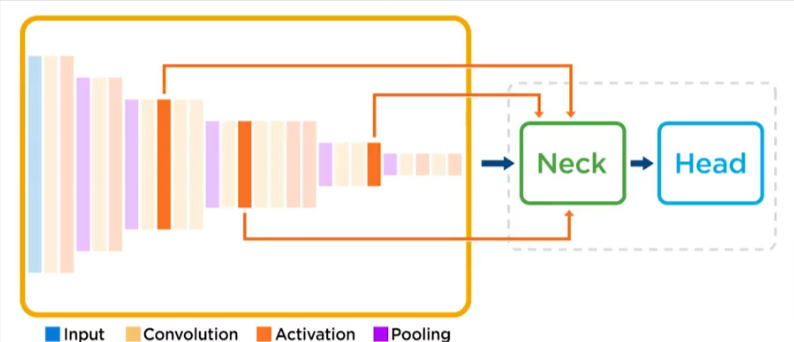

- A typical backbone has many layers in the feature extraction network, including several that downsample.

- For example, pooling layers aggregate subareas of the feature maps.
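As a rough illustration of that aggregation, here is a minimal pure-Python sketch of max pooling, one common kind of pooling. The function name `max_pool_2d` and the sample feature map are my own; frameworks provide optimized versions of this.

```python
def max_pool_2d(feature_map, size=2, stride=2):
    """Max-pool a 2D feature map given as a list of equal-length rows."""
    rows, cols = len(feature_map), len(feature_map[0])
    pooled = []
    for r in range(0, rows - size + 1, stride):
        pooled_row = []
        for c in range(0, cols - size + 1, stride):
            # Collect the size x size subarea and keep only its maximum.
            window = [
                feature_map[r + i][c + j]
                for i in range(size)
                for j in range(size)
            ]
            pooled_row.append(max(window))
        pooled.append(pooled_row)
    return pooled


feature_map = [
    [1, 3, 2, 0],
    [4, 6, 1, 2],
    [7, 2, 9, 5],
    [0, 1, 3, 8],
]
print(max_pool_2d(feature_map))  # [[6, 2], [7, 9]]
```

A 4x4 map becomes 2x2, so each output value now summarizes a 2x2 subarea of the input.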

Neck

- The neck often pulls features from the backbone at multiple scales.

- These locations within the backbone are often referred to as neck or head attachments.

- Due to the downsampling, layers deeper in the backbone extract features based on progressively larger areas of the input.

- Generally, this means that larger objects will be better detected with head attachments deeper in the backbone.

- Similarly, smaller objects will be better detected with head attachments earlier in the backbone.
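The "progressively larger areas" idea can be put into numbers with the usual receptive field recurrence. This is a sketch under my own assumptions: the layer stack below is hypothetical and not taken from any particular backbone, and padding and dilation are ignored.

```python
def receptive_fields(layers):
    """Return the receptive field size after each layer.

    layers: list of (kernel_size, stride) tuples, ordered from input to output.
    """
    rf = 1     # a single input pixel sees only itself
    jump = 1   # distance, in input pixels, between neighbouring outputs
    sizes = []
    for kernel, stride in layers:
        rf += (kernel - 1) * jump   # each layer widens the view
        jump *= stride              # downsampling makes later layers widen faster
        sizes.append(rf)
    return sizes


# Hypothetical stack: 3x3 conv, 2x2 pool, 3x3 conv, 2x2 pool, 3x3 conv
layers = [(3, 1), (2, 2), (3, 1), (2, 2), (3, 1)]
print(receptive_fields(layers))  # [3, 4, 8, 10, 18]
```

The last layer sees an 18x18 patch of the input while the first sees only 3x3, which is why deeper attachments suit larger objects.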

Anchor Boxes

Anchor boxes are predefined boxes of various sizes and aspect ratios that act as references for potential objects in an image. Their sizes and aspect ratios are typically chosen based on the available ground truth data.
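Here is a minimal sketch of how a set of anchors could be generated at one location and compared with a ground truth box. The function names, the scale and aspect ratio values, and the sample box are all assumptions of mine; matching by intersection over union (IoU) is a common approach, but each framework has its own rules.

```python
import math


def make_anchors(cx, cy, scales, aspect_ratios):
    """Anchors centred at (cx, cy) as (x_min, y_min, x_max, y_max) tuples."""
    anchors = []
    for scale in scales:
        for ratio in aspect_ratios:  # ratio = width / height
            # Keep the area at scale * scale while changing the shape.
            w = scale * math.sqrt(ratio)
            h = scale / math.sqrt(ratio)
            anchors.append((cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2))
    return anchors


def iou(a, b):
    """Intersection over union of two corner-format boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    return inter / (area_a + area_b - inter)


anchors = make_anchors(cx=100, cy=100, scales=[64, 128], aspect_ratios=[0.5, 1.0, 2.0])
ground_truth = (60, 70, 140, 130)  # made-up box for illustration

# The anchor that overlaps the ground truth box the most.
best = max(anchors, key=lambda box: iou(box, ground_truth))
print(len(anchors), best)
```

Two scales times three aspect ratios gives six anchors per location.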

CNN

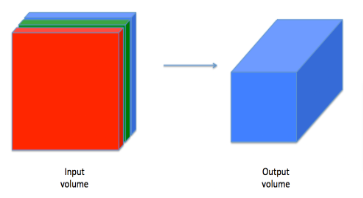

Although programming frameworks make convolutions easy to use, they remain one of the hardest concepts to understand in Deep Learning. A convolution layer transforms an input volume into an output volume of a different size, as shown in the image above.
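How the size changes can be worked out with the standard output-size formula, floor((n + 2p - f) / s) + 1 per spatial dimension. The sketch below assumes a square f x f filter; the function names and the sample sizes are my own.

```python
def conv_output_size(n, f, padding=0, stride=1):
    """Output size along one spatial dimension of a convolution."""
    return (n + 2 * padding - f) // stride + 1


def conv_output_shape(height, width, f, num_filters, padding=0, stride=1):
    """Output volume (height, width, channels) for a square f x f filter."""
    return (
        conv_output_size(height, f, padding, stride),
        conv_output_size(width, f, padding, stride),
        num_filters,  # one output channel per filter
    )


print(conv_output_shape(6, 6, f=3, num_filters=1))               # (4, 4, 1)
print(conv_output_shape(32, 32, f=5, num_filters=8, padding=2))  # (32, 32, 8)
print(conv_output_shape(7, 7, f=3, num_filters=16, stride=2))    # (3, 3, 16)
```

Note that the number of input channels does not appear in the output shape: each filter spans all input channels and produces a single output channel.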

Let’s talk more about it on the Edge Detection page.