Generative AI

Generative AI refers to algorithms and models capable of generating new data instances that resemble the training data.

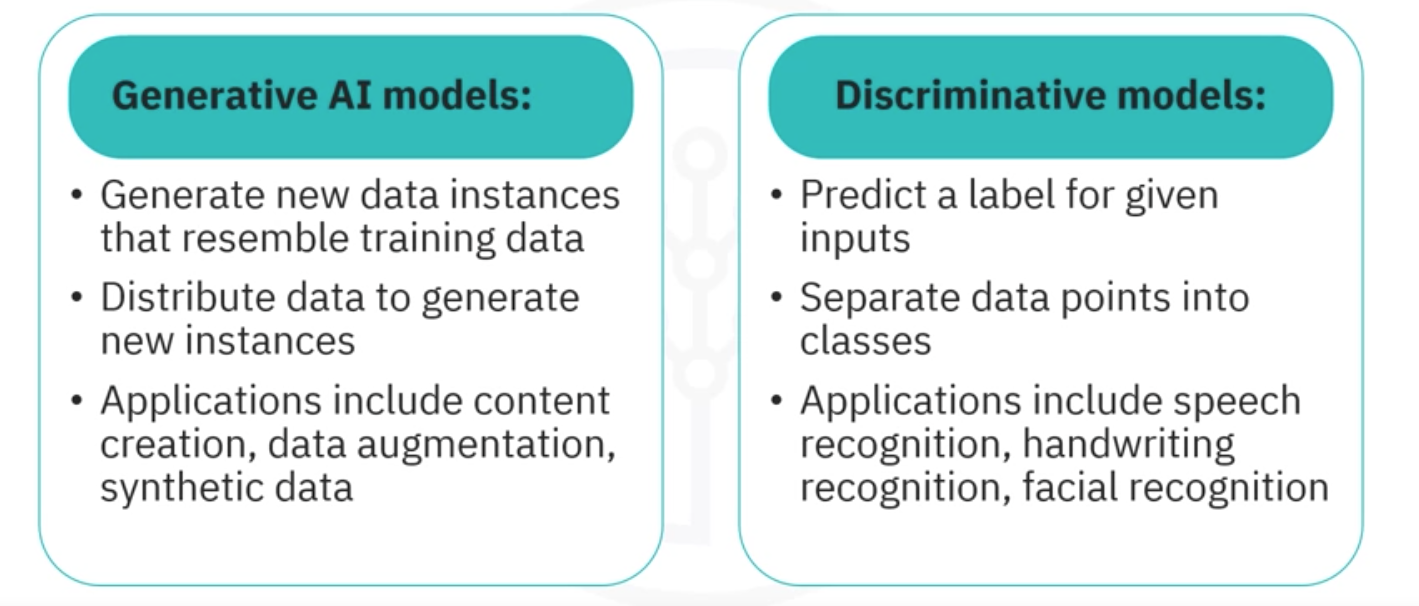

- Unlike discriminative models, which predict a label for given inputs, generative models can create data that has never been seen before.

- Generative models focus on the distribution of data to generate new instances, while discriminative models separate data points into classes.

- Applications of generative AI include content creation (for example, images, music, and text), data augmentation, synthetic data for privacy, and more.

- Some applications of discriminative models are speech recognition, handwriting recognition, facial recognition, and so on.

- Generative AI uses some core models for generating new data.

GAN

Generative adversarial networks (GANs) comprise two competing neural networks. One network, the generator, produces new data instances modeled on a given training data set, while the other, the discriminator, tries to predict whether each instance it sees belongs to the original data set.

As the two networks train against each other, the generator learns to produce new instances that are increasingly hard to distinguish from real data.

VAE

Variational autoencoders (VAEs) are models that learn to encode data into a compressed representation and then decode it back. They are often used for generating new data points.

Transformers

Transformers, initially pivotal in advancing natural language processing, have extended their capabilities to generative AI, enabling the creation of sophisticated text, image, and mixed-media content.

Their self-attention mechanisms allow for understanding and generating complex data patterns, revolutionizing creative and generative tasks across various domains.
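
To make the self-attention idea more concrete, here is a minimal pure-Python sketch of scaled dot-product self-attention. It is a simplification under stated assumptions: real transformers apply learned query, key, and value projections and use multiple heads, while this sketch uses the token vectors themselves for all three roles. The function names are illustrative and not taken from any library.

```python
import math

def softmax(scores):
    """Convert raw scores into weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(tokens):
    """Scaled dot-product self-attention with identity projections.

    tokens: list of equal-length vectors (lists of floats).
    Assumption: queries, keys, and values are the tokens themselves.
    """
    dim = len(tokens[0])
    outputs = []
    for query in tokens:
        # Similarity of this token to every token, scaled by sqrt(dim).
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
                  for key in tokens]
        weights = softmax(scores)
        # Each output is the attention-weighted average of all token vectors.
        outputs.append([sum(w * value[i] for w, value in zip(weights, tokens))
                        for i in range(dim)])
    return outputs
```

Each output vector mixes information from every token in the sequence, weighted by similarity, which is what lets the model relate distant parts of the input.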

Generative AI Uses

Data engineers are leveraging generative AI for their various tasks.

- Their core responsibilities include maintenance of data infrastructure. This involves designing, constructing, and maintaining robust, scalable data architectures. The data architectures include databases, data warehouses, and data lakes that support the requirements of business applications and data analytics.

- Development of extract, transform, load (ETL) processes. This comprises developing efficient ETL pipelines that gather data from various sources, transform the data into a usable state, and load it into accessible structures. Data engineers are also responsible for the shift towards extract, load, transform (ELT) processes with cloud computing.

- Compliance with data quality and governance requirements. This includes implementing measures to ensure data accuracy, consistency, and reliability. It involves setting up data governance frameworks, quality control measures, and data cataloging practices.

- Generative AI offers various capabilities that data engineers can use to streamline their tasks.
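
The ETL responsibility listed above can be illustrated with a small sketch that uses only the Python standard library. The CSV content, the `orders` table, and the cleaning rules are hypothetical and chosen for illustration; a production pipeline would read from real sources and load into a warehouse rather than an in-memory SQLite database.

```python
import csv
import io
import sqlite3

# Hypothetical raw input; a string stands in for a source file.
RAW_CSV = """order_id,amount,country
1,19.99,us
2,,de
3,5.00,US
"""

def extract(text):
    """Extract: read rows from a CSV source."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transform: drop rows with no amount, cast types, normalize country codes."""
    cleaned = []
    for row in rows:
        if not row["amount"]:
            continue
        cleaned.append((int(row["order_id"]), float(row["amount"]),
                        row["country"].upper()))
    return cleaned

def load(rows, conn):
    """Load: write the cleaned rows into a queryable table."""
    conn.execute("CREATE TABLE IF NOT EXISTS orders "
                 "(order_id INTEGER, amount REAL, country TEXT)")
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()

conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)
```

In an ELT variant, the raw rows would be loaded first and the transformation would run inside the target system.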

Features

- Synthetic data is artificially generated data that mimics real-world data, enabling the training of machine learning models where the actual data is scarce, sensitive, or exhibits bias.

- By creating diverse and representative data sets, synthetic data generation enhances model robustness, reduces bias, and improves fairness in AI applications.

- This approach addresses data privacy concerns, allowing researchers and developers to bypass the limitations of using sensitive or regulated data.

- Generative AI models such as GANs are pivotal in producing high-quality synthetic data that closely resembles the original data sets in statistical properties but does not carry the same privacy implications.

- Data anonymization involves modifying personal data to protect individual privacy while retaining the data’s usefulness for analysis. Generative AI enables the creation of anonymized datasets that preserve the statistical characteristics of the original data, supporting compliance with data protection laws like the General Data Protection Regulation (GDPR). This technique supports the ethical use of data by keeping individuals’ identities undisclosed, facilitating safer data sharing and collaboration across organizations. Anonymized data generated through AI can be used to train machine learning models, conduct research, and develop applications without compromising personal privacy.

- Improving data quality is crucial for accurate analytics and reliable machine-learning model performance. Generative AI plays a key role in identifying and correcting data anomalies and inconsistencies. Through pattern recognition and learning the normal distributions of data, generative models can flag outliers and suggest corrections, significantly enhancing data reliability. This process supports a more automated and precise approach to maintaining high-quality data standards, reducing the time and resources traditionally required for data cleaning and preprocessing.

- Data engineers use generative AI to augment existing datasets, especially in domains like computer vision and natural language processing, to improve the performance of predictive models. Generative AI can simulate various scenarios and data, aiding in risk management, strategic planning, and decision-making processes by providing insights into potential outcomes and their likelihoods.

- Data engineers can use generative AI to create realistic virtual environments for testing and development, generating textual content for chatbots, or producing synthetic imagery for training computer vision models.
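
As a rough illustration of the synthetic data idea in this list, the sketch below fits a simple Gaussian to one numeric column and samples new values from it. This is a deliberately simple stand-in, not a GAN: it only preserves the mean and standard deviation of a single column, and it offers no formal privacy guarantee. The `real_ages` values are made up for the example.

```python
import random
import statistics

def fit_gaussian(values):
    """Learn the mean and standard deviation of a numeric column."""
    return statistics.mean(values), statistics.stdev(values)

def sample_synthetic(mean, stdev, n, seed=0):
    """Draw n synthetic values from the fitted distribution."""
    rng = random.Random(seed)  # seeded so the sketch is reproducible
    return [rng.gauss(mean, stdev) for _ in range(n)]

real_ages = [23, 35, 41, 29, 52, 38, 47, 31]  # hypothetical sensitive column
mean, stdev = fit_gaussian(real_ages)
synthetic_ages = sample_synthetic(mean, stdev, n=1000)
```

A generative model plays the same role as `fit_gaussian` here, but it can capture relationships across many columns instead of one summary per column.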

Tools

TensorFlow and PyTorch

- They are open-source libraries for deep learning, offering extensive support for generative AI models including GANs, VAEs, and autoencoders.

- They are flexible with extensive community support and application in research and production environments.

Hugging Face transformers

- This library specializes in transformer models and has expanded to support generative tasks like text generation, image generation, and more.

- It offers a user-friendly interface and pre-trained models that integrate easily with TensorFlow and PyTorch.

Jupyter and Google Colab

Jupyter Notebooks and Google Colab are development environments that are popular among data engineers for exploring data, prototyping models, and sharing results. They offer a mix of code, visual output, and documentation in a single interface, facilitating collaboration and learning.

You can use cloud platforms such as AWS, GCP, Azure, and IBM Cloud for scalable AI services and resources. You can leverage development environments like Jupyter Notebooks and Google Colab for interactive prototyping.

RunwayML offers easy access to generative AI applications.

Use Cases

Data Source Identification and Assessment - Data Collection

- Automated data discovery:

GenAI models were trained on existing data and documentation to automatically identify and categorize potential data sources across the organization, saving time and effort compared to manual discovery.

Data Pipeline Development - Data Ingestion

- Code generation for data pipelines:

Based on the identified data sources and formats, GenAI models were used to generate code snippets for ETL pipelines, reducing development time and minimizing errors compared to manual coding.

Data Quality Assurance

- Anomaly detection and correction:

GenAI models were trained on clean data samples to identify and flag data inconsistencies and anomalies within the incoming data stream, allowing for automated data cleansing and correction.
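
The idea of learning from clean samples and then flagging unusual incoming values can be sketched with a simple statistical baseline. This is an assumption-laden stand-in for a trained GenAI model: it learns only a mean and standard deviation and flags values by z-score. The readings and the threshold of 3.0 are illustrative.

```python
import statistics

def fit_baseline(clean_values):
    """Learn what 'normal' looks like from a clean sample."""
    return statistics.mean(clean_values), statistics.stdev(clean_values)

def flag_anomalies(stream, baseline, threshold=3.0):
    """Return (index, value) pairs whose z-score exceeds the threshold."""
    mean, stdev = baseline
    return [(i, v) for i, v in enumerate(stream)
            if abs(v - mean) / stdev > threshold]

clean_sample = [100, 102, 98, 101, 99, 103, 97, 100]  # hypothetical readings
baseline = fit_baseline(clean_sample)
flagged = flag_anomalies([101, 99, 250, 100, -40], baseline)
# flagged == [(2, 250), (4, -40)]
```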

Data Warehousing and Storage

- Schema optimization & prediction:

GenAI analyzed data usage patterns and predicted future data access needs to automatically recommend and optimize the data warehouse schema for efficient storage and retrieval.

Data Access and Security

- Synthetic data generation:

For providing secure access to sensitive data for analysis purposes, GenAI generated realistic synthetic data that preserved data distributions and relationships without revealing real user information.

- Data access control automation:

GenAI models assisted in defining and implementing user access controls based on roles and data sensitivity, ensuring data security and compliance with regulations.

Data processing

- Automated data cleansing:

Train GenAI models on clean data samples to automatically identify and correct inconsistencies and anomalies within the data stream. This can involve tasks like imputing missing values, correcting typos, and identifying and handling outliers, significantly reducing the manual effort required for data cleaning.
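
Two of the cleansing tasks named above, imputing missing values and handling outliers, can be sketched in a few lines. The rules here (median imputation and clamping to a fixed range) are common simple choices assumed for illustration; a GenAI-assisted workflow might propose different rules per column. The `raw_prices` column and the range limits are hypothetical.

```python
import statistics

def impute_missing(values):
    """Replace None entries with the median of the observed values."""
    observed = [v for v in values if v is not None]
    median = statistics.median(observed)
    return [median if v is None else v for v in values]

def clip_outliers(values, lower, upper):
    """Clamp values to a plausible range instead of dropping rows."""
    return [min(max(v, lower), upper) for v in values]

raw_prices = [10.0, None, 12.0, 11.0, 950.0, None]  # hypothetical column
cleaned = clip_outliers(impute_missing(raw_prices), lower=0.0, upper=100.0)
# cleaned == [10.0, 11.5, 12.0, 11.0, 100.0, 11.5]
```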

Data integration

- Schema alignment:

Leverage GenAI to analyze and suggest mappings between different data formats from diverse sources, facilitating seamless integration. This can be particularly useful when dealing with disparate data structures and formats.

- Synthetic data generation:

Generate synthetic data that reflects the structure and relationships of real data, facilitating integration while protecting sensitive information. This generation can be crucial for enabling data sharing and collaboration while adhering to data privacy regulations.
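
For the schema alignment task in this section, a baseline that needs no model is plain name similarity. The sketch below uses `difflib.get_close_matches` from the Python standard library; the column names and the 0.6 cutoff are illustrative assumptions.

```python
import difflib

def suggest_mappings(source_columns, target_columns, cutoff=0.6):
    """Suggest a target column for each source column by name similarity."""
    # Compare names case-insensitively and without underscores.
    normalized_targets = {t.lower().replace("_", ""): t for t in target_columns}
    suggestions = {}
    for column in source_columns:
        key = column.lower().replace("_", "")
        matches = difflib.get_close_matches(
            key, list(normalized_targets), n=1, cutoff=cutoff)
        suggestions[column] = normalized_targets[matches[0]] if matches else None
    return suggestions

mappings = suggest_mappings(
    ["CustomerName", "email_addr", "zip"],
    ["customer_name", "email_address", "postal_code"],
)
# "zip" gets no suggestion: it means the same as "postal_code"
# but the two names share almost no characters.
```

The unmatched `zip` column shows where string similarity falls short and where a model that understands meaning could suggest the mapping instead.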

Data modeling

- Feature engineering suggestion:

Employ GenAI to analyze data and suggest potential features for inclusion in the data model. This analysis can involve identifying relationships between existing features, recommending feature transformations, and suggesting entirely new features based on the data, potentially improving model performance and accuracy.

Data transformation

- Code generation for complex transformations:

Generate code snippets for complex data transformations based on user-defined rules or patterns learned from existing data. The code snippets can automate tasks like data normalization, aggregation, and feature creation, freeing up data engineers to focus on more complex data manipulation tasks.
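
The kind of snippet such a request might produce for normalization and aggregation could look like the following sketch. It is written by hand for illustration, not generated output, and the `sales` rows and function names are hypothetical.

```python
from collections import defaultdict

def min_max_normalize(values):
    """Rescale values linearly to the range [0, 1]."""
    low, high = min(values), max(values)
    if high == low:
        return [0.0 for _ in values]  # avoid division by zero on constant columns
    return [(v - low) / (high - low) for v in values]

def total_by_key(rows, key, field):
    """Aggregate: sum `field` for each distinct value of `key`."""
    totals = defaultdict(float)
    for row in rows:
        totals[row[key]] += row[field]
    return dict(totals)

sales = [  # hypothetical rows
    {"region": "north", "amount": 20.0},
    {"region": "south", "amount": 50.0},
    {"region": "north", "amount": 30.0},
]
normalized = min_max_normalize([r["amount"] for r in sales])
totals = total_by_key(sales, "region", "amount")
# totals == {"north": 50.0, "south": 50.0}
```

Generated transformation code should be reviewed and tested like any other code before it runs in a pipeline.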

Data analysis

- Data exploration and pattern discovery:

Train GenAI models to identify hidden patterns and relationships within the data, suggesting potential avenues for further analysis. This data exploration can involve tasks like anomaly detection, identifying correlations, and uncovering clusters, providing valuable insights that might be missed through traditional analysis methods.

- Automated report generation:

Generate preliminary reports with key insights based on predefined templates and data analysis results. The templates can automate the initial reporting stage, allowing data engineers to focus on refining the analysis and providing deeper interpretation of the findings.

Data visualization

- Automated chart suggestion:

Based on the data and analysis, suggest appropriate data visualization formats (for example, bar charts, scatter plots, heatmaps) to effectively communicate insights. The charts can help non-technical stakeholders understand complex data and make informed decisions.

Data governance and security

- Synthetic data generation:

Generate synthetic data for user access and analysis, protecting sensitive information and adhering to data privacy regulations. The synthetic data allows broader access to data for analytics and decision-making while mitigating privacy risks.

- Automated data access control recommendation:

Use GenAI to analyze user roles and data sensitivity, suggesting appropriate access control policies. This analysis streamlines data governance processes and ensures that data is only accessible to authorized users based on their specific needs.
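
An access control policy based on roles and data sensitivity, as described above, can be expressed as a small lookup. The roles, sensitivity levels, and clearance assignments below are hypothetical; in practice a suggested policy would be reviewed by a person and enforced by the data platform, not by application code like this.

```python
# Hypothetical sensitivity levels, ordered from least to most sensitive.
SENSITIVITY_RANK = {"public": 0, "internal": 1, "confidential": 2}

# Hypothetical mapping from role to the highest level it may read.
ROLE_CLEARANCE = {
    "intern": "public",
    "analyst": "internal",
    "data_engineer": "confidential",
}

def can_access(role, dataset_sensitivity):
    """Allow access when the role's clearance covers the dataset's sensitivity."""
    clearance = ROLE_CLEARANCE.get(role, "public")  # unknown roles get the lowest clearance
    return SENSITIVITY_RANK[clearance] >= SENSITIVITY_RANK[dataset_sensitivity]
```

For example, `can_access("analyst", "confidential")` returns `False`, while `can_access("data_engineer", "confidential")` returns `True`.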

Monitoring and optimization

- Anomaly detection in data pipelines:

Train GenAI models to monitor data pipelines and identify potential issues like errors or delays, facilitating proactive maintenance. This maintenance ensures the smooth flow of data and prevents disruptions in downstream processes.

- Performance optimization suggestions:

Analyze data processing and storage workflows with GenAI and recommend optimizations for faster and more efficient data handling. This data handling can involve identifying bottlenecks, suggesting alternative algorithms, and optimizing resource allocation, ultimately improving the overall efficiency of the data engineering process.
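
Detecting delays in a pipeline, as mentioned in this section, can start from a simple comparison of recent run durations against history. The sketch below is a statistical baseline assumed for illustration, not a trained model; the run identifiers, durations, and tolerance are made up.

```python
import statistics

def find_slow_runs(history_seconds, recent_runs, tolerance=3.0):
    """Flag recent runs that took far longer than the historical norm.

    history_seconds: durations of past healthy runs.
    recent_runs: list of (run_id, duration_seconds) tuples.
    """
    typical = statistics.median(history_seconds)
    spread = statistics.stdev(history_seconds)
    limit = typical + tolerance * spread
    return [run_id for run_id, duration in recent_runs if duration > limit]

history = [60, 62, 58, 61, 59]  # hypothetical durations of healthy runs
slow = find_slow_runs(
    history, [("run-101", 61), ("run-102", 140), ("run-103", 63)])
# slow == ["run-102"]
```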

Considerations for GenAI

- Maintain transparency and fairness in your AI system

- Ensure accountability in the deployment of an AI chatbot

- Enhance the reliability of your AI model to ensure accurate product descriptions

Biases

You are developing a generative AI system that creates personalized content recommendations for users. The system seems to consistently recommend content that aligns with certain cultural and demographic biases.

Users from diverse backgrounds are expressing concern about the lack of transparency and fairness in the recommendations.

How do you maintain transparency and fairness in your AI system?

Consider steps like conducting a bias assessment, enhancing diversity in training data, implementing explainability features, and establishing a user feedback loop to ensure fairness and transparency in your AI system.

- Conduct a thorough bias assessment to identify and understand the biases present in the training data and algorithms.

- Use specialized tools or metrics to measure and quantify biases in content recommendations.

- Enhance the diversity of your training data by including a broader range of cultural, demographic, and user behavior data.

- Ensure that the training data reflects the diversity of your user base to reduce biases.

- Implement explainability features to provide users with insights into why specific recommendations are made.

- Offer transparency by showing the key factors and attributes influencing the recommendations.

- Establish a user feedback loop where users can report biased recommendations or provide feedback on content relevance.

- Regularly analyze this feedback to iteratively improve the system’s fairness.

Holistic AI Library: This open-source library offers a range of metrics and mitigation strategies for various AI tasks, including content recommendation. It analyzes data for bias across different dimensions and provides visualizations for clear understanding.
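
To show what measuring bias in recommendations can mean in practice, the sketch below computes how much exposure each content group receives and the ratio between the least and most exposed groups. It is hand-written for illustration and does not use the Holistic AI library's API; the item-to-group mapping and the metric names are assumptions.

```python
from collections import Counter

def exposure_share(recommendations, group_of):
    """Fraction of recommended items that belong to each content group."""
    counts = Counter(group_of[item] for item in recommendations)
    total = sum(counts.values())
    return {group: count / total for group, count in counts.items()}

def disparity_ratio(shares):
    """Ratio of the least-exposed to the most-exposed group (1.0 means equal)."""
    return min(shares.values()) / max(shares.values())

# Hypothetical mapping from item to the group its content represents.
group_of = {"a": "region_x", "b": "region_x", "c": "region_y", "d": "region_x"}
shares = exposure_share(["a", "b", "c", "d", "a", "b", "a", "d"], group_of)
ratio = disparity_ratio(shares)
# shares == {"region_x": 0.875, "region_y": 0.125}
```

A ratio far below 1.0 does not prove unfairness on its own, but it is a signal worth comparing against the makeup of the catalog and the user base.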

Accountability

Your company has deployed a chatbot powered by generative AI to interact with customers. The chatbot occasionally generates responses that are inappropriate or offensive, leading to customer dissatisfaction. As the AI developer, how do you take responsibility for these incidents and ensure accountability in the deployment of the AI chatbot?

To address responsibility and accountability, analyze errors, respond swiftly, continuously monitor for inappropriate responses, and communicate openly with stakeholders about corrective actions taken to improve the chatbot’s behavior.

- Conduct a detailed analysis of the inappropriate responses to identify patterns and root causes.

- Determine whether the issues stem from biased training data, algorithmic limitations, or other factors.

- Implement a mechanism to quickly identify and rectify inappropriate responses by updating the chatbot’s training data or fine-tuning the model.

- Communicate openly with affected customers, acknowledge the issue, and assure them of prompt corrective actions.

- Set up continuous monitoring systems to detect and flag inappropriate responses in real-time.

- Implement alerts or human-in-the-loop mechanisms to intervene when the system generates potentially harmful content.

- Clearly communicate the steps taken to address the issue to both internal stakeholders and customers.

- Emphasize the commitment to continuous improvement and the responsible use of AI technology.
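
The monitoring and human-in-the-loop steps above can be sketched as a gate that holds suspicious responses for review before they reach a customer. The blocklist approach is a deliberately crude assumption for illustration; real deployments typically use a trained moderation classifier. The terms, function names, and `flag_log` are hypothetical.

```python
BLOCKED_TERMS = {"idiot", "stupid"}  # hypothetical blocklist

def review_response(text, blocked_terms=BLOCKED_TERMS):
    """Decide whether a chatbot response can be sent or needs human review."""
    words = {word.strip(".,!?").lower() for word in text.split()}
    hits = sorted(words & blocked_terms)
    if hits:
        return {"action": "hold_for_review", "reasons": hits}
    return {"action": "send", "reasons": []}

flag_log = []  # stands in for a monitoring or alerting system

def handle(text):
    """Log held responses so they can be analyzed for root causes later."""
    decision = review_response(text)
    if decision["action"] == "hold_for_review":
        flag_log.append((text, decision["reasons"]))
    return decision["action"]
```

The log of held responses doubles as the input for the root-cause analysis described in the first steps of the list.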

Reliability

Your company has developed a generative AI model that autonomously generates product descriptions for an e-commerce platform. However, users have reported instances where the generated descriptions contain inaccurate information, leading to customer confusion and dissatisfaction. How do you enhance the reliability of your AI model to ensure accurate product descriptions?

To improve reliability, focus on quality assurance testing, use domain-specific training data, adopt an iterative model training approach, and integrate user feedback to iteratively correct errors and enhance the accuracy of the AI-generated product descriptions.

Consider the following actions:

- Implement rigorous quality assurance testing to evaluate the accuracy of the generated product descriptions.

- Create a comprehensive testing data set that covers a wide range of products and scenarios to identify and address inaccuracies.

- Ensure that the AI model is trained on a diverse and extensive data set specific to the e-commerce domain.

- Include product information from reputable sources to enhance the model’s understanding of accurate product details.

- Implement an iterative training approach to continuously update and improve the model based on user feedback and evolving product data.

- Regularly retrain the model to adapt to changes in the product catalog and user preferences.

- Encourage users to provide feedback on inaccurate product descriptions.

- Use this feedback to fine-tune the model, correct errors, and improve the overall reliability of the AI-generated content.
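
One simple form of the quality assurance testing described above is to check each generated description against the catalog record it was generated from. The sketch below uses a substring check, which is an assumption made for brevity: it will miss paraphrases and cannot detect extra claims that are not in the catalog. The product record and descriptions are hypothetical.

```python
def find_inaccuracies(description, product):
    """Return catalog attributes whose values are missing from the description."""
    text = description.lower()
    return [name for name, value in product.items()
            if str(value).lower() not in text]

# Hypothetical catalog record and two generated descriptions.
product = {"color": "navy blue", "material": "cotton", "weight": "250 g"}
good = "A navy blue cotton T-shirt weighing 250 g."
bad = "A black cotton T-shirt weighing 250 g."

good_issues = find_inaccuracies(good, product)  # []
bad_issues = find_inaccuracies(bad, product)    # ["color"]
```

Running a check like this over a broad test set gives a measurable error rate to track across retraining rounds.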

Important Prompts

Architecture design

| Task | Prompt |
|---|---|
| Generate data architecture design for a hospital network | Create a detailed data architecture design for a hospital network. |
| Add data modeling components for patient data | Create additional data modeling components for patient demographics, medical history, diagnosis, treatment, and quality standards. |
| Design to implement robust access controls, data privacy, and audit mechanisms | Create a design to include implementing robust access controls, encryption, and auditing mechanisms to protect patient data from unauthorized access or breaches. |
| Detailed data architecture design for a retail company’s customer relationship | Create a detailed data architecture design for a retail company’s customer relationship management system. |
| Generate unified customer profile | Create a unified customer profile with attributes such as demographics, purchase history, browsing behavior, preferences, and interactions. |

Database, data warehouse schema design

| Task | Prompt |
|---|---|
| Generate data warehouse schema for a fashion retail store | Create a detailed data warehouse schema for a fashion retail store that should contain: i) Employee data ii) Sales data iii) Inventory data iv) Customer profiles v) Seller information |

Data anonymization

| Task | Prompt |
|---|---|
| Anonymize names | Replace the entries under the ‘Name’ attribute of a data set with pseudonyms like “User_i” using Python. |
| Redact email addresses | Write Python code to redact the entries under the attribute ‘Email’ in a data frame so that only the usernames and service provider’s first and last characters are visible. All remaining characters are replaced with the character ‘*’. |
| Generalize ages to decades | Write Python code to generalize the entries under the attribute ‘Age’ of a data frame such that the exact number is converted into a generic range. |
| Add noise to contact numbers | Write Python code to add random noise of 5-digit length to a numerical attribute ‘Contact Number’ in a data frame with all ten-digit length values. |
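
For orientation, the sketch below shows one plausible shape of the code these four prompts ask for. It works on plain Python values rather than a data frame to stay dependency-free, and it rests on assumptions: the email redaction keeps the first and last character of both the username and the provider name and leaves the domain suffix visible, which is only one reading of the prompt. All function names are illustrative.

```python
import random

def pseudonymize_names(names):
    """Replace each name with User_1, User_2, ... in row order."""
    return [f"User_{i}" for i, _ in enumerate(names, start=1)]

def mask_middle(text):
    """Keep the first and last characters; replace the rest with '*'."""
    if len(text) <= 2:
        return text
    return text[0] + "*" * (len(text) - 2) + text[-1]

def redact_email(email):
    """Mask the username and the provider name; keep the domain suffix."""
    username, domain = email.split("@", 1)
    provider, _, suffix = domain.partition(".")
    masked = f"{mask_middle(username)}@{mask_middle(provider)}"
    return f"{masked}.{suffix}" if suffix else masked

def generalize_age(age):
    """Map an exact age to its decade, for example 37 -> '30-39'."""
    low = (age // 10) * 10
    return f"{low}-{low + 9}"

def add_noise(number, rng):
    """Add a random five-digit offset to a ten-digit number."""
    return number + rng.randint(10000, 99999)
```

For example, `redact_email("alice@example.com")` returns `"a***e@e*****e.com"` and `generalize_age(37)` returns `"30-39"`. Note that `add_noise` can push a value close to the ten-digit maximum past ten digits, so a real implementation would need to handle that case.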

Infrastructure setup

| Task | Prompt |
|---|---|
| Generate the e-commerce platform’s data infrastructure | Write Python code that performs the following tasks: Create the steps for the e-commerce platform to enhance its data infrastructure to handle the increase in traffic. Suggest the improvements in 1. Scalable storage 2. Better processing capabilities 3. Real-time analytics |
| Generate data lake infrastructure for a healthcare platform | Write Python code that performs the following tasks: Create the steps for a healthcare company to set up a data lake infrastructure that is capable of the following: 1. Big data management 2. Data ingestion from various sources 3. Data transformation 4. Data security and compliance with regulatory guidelines |
| Generate infrastructure to detect real-time fraudulent transactions for a financial firm | Write Python code that performs the following tasks: Create the steps for a financial firm to set up its infrastructure if they want to detect fraudulent transactions in real time. Suggest specifics in terms of: 1. Computing machinery 2. Feature engineering pipeline 3. Predictive modeling pipeline 4. Model deployment and monitoring |