~AI\computer_vision\od_projects\opencv_learn>venv_opencv\Scripts\activate

(venv_opencv) ~AI\computer_vision\od_projects\opencv_learn>jupyter notebook

Object Tracking - GOTURN

We will track the location of an object and predict where it will be in the subsequent frame.

Setup

# Install opencv-contrib-python along with opencv to access KCF. I also had to uninstall opencv-python and reinstall it

(venv_opencv) D:\AI\computer_vision\od_projects\opencv_learn>python -m pip install opencv-contrib-python

# Import modules

import os
import sys
import cv2
import numpy as np
import matplotlib.pyplot as plt

from zipfile import ZipFile
from urllib.request import urlretrieve
from matplotlib.animation import FuncAnimation
from IPython.display import YouTubeVideo, display, HTML
from base64 import b64encode

%matplotlib inline

# Download Assets

def download_and_unzip(url, save_path):
    print("Downloading and extracting assets....", end="")

    # Download the ZIP file using the urllib package.
    urlretrieve(url, save_path)

    try:
        # Extract the ZIP file contents into the same directory.
        with ZipFile(save_path) as z:
            z.extractall(os.path.split(save_path)[0])

        print("Done")

    except Exception as e:
        print("\nInvalid file.", e)

URL = r"https://www.dropbox.com/s/ld535c8e0vueq6x/opencv_bootcamp_assets_NB11.zip?dl=1"

asset_zip_path = os.path.join(os.getcwd(), "opencv_bootcamp_assets_NB11.zip")

# Download only if the asset ZIP does not exist yet.
if not os.path.exists(asset_zip_path):
    download_and_unzip(URL, asset_zip_path)
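GOTURN is the one tracker here that needs pretrained model files on disk before it can be created. The sketch below checks for them after the download step. The two file names are an assumption: they are the defaults OpenCV's GOTURN tracker looks for in the working directory, so verify them against what your extracted archive actually contains. The helper name `missing_files` is hypothetical.

```python
import os

# Assumed file names: the defaults OpenCV's GOTURN tracker looks for.
# Verify them against the contents of your extracted archive.
GOTURN_FILES = ("goturn.prototxt", "goturn.caffemodel")

def missing_files(names, directory="."):
    """Return the names that do not exist as files inside `directory`."""
    return [n for n in names if not os.path.isfile(os.path.join(directory, n))]

missing = missing_files(GOTURN_FILES, os.getcwd())
if missing:
    print("Missing model files:", ", ".join(missing))
else:
    print("All GOTURN model files found.")
```

Running this before creating the tracker turns a cryptic model-loading error into a plain message about which file is absent.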

Tracker Class in OpenCV

- BOOSTING

- MIL

- KCF

- CRST

- TLD

- Tends to recover from occulusions

- MEDIANFLOW

- Good for predictable slow motion

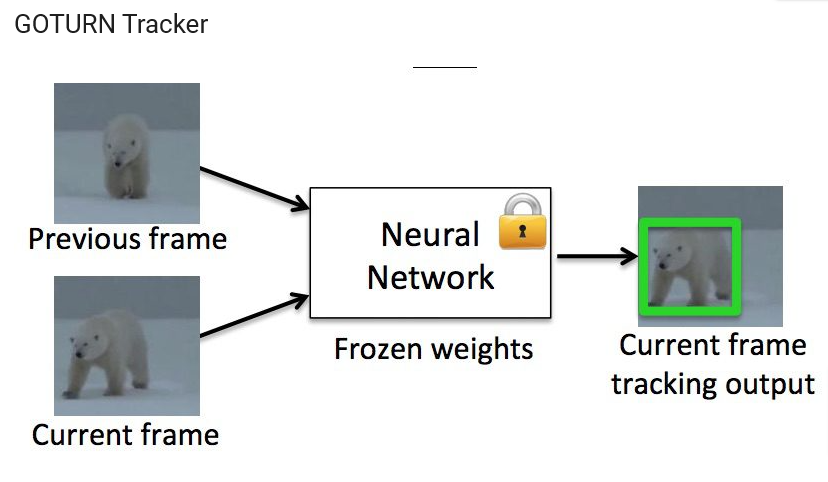

- GOTURN

Deep Learning based

Most Accurate

- MOSSE

- Fastest
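Each name in the list corresponds to a class inside the `cv2` module, some of them under `cv2.legacy`. As a minimal sketch, the lookup can be written as a table instead of a chain of conditions. The dotted paths mirror the ones used in this post and are an assumption about the installed opencv-contrib-python build; other versions may place a class elsewhere. The helper name `create_tracker` is hypothetical, and it takes the module as a parameter so the lookup can be tried without OpenCV installed.

```python
# Dotted attribute path of each tracker class, relative to the cv2 module.
# Assumption: this layout matches the opencv-contrib-python build used here;
# other versions may move classes between cv2 and cv2.legacy.
TRACKER_PATHS = {
    "BOOSTING": "legacy.TrackerBoosting",
    "MIL": "legacy.TrackerMIL",
    "KCF": "TrackerKCF",
    "CSRT": "TrackerCSRT",
    "TLD": "legacy.TrackerTLD",
    "MEDIANFLOW": "legacy.TrackerMedianFlow",
    "GOTURN": "TrackerGOTURN",
    "MOSSE": "legacy.TrackerMOSSE",
}

def create_tracker(cv2_module, tracker_type):
    """Walk the dotted path on `cv2_module` and call the class's create()."""
    try:
        path = TRACKER_PATHS[tracker_type]
    except KeyError:
        raise ValueError(f"Unknown tracker type: {tracker_type}") from None
    obj = cv2_module
    for part in path.split("."):
        obj = getattr(obj, part)
    return obj.create()
```

In the notebook this would be called as `tracker = create_tracker(cv2, "KCF")`. An unknown name raises a `ValueError` instead of silently falling through to a default tracker.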

video = YouTubeVideo("XkJCvtCRdVM", width=1024, height=640)

display(video)

Note

- As the car comes down the first turn it is facing the camera, so it’s pretty easy to extract the edges of the vehicle and draw the identifying box

- As the car moves right in front of the camera it becomes even easier to distinguish it from its surroundings

- Remember the model will take its current position and project where it will be next so it can draw the bounding box

- As the car goes past the camera and starts taking the next turn, the surface area visible to the camera becomes smaller and the background becomes brighter

- It becomes harder for the model to keep up as the car continues to move through the turn

- As you will see in the produced videos, some models are better than others at predicting the bounding box

- I will focus on GOTURN and you’ll see why
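One common way to put a number on "better at predicting the bounding box" is intersection over union (IoU) between the tracker's box and a hand-labeled reference box. This post compares the trackers by eye only, so the sketch below is an illustration and not part of the notebook. The boxes in the example are made up, and both use the same `(x, y, w, h)` layout as the rest of the code.

```python
def iou(box_a, box_b):
    """Intersection over union of two (x, y, w, h) boxes."""
    ax, ay, aw, ah = box_a
    bx, by, bw, bh = box_b
    # Width and height of the overlapping region (zero if the boxes are disjoint).
    inter_w = max(0, min(ax + aw, bx + bw) - max(ax, bx))
    inter_h = max(0, min(ay + ah, by + bh) - max(ay, by))
    inter = inter_w * inter_h
    union = aw * ah + bw * bh - inter
    return inter / union if union > 0 else 0.0

# Two 10x10 boxes offset by 5 pixels overlap in a 5x5 square: 25 / 175.
print(iou((0, 0, 10, 10), (5, 5, 10, 10)))
```

A value of 1.0 means the boxes coincide and 0.0 means they do not overlap at all.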

Read Video

video_input_file_name = "race_car.mp4"

def drawRectangle(frame, bbox):
    # bbox is (x, y, width, height); convert it to two corner points
    p1 = (int(bbox[0]), int(bbox[1]))
    p2 = (int(bbox[0] + bbox[2]), int(bbox[1] + bbox[3]))
    cv2.rectangle(frame, p1, p2, (255, 0, 0), 2, 1)

def displayRectangle(frame, bbox):
    plt.figure(figsize=(20, 10))
    frameCopy = frame.copy()
    drawRectangle(frameCopy, bbox)
    # OpenCV frames are BGR; matplotlib expects RGB
    frameCopy = cv2.cvtColor(frameCopy, cv2.COLOR_BGR2RGB)
    plt.imshow(frameCopy)
    plt.axis("off")

def drawText(frame, txt, location, color=(50, 170, 50)):
    cv2.putText(frame, txt, location, cv2.FONT_HERSHEY_SIMPLEX, 1, color, 3)

Create Tracker Instance

I’ve listed more code further down in case you have a setup where you want to try more than one tracker type

# Since we are only running GOTURN

tracker_type = "GOTURN"

tracker = cv2.TrackerGOTURN.create()

# Set up tracker (SKIP THIS FOR THIS EXAMPLE)

tracker_types = [
    "BOOSTING",
    "MIL",
    "KCF",
    "CSRT",
    "TLD",
    "MEDIANFLOW",
    "GOTURN",
    "MOSSE",
]

# Change the index to change the tracker type
tracker_type = tracker_types[2]

if tracker_type == "BOOSTING":
    tracker = cv2.legacy.TrackerBoosting.create()
elif tracker_type == "MIL":
    tracker = cv2.legacy.TrackerMIL.create()
elif tracker_type == "KCF":
    tracker = cv2.TrackerKCF.create()
elif tracker_type == "CSRT":
    tracker = cv2.TrackerCSRT.create()
elif tracker_type == "TLD":
    tracker = cv2.legacy.TrackerTLD.create()
elif tracker_type == "MEDIANFLOW":
    tracker = cv2.legacy.TrackerMedianFlow.create()
elif tracker_type == "GOTURN":
    tracker = cv2.TrackerGOTURN.create()
else:
    tracker = cv2.legacy.TrackerMOSSE.create()

Read & Output Videos

# Read video
video = cv2.VideoCapture(video_input_file_name)

# Exit if video not opened
if not video.isOpened():
    print("Could not open video")
    sys.exit()

# Read the first frame and get the frame size
ok, frame = video.read()
width = int(video.get(cv2.CAP_PROP_FRAME_WIDTH))
height = int(video.get(cv2.CAP_PROP_FRAME_HEIGHT))

video_output_file_name = "race_car-" + tracker_type + ".mp4"
video_out = cv2.VideoWriter(video_output_file_name, cv2.VideoWriter_fourcc(*"XVID"), 10, (width, height))

video_output_file_name

# OUTPUT
'race_car-GOTURN.mp4'

Define Bounding Box

Draw Box

- Here we simply take the first frame, read from the video above, and draw the box on it

- Note: here we give the box coordinates manually, but that’s not practical. We want the model to detect the center and draw the box automatically (why else are we going through this, right?)

# Define a bounding box

bbox = (1300, 405, 160, 120)

# bbox = cv2.selectROI(frame, False)

# print(bbox)

displayRectangle(frame, bbox)
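A hand-typed box is easy to get wrong, for example by letting it run past the edge of the frame. The sketch below clips an `(x, y, w, h)` box to the frame before it is handed to the tracker. The helper name `clamp_bbox` is hypothetical and the 1920x1080 frame size in the example is only an assumption; in the notebook you would pass the `width` and `height` read from the video.

```python
def clamp_bbox(bbox, frame_width, frame_height):
    """Clip an (x, y, w, h) box to the frame; return None if nothing is left."""
    x, y, w, h = bbox
    # Convert to corner coordinates and clip each one to the frame.
    x1 = max(0, min(int(x), frame_width))
    y1 = max(0, min(int(y), frame_height))
    x2 = max(0, min(int(x + w), frame_width))
    y2 = max(0, min(int(y + h), frame_height))
    if x2 <= x1 or y2 <= y1:
        return None
    return (x1, y1, x2 - x1, y2 - y1)

# Assumed 1920x1080 frame: the box from this post fits, so it comes back unchanged.
print(clamp_bbox((1300, 405, 160, 120), 1920, 1080))
# A box hanging over the bottom-right corner is trimmed to the visible part.
print(clamp_bbox((1850, 1000, 160, 120), 1920, 1080))
```

Returning `None` for a box that lies entirely outside the frame lets the caller stop before initializing the tracker on an empty region.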

Initialize Tracker

# Initialize tracker with first frame and bounding box

ok = tracker.init(frame, bbox)

Read Frame & Track

while True:
    ok, frame = video.read()

    if not ok:
        break

    # Start timer
    timer = cv2.getTickCount()

    # Update tracker
    ok, bbox = tracker.update(frame)

    # Calculate Frames per second (FPS)
    fps = cv2.getTickFrequency() / (cv2.getTickCount() - timer)

    # Draw bounding box
    if ok:
        drawRectangle(frame, bbox)
    else:
        drawText(frame, "Tracking failure detected", (80, 140), (0, 0, 255))

    # Display Info
    drawText(frame, tracker_type + "-Version Tracker", (80, 60))
    drawText(frame, "FPS : " + str(int(fps)), (80, 100))

    # Write frame to video
    video_out.write(frame)

video.release()
video_out.release()

FFMPEG

FFmpeg is an open source media tool you can use to convert any video format into the one you need.

Install

- Go to source

- Download zip file to computer

- Unzip to: ~\Program Files\ffmpeg

- Go into settings and add the folder to your PATH environment variable: ~\Program Files\ffmpeg\bin

- Open terminal

~>ffmpeg

ffmpeg version N-118315-g4f3c9f2f03-20250115 Copyright (c) 2000-2025 the FFmpeg developers

built with gcc 14.2.0 (crosstool-NG 1.26.0.120_4d36f27)

configuration: --prefix=/ffbuild/prefix --pkg-config-flags=--static --pkg-config=pkg-config --cross-prefix=x86_64-w64-mingw32- --arch=x86_64 --target-os=mingw32 --enable-gpl --enable-version3 --disable-debug --enable-shared --disable-static --disable-w32threads --enable-pthreads --enable-iconv --enable-zlib --enable-libfreetype --enable-libfribidi --enable-gmp --enable-libxml2 --enable-lzma --enable-fontconfig --enable-libharfbuzz --enable-libvorbis --enable-opencl --disable-libpulse --enable-libvmaf --disable-libxcb --disable-xlib --enable-amf --enable-libaom --enable-libaribb24 --enable-avisynth --enable-chromaprint --enable-libdav1d --enable-libdavs2 --enable-libdvdread --enable-libdvdnav --disable-libfdk-aac --enable-ffnvcodec --enable-cuda-llvm --enable-frei0r --enable-libgme --enable-libkvazaar --enable-libaribcaption --enable-libass --enable-libbluray --enable-libjxl --enable-libmp3lame --enable-libopus --enable-librist --enable-libssh --enable-libtheora --enable-libvpx --enable-libwebp --enable-libzmq --enable-lv2 --enable-libvpl --enable-openal --enable-libopencore-amrnb --enable-libopencore-amrwb --enable-libopenh264 --enable-libopenjpeg --enable-libopenmpt --enable-librav1e --enable-librubberband --enable-schannel --enable-sdl2 --enable-libsnappy --enable-libsoxr --enable-libsrt --enable-libsvtav1 --enable-libtwolame --enable-libuavs3d --disable-libdrm --enable-vaapi --enable-libvidstab --enable-vulkan --enable-libshaderc --enable-libplacebo --disable-libvvenc --enable-libx264 --enable-libx265 --enable-libxavs2 --enable-libxvid --enable-libzimg --enable-libzvbi --extra-cflags=-DLIBTWOLAME_STATIC --extra-cxxflags= --extra-libs=-lgomp --extra-ldflags=-pthread --extra-ldexeflags= --cc=x86_64-w64-mingw32-gcc --cxx=x86_64-w64-mingw32-g++ --ar=x86_64-w64-mingw32-gcc-ar --ranlib=x86_64-w64-mingw32-gcc-ranlib --nm=x86_64-w64-mingw32-gcc-nm --extra-version=20250115

libavutil 59. 55.100 / 59. 55.100

libavcodec 61. 31.101 / 61. 31.101

libavformat 61. 9.106 / 61. 9.106

libavdevice 61. 4.100 / 61. 4.100

libavfilter 10. 6.101 / 10. 6.101

libswscale 8. 13.100 / 8. 13.100

libswresample 5. 4.100 / 5. 4.100

libpostproc 58. 4.100 / 58. 4.100

Universal media converter

usage: ffmpeg [options] [[infile options] -i infile]... {[outfile options] outfile}...

Use -h to get full help or, even better, run 'man ffmpeg'

I had a lot of problems getting ffmpeg to work from within the venv in Jupyter Notebook, until I finally pointed to the ffmpeg.exe file using its full path. This was necessary even though the folder is set up in my PATH and ffmpeg can be run readily from the command prompt and from Python.
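A small sketch of that workaround: ask the standard library whether `ffmpeg` is visible on the PATH of the running kernel, and fall back to an explicit location if it is not. The helper name `find_executable` is hypothetical, and the fallback path is the install location used in this post, so adjust it to yours.

```python
import os
import shutil

def find_executable(name, fallbacks=()):
    """Return the path of `name` from PATH, else the first existing fallback, else None."""
    found = shutil.which(name)
    if found:
        return found
    for candidate in fallbacks:
        if os.path.isfile(candidate):
            return candidate
    return None

# The fallback is the install location used in this post; adjust it to yours.
ffmpeg_path = find_executable("ffmpeg", [r"C:\Program Files\ffmpeg\bin\ffmpeg.exe"])
print(ffmpeg_path or "ffmpeg not found")
```

If this prints the fallback path instead of a PATH hit, the notebook kernel is not seeing the same PATH as your command prompt, which matches the behavior described above.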

Anyways

Convert

Method 1

- As you see in the comments, I used crf=24 (just above the x264 default of 23), which gives us a size of 5700KB

- The original size of the input video is 8600KB
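A commonly quoted rule of thumb for x264 is that moving the CRF value by 6 roughly doubles or halves the file size. As a rough sketch, that rule can be turned into an estimate starting from the 5700KB measured here at crf=24. The function name `estimate_size_kb` is hypothetical, and the result is only a ballpark: real sizes depend heavily on the content of the clip.

```python
def estimate_size_kb(known_size_kb, known_crf, target_crf):
    """Scale a known size by a factor of 2 for every 6 CRF steps toward lower CRF."""
    return known_size_kb * 2 ** ((known_crf - target_crf) / 6)

print(round(estimate_size_kb(5700, 24, 18)))  # 11400
print(round(estimate_size_kb(5700, 24, 30)))  # 2850
```

The estimate of about 11400KB for crf=18 is in line with the more than 10000KB I actually got at that setting.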

# To set the encoding to H.264 use: -c:v libx264

# -crf sets the quality of the video encoding on a scale of 0-51 (23 is the default for x264): a lower value gives better quality but a larger file. Raising it by 6 roughly halves the size and lowering it by 6 roughly doubles it. Here crf=18 gave more than 10000KB, while crf=24 gave 5700KB

# -c:a copy copies the audio stream from the input file without re-encoding it

# -c:a aac is the audio codec commonly used with H.264 if you wish to re-encode the audio (our clip is silent, so just copy or ignore it)

import ffmpeg

ffmpeg_path = r"C:\Program Files\ffmpeg\bin\ffmpeg.exe"

input_video = 'race_car-GOTURN.mp4'

output_video = 'race_car_track_x264.mp4'

stream = ffmpeg.input(input_video)

stream = ffmpeg.output(stream, output_video, vcodec="libx264", crf=24, acodec="copy")

ffmpeg.run(stream, cmd=ffmpeg_path)

Method 2

import ffmpeg

ffmpeg_path = r"C:\Program Files\ffmpeg\bin\ffmpeg.exe"

input_file = 'race_car-GOTURN.mp4'

output_file = 'blah2.mp4'

try:
    # Pass the executable explicitly through the cmd keyword
    ffmpeg.input(input_file).output(output_file, vcodec="libx264").run(cmd=ffmpeg_path)
    print(f"Successfully converted {input_file} to {output_file}")
except ffmpeg.Error as e:
    print(f"An error occurred: {e}")

Display GOTURN

# Read the converted video and embed it in the notebook as a base64 data URL
with open("race_car_track_x264.mp4", "rb") as f:
    mp4 = f.read()

data_url = "data:video/mp4;base64," + b64encode(mp4).decode()

HTML(f"""<video width=1024 controls><source src="{data_url}" type="video/mp4"></video>""")

Display CSRT

Convert CSRT

import ffmpeg

ffmpeg_path = r"C:\Program Files\ffmpeg\bin\ffmpeg.exe"

input_video = 'race_car-CSRT.mp4'

output_video = 'race_car_track-CSRT_x264.mp4'

stream = ffmpeg.input(input_video)

stream = ffmpeg.output(stream, output_video, vcodec="libx264", crf=24, acodec="copy")

ffmpeg.run(stream, cmd=ffmpeg_path)
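The conversion cells for GOTURN and CSRT differ only in their file names, so the same step can be sketched as one helper that builds a plain ffmpeg command line for any tracker output. This is an alternative to the `ffmpeg` Python package used above, not the method this post ran. The helper name `build_ffmpeg_args` and the output naming pattern are hypothetical; the flags are the ones described in the comments of Method 1, plus `-y` to overwrite an existing output file.

```python
def build_ffmpeg_args(ffmpeg_path, input_video, output_video, crf=24):
    """Build the argument list for an H.264 re-encode at the given CRF."""
    return [
        ffmpeg_path,
        "-y",                # overwrite the output file if it already exists
        "-i", input_video,
        "-c:v", "libx264",   # encode video as H.264
        "-crf", str(crf),    # quality setting, lower means larger files
        "-c:a", "copy",      # pass any audio stream through unchanged
        output_video,
    ]

# Path from this post; adjust it to your own install location.
ffmpeg_path = r"C:\Program Files\ffmpeg\bin\ffmpeg.exe"

for tracker_type in ("GOTURN", "CSRT"):
    args = build_ffmpeg_args(
        ffmpeg_path,
        f"race_car-{tracker_type}.mp4",
        f"race_car_track-{tracker_type}_x264.mp4",  # hypothetical naming pattern
    )
    print(" ".join(args))
    # To actually run the conversion: subprocess.run(args, check=True)
```

Building the list separately from running it makes it easy to print and inspect the exact command before committing to a long encode.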